User Guide Overview

The QuantaStor Users Guide focuses on how to configure your hosts to access storage volumes (iSCSI targets) and network shares in your QuantaStor storage system. The sections below cover NFS client configuration, VMware and XenServer configuration (including the XenServer multipath driver settings), and how to configure the iSCSI initiator on a Virtual Storage Appliance so that it can log in and access your volumes.

Contents

- 1 NFS Configuration

- 2 VMware Configuration

- 3 XenServer Configuration

- 4 Virtual Storage Appliance iSCSI Configuration

- 4.1 Configuring Your SAN for the VSA

- 4.2 Configuration Summary

- 4.3 Installing the iSCSI Initiator service on the VSA

- 4.4 Adding a Host entry for the VSA into the PSA

- 4.5 Logging into the PSA target storage volume from the VSA

- 4.6 Verifying that the Storage is Available

- 4.7 Importing the Storage into QuantaStor

- 4.8 Persistent Logins / Automatic iSCSI Login after Reboot

- 4.9 XenServer NIC Optimization

- 4.10 Troubleshooting

NFS Configuration

This section outlines how to set up your servers/hosts to access QuantaStor Network Shares via the NFS protocol.

Access Control / Permissions Configuration

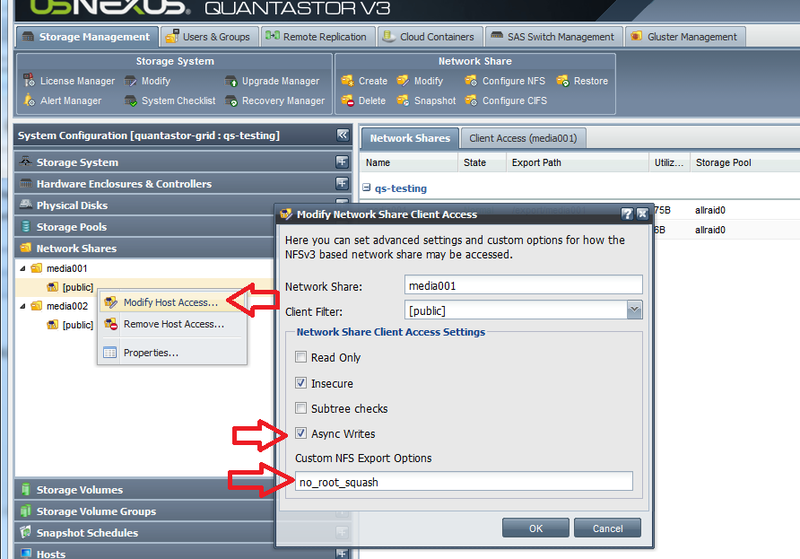

Unlike CIFS, access control via NFS is managed by granting access to a specific set of IP addresses. By default, new Network Shares allow public access from all IP addresses, which the QuantaStor web UI shows as a [public] network share client entry. The default access control configuration also "squashes" rights from remote "root" user accounts to the "nobody" user ID as a security precaution. This may cause problems with some applications, and in those cases you can apply the 'no_root_squash' custom option to your network share. If you've switched the share to NFSv4, the 'no_root_squash' option may also be required unless you've manually configured the system to use Kerberos user authentication.

no_root_squash Configuration

To configure the 'no_root_squash' option, first go to the QuantaStor web management interface and expand the tree where you see Network Shares. Under your share ('media001' in this example) you'll see a host access entry called \[public\]. Right-click on that entry and choose 'Modify Host Access..'. In this screen, enter no_root_squash in the Custom NFS Export Options section. This allows you to modify files in the share as root. You'll probably also want to enable 'Async Writes', which will boost performance a bit. Once you're done click 'OK'.

NFSv4 Mount Procedure

Continuing the above example, if you've switched from NFSv3 mode to NFSv4 you'll need to unmount the share (if you already have it mounted) and then re-mount it:

umount /media001

Now mount the share in NFSv4 mode like so:

mount -t nfs4 192.168.0.103:/media001 /media001

Note that we've added the -t nfs4 option and that we've left the /export prefix out of the mount path. That's important: do not try mounting an NFSv4 network share with the /export prefix. It may work for a while but you will inevitably have problems with this.
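The path rule above can be captured in a small helper. This is a minimal sketch, not part of QuantaStor: the function name `nfs_mount_source` is hypothetical, and the IP address and share name are the ones used in this example. It assumes NFSv3 mounts use the /export prefix (as shown in the CentOS section) and NFSv4 mounts do not.

```shell
# Print the NFS mount source for a QuantaStor network share.
# NFSv3 paths carry the /export prefix; NFSv4 paths must not.
# Usage: nfs_mount_source <server-ip> <share-name> <3|4>
nfs_mount_source() {
    case "$3" in
        4) printf '%s:/%s\n' "$1" "$2" ;;
        3) printf '%s:/export/%s\n' "$1" "$2" ;;
        *) echo "unsupported NFS version: $3" >&2; return 1 ;;
    esac
}

# Example (run as root on the client):
#   mount -t nfs4 "$(nfs_mount_source 192.168.0.103 media001 4)" /media001
```

Keeping the rule in one place makes it harder to mount an NFSv4 share with the /export prefix by accident.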

Resolving Stale File Handle issue on older RedHat / CentOS 5.x systems

We've seen some problems with the NFS client that comes with CentOS 5.5 which lead to the NFS connection dropping, after which you'll see 'Stale File Handle' errors when you try to mount the share. The quick fix for this is to log in to your QuantaStor system and run this command:

sudo /opt/osnexus/quantastor/bin/nfskeepalive --cron

This generates a small amount of periodic activity on the active shares so that the connections do not get dropped and you don't get stale file handles. CentOS 5.5 has the 2.6.18 kernel which is several years old now and we don't see this problem with the newer kernels used in CentOS 6.x.

RedHat / CentOS 6.x NFS Configuration

To set up your system to communicate with QuantaStor over NFS you'll first need to log in as root and install a couple of packages like so:

yum install nfs-utils nfs-utils-lib

Once NFS is installed you can now mount the Network Shares that you've created in your QuantaStor system. To do this first create a directory to mount the share to. In this example our share name is 'media001'.

mkdir /media001

Now we can mount the share to that directory by providing the IP address of the QuantaStor system (192.168.0.103 in this example) and the name of the share with the /export prefix like so:

mount 192.168.0.103:/export/media001 /media001
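If you want the share mounted again after a reboot, a common approach on Linux clients is an /etc/fstab entry. The sketch below only prints a candidate line for you to review. The helper name `nfs_fstab_line` and the `defaults,_netdev` options are assumptions on our part, not QuantaStor requirements.

```shell
# Print an /etc/fstab line for an NFSv3 QuantaStor network share.
# Usage: nfs_fstab_line <server-ip> <share-name> <mount-point>
nfs_fstab_line() {
    # _netdev asks the system to wait for networking before mounting.
    printf '%s:/export/%s %s nfs defaults,_netdev 0 0\n' "$1" "$2" "$3"
}

# Print the line for the example share used in this section.
nfs_fstab_line 192.168.0.103 media001 /media001
```

Review the printed line before appending it to /etc/fstab as root.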

Ubuntu / Debian NFS Configuration

The process for configuring NFS with Ubuntu and Debian is the same as above; only the package installation is different. To install the NFS client package, issue these commands at the console, then follow the same instructions given above for mounting shares on CentOS:

sudo -i

apt-get update

apt-get install nfs-common
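If you script the client setup for a mix of systems, the package difference can be isolated in one place. This is a rough sketch: both function names are hypothetical, and the package names are the ones given in the two sections above.

```shell
# Print the NFS client package(s) for a distribution family.
# Usage: nfs_client_packages <redhat|debian>
nfs_client_packages() {
    case "$1" in
        redhat) echo "nfs-utils nfs-utils-lib" ;;
        debian) echo "nfs-common" ;;
        *) echo "unknown distribution family: $1" >&2; return 1 ;;
    esac
}

# Guess the family from whichever package manager is installed.
detect_distro_family() {
    if command -v apt-get >/dev/null 2>&1; then
        echo debian
    elif command -v yum >/dev/null 2>&1; then
        echo redhat
    else
        return 1
    fi
}

# Example:
#   family=$(detect_distro_family) && echo "install: $(nfs_client_packages "$family")"
```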

VMware Configuration

Creating VMware Datastore

Using Fibre Channel

Setting up a datastore in VMware using fibre channel takes just a few simple steps.

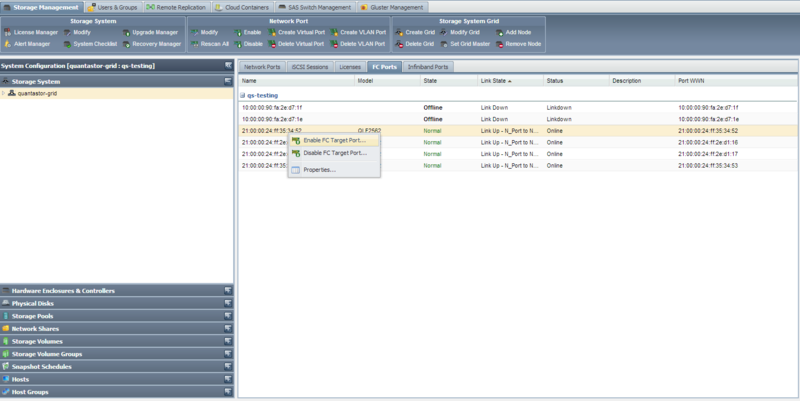

- First verify that the fibre channel ports are enabled from within QuantaStor. You can enable them by right clicking on the port and selecting 'Enable FC Target Port'.

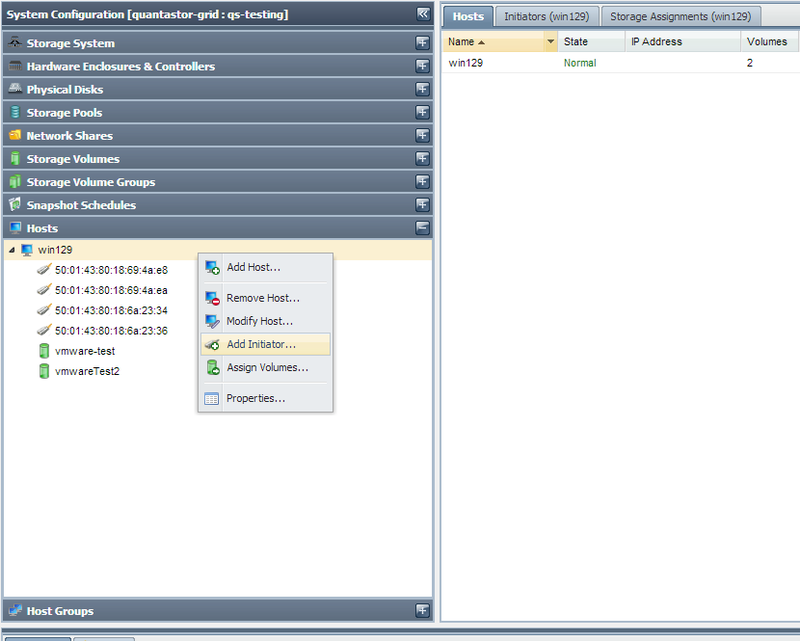

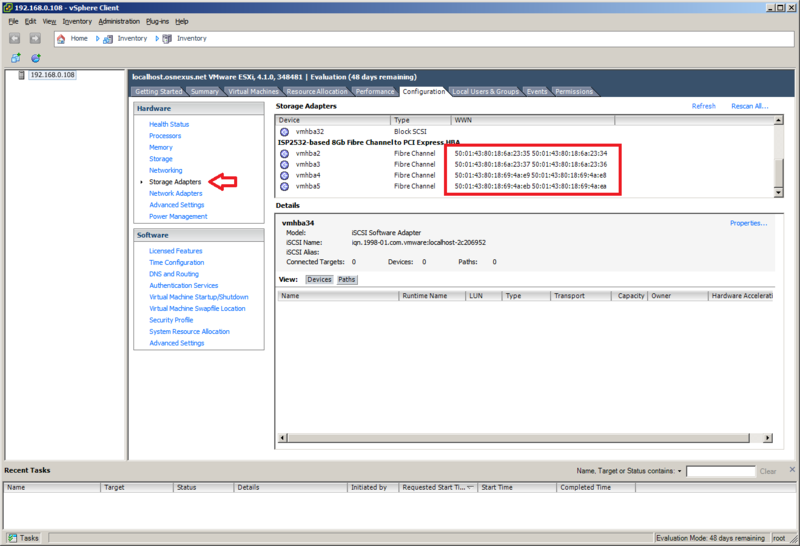

- Create a host entry within QuantaStor, and add all of the WWNs for the fibre channel adapters. To add more than one initiator to the QuantaStor host entry, right click on the host and select 'Add Initiator'. On the VMware side, the WWNs can be found in the 'Configuration' tab, under the 'Storage Adapters' section.

- Now add the desired storage volume to the host you just created. This storage volume will be the Datastore that is within VMware.

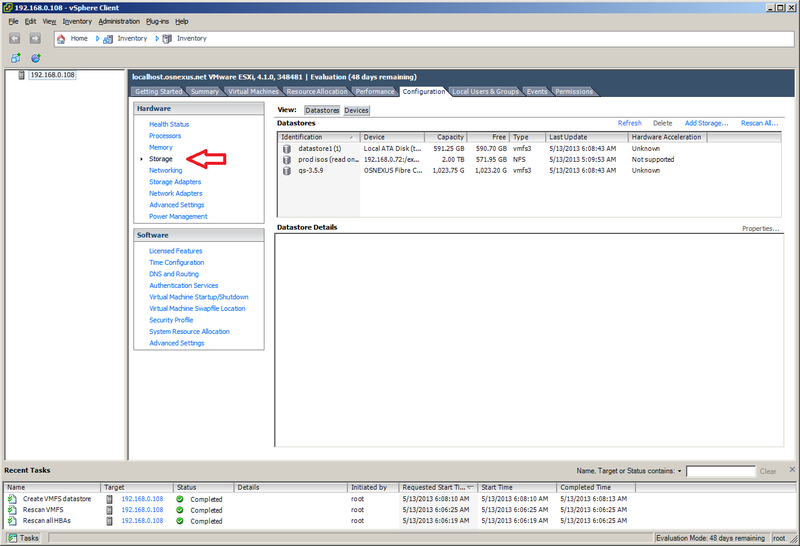

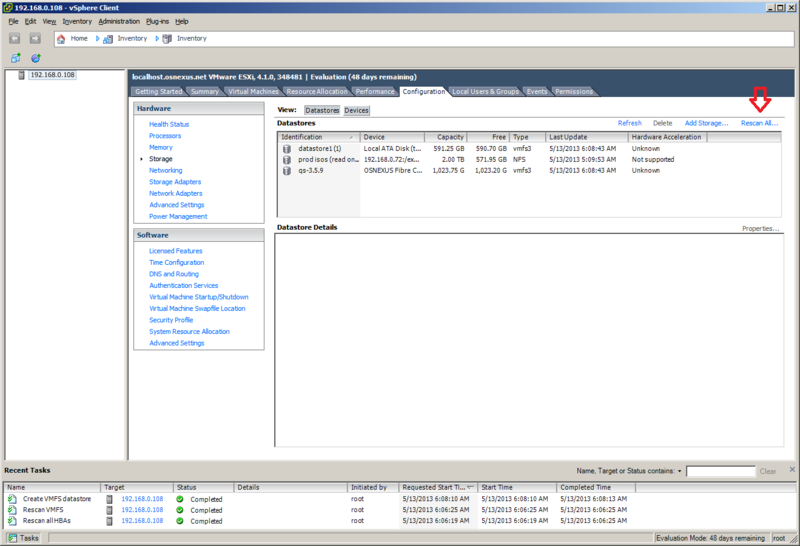

- Next, navigate to the 'Storage' section of the 'Configuration' tab in VMware and select 'Rescan All...'. In the rescan window that pops up, make sure that 'Scan for New Storage Devices' is selected and click 'OK'.

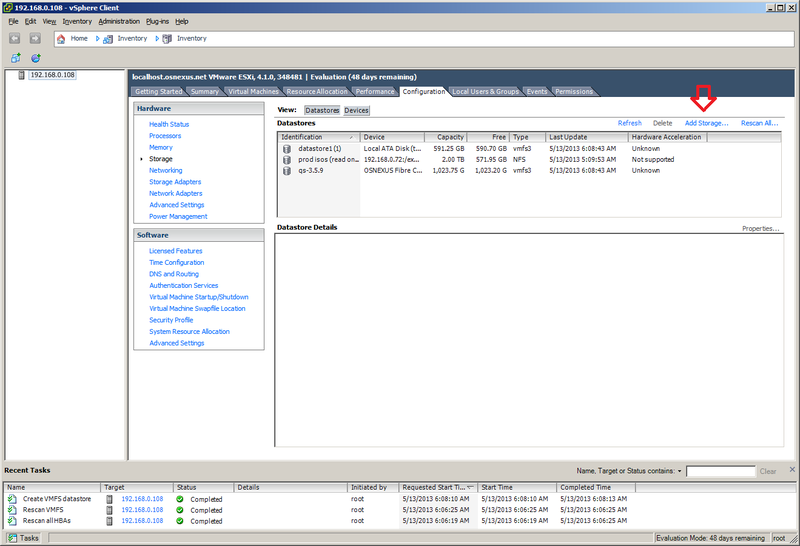

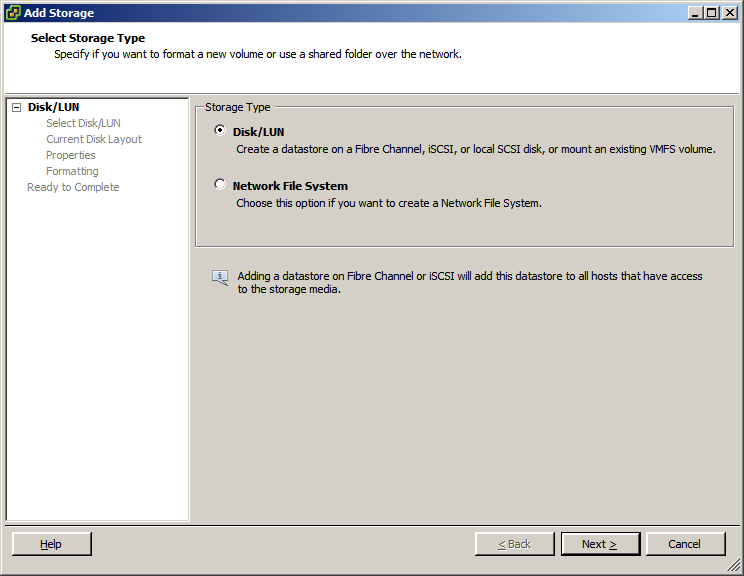

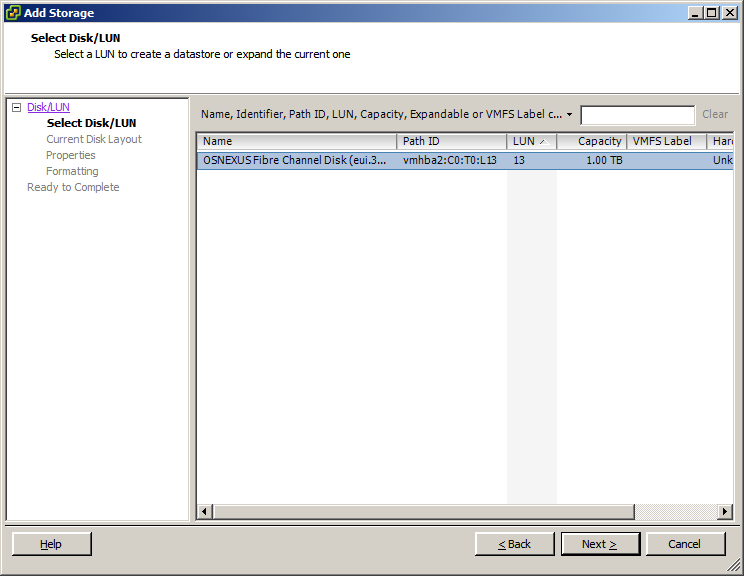

- After the scan is finished (you can see the progress of the task at the bottom of the screen), select the 'Add Storage...' option. Make sure that 'Disk/LUN' is selected and click next. If everything is configured correctly you should now see the storage volume that was assigned before. Select the storage volume and finish the wizard.

You should now be able to use the storage volume that was assigned from within QuantaStor as a datastore on the VMware server.

Using iSCSI

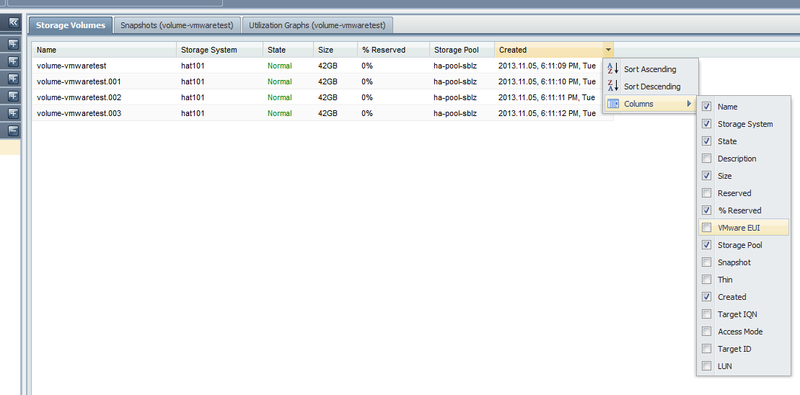

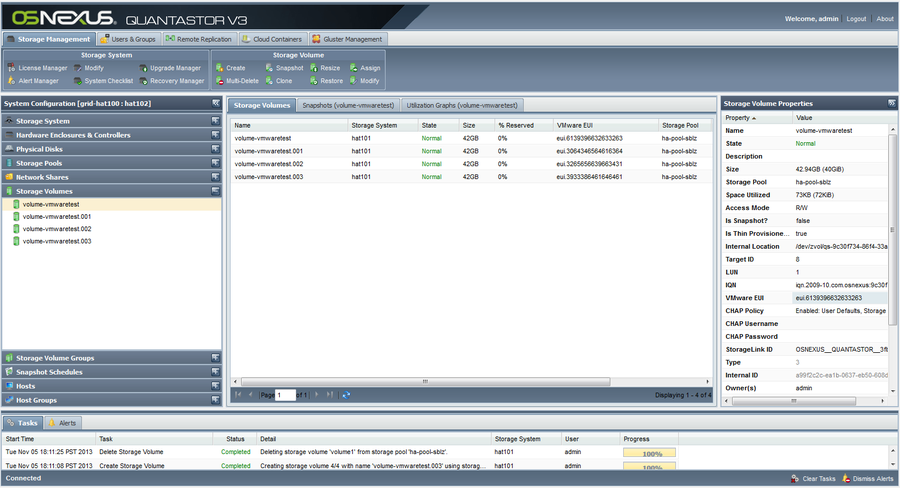

The VMware EUI unique identifier for any given volume can be found in the properties page for the Storage Volume in the QuantaStor web management interface. There is also a column you can activate as shown in this screenshot.

This screen shows a list of Storage Volumes and their associated VMware EUIs which you can use to correlate the Storage Volumes with your iSCSI device list in VMware vSphere.

Here is a video explaining the steps to set up a datastore using iSCSI: Creating a VMware vSphere iSCSI Datastore with QuantaStor Storage

Using NFS

Here is a video explaining the steps to set up a datastore using NFS: Creating a VMware datastore using an NFS share on QuantaStor Storage

XenServer Configuration

Creating XenServer Storage Repositories (SRs)

Generally speaking, there are three types of XenServer storage repositories (SRs) you can use with QuantaStor.

- iSCSI Software SR

- StorageLink SR

- NFS SR

The 'iSCSI Software SR' allows you to assign a single QuantaStor storage volume to XenServer, and XenServer will then create multiple virtual disks (VDIs) within that one storage volume/LUN. This route is easy to set up but it has some drawbacks:

**These pros and cons are outdated**

iSCSI Software SR

- Pros:

- Easy to setup

- Cons:

- The disk is formatted and laid out using LVM so the disk cannot be easily accessed outside of XenServer

- The custom LVM disk layout makes it so that you cannot easily migrate the VM to a physical machine or other hypervisors like Hyper-V & VMware.

- No native XenServer support for hardware snapshots & cloning of VMs

- Potential for spindle contention problems which reduces performance when the system is under high load.

- Within QuantaStor manager you only see a single volume so you cannot setup custom snapshot schedules per VM and cannot roll-back a single VM from snapshot.

The second and preferred route is to create your VMs using a Citrix StorageLink SR. With StorageLink there is a one-to-one mapping of a QuantaStor storage volume to each virtual disk (VDI) seen by each XenServer virtual machine.

Citrix StorageLink SR

- Pros:

- Each VM is on a separate LUN(s)/storage volume(s) so you can setup custom snapshot policies

- Leverage hardware snapshots and cloning to rapidly deploy large numbers of VMs

- Enables migration of VMs between XenServer and Hyper-V using Citrix StorageLink

- Enables one to setup custom off-host backup policies and scripts for each individual VM

- Easier migration of VMs between XenServer resource pools

- VM Disaster Recovery support via StorageLink (requires QuantaStor Platinum Edition)

- Cons:

- Requires a Windows host or VM to run StorageLink on.

- Some additional licensing costs to run Citrix Essentials depending on your configuration.

- (A free version exists which supports 1 storage system)

With the next version of Citrix StorageLink we'll be directly integrated so it will be just as easy to get going using the StorageLink SR if not easier as it will do all the array side configuration for you automatically.

XenServer NFS Storage Repository Setup & Configuration

XenServer iSCSI Storage Repository Setup & Configuration

Creating a XenServer Software iSCSI SR

Now that we've discussed the pros/cons, let's get down to creating a new SR. We'll start with the traditional "one big LUN" iSCSI Software SR. The name is perhaps a little misleading, but it's called a 'Software' SR because Citrix is trying to make clear that the iSCSI software initiator is utilized to connect to your storage hardware. Here's a summary of the steps you'll need to take within the QuantaStor Manager web interface before you create the SR.

- Login to QuantaStor Manager

- Create a storage volume that is large enough to fit all the VMs you're going to create.

- Note: If you use a large thin-provisioned volume that will give you the greatest flexibility.

- Add a host for each node in your XenServer resource pool

- Note: You'll want to identify each XenServer host by its IQN. See the troubleshooting section for a picture of XenCenter showing a server's initiator IQN.

- (optional) Create a host group that combines all the Hosts

- Assign the storage volume to the host group

Alternatively you can assign the storage to each host individually but you'll save a lot of time by assigning it to a host group and it makes it easier to create more SRs down the road.

After you have the storage volume created and assigned to your XenServer hosts, you can now create the SR.

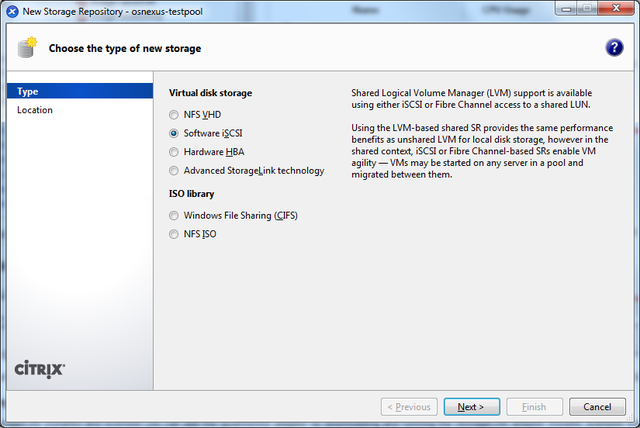

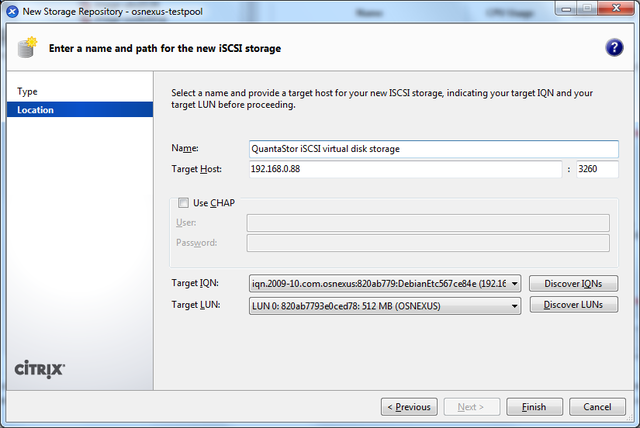

This picture shows the iSCSI Software SR type selected.

This screen captures the main page of the wizard. Enter the IP address of the QuantaStor storage system and then press the Discover Targets and Discover LUNs buttons to find the available devices. Once you've selected the target and LUN, press OK to complete the mapping.

Optimal XenServer iSCSI timeout settings

Since the virtual machines on the XenServer will be using the iSCSI SR for their boot device, it is important to change the timeout intervals so that the iSCSI layer has several chances to try to re-establish a session and so that commands are not quickly requeued to the SCSI layer. This is especially important in environments with potential for high latency.

Per the README from open-iSCSI, we recommend the following settings in the iscsid.conf file for a XenServer.

- Turn off iSCSI pings by setting:

- node.conn[0].timeo.noop_out_interval = 0

- node.conn[0].timeo.noop_out_timeout = 0

- Set the replacement_timeout to a very long value:

- node.session.timeo.replacement_timeout = 86400
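The settings above can be applied with a small script instead of editing by hand. This is a sketch under a few assumptions: the function names are hypothetical, GNU sed is available (for `-i`), and your iscsid.conf lives at the usual open-iscsi location, /etc/iscsi/iscsid.conf. Back up the file first.

```shell
# Set "key = value" in an iscsid.conf-style file: rewrite the existing
# uncommented line if there is one, otherwise append a new line.
# Usage: set_iscsid_option <conf-file> <key> <value>
set_iscsid_option() {
    conf_file=$1; key=$2; value=$3
    # Escape '.' and '[' so the key is matched literally by grep and sed.
    key_re=$(printf '%s' "$key" | sed -e 's/\./\\./g' -e 's/\[/\\[/g')
    if grep -q "^[[:space:]]*${key_re}[[:space:]]*=" "$conf_file"; then
        sed -i "s|^[[:space:]]*${key_re}[[:space:]]*=.*|${key} = ${value}|" "$conf_file"
    else
        printf '%s = %s\n' "$key" "$value" >> "$conf_file"
    fi
}

# Apply the three timeout settings recommended above.
# Usage: apply_xenserver_iscsi_timeouts <conf-file>
apply_xenserver_iscsi_timeouts() {
    set_iscsid_option "$1" 'node.conn[0].timeo.noop_out_interval' 0
    set_iscsid_option "$1" 'node.conn[0].timeo.noop_out_timeout' 0
    set_iscsid_option "$1" 'node.session.timeo.replacement_timeout' 86400
}

# Example (as root on each XenServer host):
#   cp -p /etc/iscsi/iscsid.conf /etc/iscsi/iscsid.conf.orig
#   apply_xenserver_iscsi_timeouts /etc/iscsi/iscsid.conf
```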

Installing the XenServer multipath configuration settings

XenServer utilizes the Linux device-mapper multipath driver (dmmp) to enable support for hardware multi-pathing. The dmmp driver has a configuration file called multipath.conf that contains the multipath mode and failover rules for each vendor. There are some special rules that need to be added to that file for QuantaStor as well. We're working closely with Citrix to get this integrated so that everything is pre-installed with their next product release but until then, the following changes will need to be implemented. You'll need to edit the /etc/multipath-enabled.conf file located on each of your XenServer dom0 nodes. In that file, just find the last device { } section and add this additional one for QuantaStor:

    device {
        vendor "OSNEXUS"
        product "QUANTASTOR"
        getuid_callout "/sbin/scsi_id -g -u -s /block/%n"
        path_selector "round-robin 0"
        path_grouping_policy multibus
        failback immediate
        rr_weight uniform
        rr_min_io 100
        path_checker tur
    }

- A correctly configured multipath-enabled.conf file including the above changes can be downloaded from our website: multipath-enabled.conf

- Using the wget command the file can be downloaded directly from your XenServer host. We suggest you save a copy of your original file.
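Before and after editing, it helps to keep a copy of the original file and to confirm the QuantaStor entry is actually present. The helpers below are a hedged sketch with hypothetical function names. The check only looks for the vendor line; it does not validate the rest of the device section.

```shell
# Save a copy of the original file next to it, unless a copy already exists.
# Usage: backup_multipath_conf <conf-file>
backup_multipath_conf() {
    [ -e "$1.orig" ] || cp -p "$1" "$1.orig"
}

# Succeed if the file contains a device entry with vendor "OSNEXUS".
# Usage: has_quantastor_device <conf-file>
has_quantastor_device() {
    grep -q 'vendor[[:space:]]*"OSNEXUS"' "$1"
}

# Example (as root on each XenServer dom0 node):
#   backup_multipath_conf /etc/multipath-enabled.conf
#   has_quantastor_device /etc/multipath-enabled.conf || echo "QuantaStor entry missing"
```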

XenServer StorageLink Storage Repository Setup & Configuration

The following notes on StorageLink support only apply to XenServer version 6.x. Starting with version 2.7 QuantaStor now has an adapter to work with the StorageLink features that have been integrated with XenServer.

Installing the QuantaStor Adapter for Citrix StorageLink

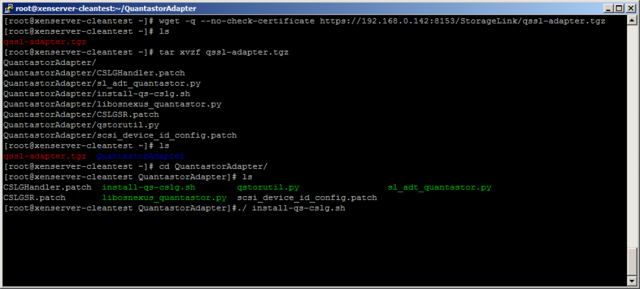

Installing the adapter takes just a few simple steps. First, get the files from the QuantaStor box. To do this you can use the command below with the IP address of your QuantaStor system:

wget -q --no-check-certificate https://<quantastor-ip-address>:8153/StorageLink/qssl-adapter.tgz

You can also get the file by downloading it using the following command. This is recommended if you're using bonding as this version includes a fix for that. (Note: QuantaStor v3.6 will include the updated StorageLink tar file, so the above command will work for that version)

wget http://packages.osnexus.com/patch/qssl-adapter.tgz

Next, unpack the .tgz file returned by running the command:

tar zxvf qssl-adapter.tgz

After the file is unpacked, cd into the directory.

cd QuantastorAdapter/

Now you can run the installer file. This should copy the adapter files to the correct directories, along with patching a few minor changes to the StorageLink files. You can run the installer with the command:

./install-qs-cslg.sh

NOTE: These steps must be done on every XenServer host in the pool.
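Because the same steps have to be repeated on every host in the pool, you may want to generate them once and send them to each host. This is only a sketch: the function name `qssl_install_steps` and the host names in the usage comment are placeholders, and it assumes root SSH access to each XenServer host.

```shell
# Print the adapter installation steps for one XenServer host.
# Usage: qssl_install_steps <quantastor-ip-address>
qssl_install_steps() {
    cat <<EOF
wget -q --no-check-certificate https://$1:8153/StorageLink/qssl-adapter.tgz
tar zxvf qssl-adapter.tgz
cd QuantastorAdapter/
./install-qs-cslg.sh
EOF
}

# Example: run the steps on each pool member (xen01 and xen02 are placeholders).
#   for host in xen01 xen02; do
#       qssl_install_steps 192.168.0.103 | ssh "root@$host" sh -e
#   done
```

Running the remote shell with `-e` stops at the first failing step, so a failed download is not followed by an install attempt.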

QuantaStor Utility Script

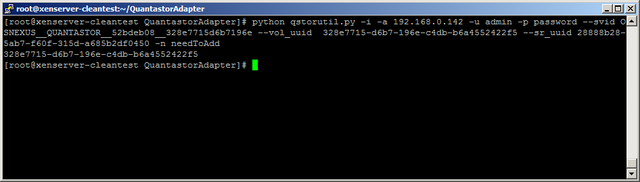

The installer also comes with a file named qstorutil.py. This file can be executed with specific command line arguments to discover volumes, create storage repositories, and introduce preexisting volumes. The file is also copied to “/usr/bin/qstorutil.py” during the installation of the QuantaStor adapter for easier access.

The script has a help page with examples that can be accessed via: qstorutil.py --help

While the script is the easiest way to create storage repositories or introduce volumes, the adapter will work just fine using the xe commands. We provide the qstorutil.py to make the configuration simpler due to the large number of somewhat complex arguments required by xe.

Creating XenServer Storage Repositories (SRs)

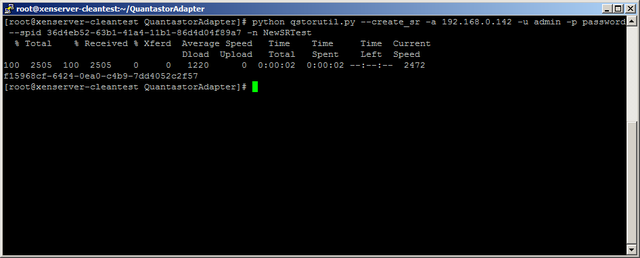

The easiest way to create a XenServer storage repository is to use the QuantaStor utility script. To do this you can run either of the commands below:

qstorutil.py --create_sr -a <quantastor-ip-address> -u admin -p password --spid <quantastor-storage-pool-uuid> -n <sr-name>

qstorutil.py --create_sr -a <quantastor-ip-address> -u admin -p password --sp_name <quantastor-storage-pool-name> -n <sr-name>

Both of these commands will create a storage repository. One takes the QuantaStor storage pool ID (spid) whereas the other takes the QuantaStor storage pool name (and uses it to look up the spid). When you create a storage repository, a small metadata volume is created. The metadata volume name will have the prefix “SL_XenStorage__” followed by a GUID.

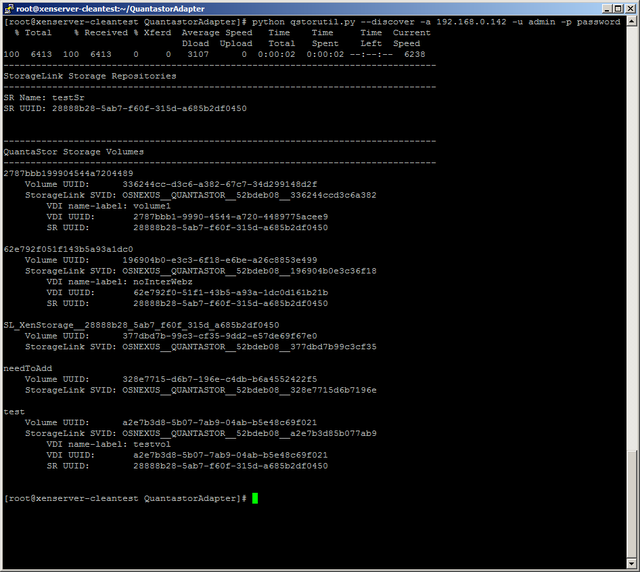

Storage Volume Discovery

Storage volume discovery will show you a list of all the volumes on the QuantaStor box specified (via IP address). For each volume the list gives the name, the QuantaStor ID, the StorageLink ID, the storage system name, and the storage system ID. If the volume is in a storage repository on the local XenServer system it will also have three additional fields: the VDI name, the VDI uuid, and the storage repository uuid.

In addition to listing all of the volumes, the discover command will also print out the storage repositories on the local XenServer system. The fields listed are the storage repository name, and the storage repository uuid.

To call the discovery command you can use the command below:

qstorutil.py --discover -a <quantastor-ip-address> -u admin -p password

Introducing a Preexisting Volume to XenServer

To introduce a preexisting volume to XenServer you should first run the storage volume discovery command. To introduce a volume run one of the three commands below:

qstorutil.py --vdi_introduce -a <quantastor-ip-address> -u admin -p password --vol_uuid <quantastor-volume-uuid> --sr_uuid <storage-repository-uuid> -n <vol-name>

qstorutil.py --vdi_introduce -a <quantastor-ip-address> -u admin -p password --svid <storagelink-volume-id> --sr_uuid <storage-repository-uuid> -n <vol-name>

qstorutil.py --vdi_introduce --svid <storagelink-volume-id> --vol_uuid <quantastor-volume-uuid> --sr_uuid <storage-repository-uuid> -n <vol-name>

Each of these commands does exactly the same thing; they just require slightly different parameters. Use whichever command works best for you. The reason for running the discover command before introducing a volume is that the vol_uuid, svid, and sr_uuid values are all provided in its output.

Virtual Storage Appliance iSCSI Configuration

With the rapid increase in storage density and compute power, the storage system is becoming just another workload, and this is what QuantaStor is ultimately focused on enabling with our Software Defined Storage platform. This section outlines how to set up QuantaStor as a virtual machine, a.k.a. a Virtual Storage Appliance (VSA), so that you can deliver dedicated virtual appliances to your internal customers. VSAs can get their storage from DAS in the server where the VM is running or via NAS or SAN storage. In this section we'll go over how to configure a VSA to get storage over iSCSI, which it can then use to create a pool of storage for sharing out over SAN/NAS protocols.

Configuring Your SAN for the VSA

You can use SAN storage from any physical storage system (QuantaStor, EqualLogic, etc) with your VSA, and the configuration steps will vary according to the vendor. For the purposes of this article we'll call the system that's delivering storage to the VSA the physical storage appliance, or PSA for short.

Configuration Summary

Assuming you're using QuantaStor for your PSA you'll need to create a Storage Volume, add a Host entry for the VSA and then assign the storage to the IQN of the VSA. Within the VSA we'll also need to install the iSCSI initiator software, configure it, then set it up to automatically log in to your QuantaStor PSA at boot time.

Installing the iSCSI Initiator service on the VSA

We're assuming that you have a VSA set up and booted at this point but have not yet created any storage pools because no storage has been delivered from the PSA to the VSA yet. So the first step is to install the open-iscsi initiator tools like so:

sudo apt-get install open-iscsi

Next you'll need to check to see what the IQN is for your VSA by running this command:

more /etc/iscsi/initiatorname.iscsi

The IQN will look something like this:

InitiatorName=iqn.1993-08.org.debian:01:5463d5881ec2

You can use that IQN as-is or modify this to make an IQN that better identifies your VSA system if you like but this is optional. For this example we'll use this as the VSA's initiator IQN:

InitiatorName=iqn.2012-01.com.quantastor:qstorvsa001
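If you do change the IQN, it's worth checking that the new value is well formed before adding it to the PSA. The helpers below are an illustrative sketch with hypothetical names. The pattern checks the general iqn.YYYY-MM.reversed-domain[:name] shape and is deliberately loose rather than a full validator.

```shell
# Print the initiator IQN stored in an initiatorname.iscsi file.
# Usage: read_initiator_iqn <file>
read_initiator_iqn() {
    sed -n 's/^InitiatorName=//p' "$1"
}

# Succeed if the argument looks like an IQN:
#   iqn.<year>-<month>.<reversed-domain>[:<name>]
# Usage: is_valid_iqn <iqn>
is_valid_iqn() {
    printf '%s\n' "$1" |
        grep -Eq '^iqn\.[0-9]{4}-[0-9]{2}\.[a-z0-9.-]+(:[^[:space:]]+)?$'
}

# Example:
#   iqn=$(read_initiator_iqn /etc/iscsi/initiatorname.iscsi)
#   is_valid_iqn "$iqn" && echo "VSA initiator IQN: $iqn"
```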

Adding a Host entry for the VSA into the PSA

The IQN we set for the VSA is 'iqn.2012-01.com.quantastor:qstorvsa001' in this example, and we'll need to add this to a new host entry in the PSA so that we can assign storage to the VSA from the PSA. In your QuantaStor system just select the Hosts section, right-click, choose 'Add Host' and add the VSA as a host identified by its IQN. Once the Host entry has been added for the VSA you'll need to assign a storage volume to it. We'll next use the iSCSI initiator on the VSA to log in to the PSA so that the VSA can consume that storage volume.

If you're having trouble getting the PSA configured remember that within the QuantaStor web management screen there's a 'System Checklist' button in the toolbar that will walk you through all of these configuration steps.

Logging into the PSA target storage volume from the VSA

Now that the tools are installed, and you've created a Storage Volume in the PSA and assigned it to the VSA host entry, you can log in to access that storage. The easy way to do this is to run this command, substituting the IP address of your PSA:

iscsiadm -m discovery -t st -p 192.168.0.137

This will have output that looks something like this:

192.168.0.137:3260,1 iqn.2009-10.com.osnexus:cd4c0fb9-bddfb4774939e62a:volume0

To log in to all the volumes you've assigned to the VSA you can run this simple command:

iscsiadm -m discovery -t st -p 192.168.0.137 --login

The output from that command looks like this:

    192.168.0.137:3260,1 iqn.2009-10.com.osnexus:cd4c0fb9-bddfb4774939e62a:volume0
    Logging in to [iface: default, target: iqn.2009-10.com.osnexus:cd4c0fb9-bddfb4774939e62a:volume0, portal: 192.168.0.137,3260]
    Login to [iface: default, target: iqn.2009-10.com.osnexus:cd4c0fb9-bddfb4774939e62a:volume0, portal: 192.168.0.137,3260]: successful

You have now logged into the storage volume on the PSA and your VSA now has additional storage it can access to create storage pools from which it can in turn deliver iSCSI storage volumes, network shares and more.
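If you've assigned several volumes to the VSA, the discovery output can be reduced to a plain list of target IQNs for checking against what you expect. This is a small sketch that assumes the output format shown above (portal, then target IQN). The function name is hypothetical.

```shell
# Read "iscsiadm -m discovery" output on stdin and print each target IQN once.
# Each record has the form "<ip>:<port>,<tpgt> <target-iqn>".
list_discovered_targets() {
    awk '$2 ~ /^iqn\./ { print $2 }' | sort -u
}

# Example (as root on the VSA):
#   iscsiadm -m discovery -t st -p 192.168.0.137 | list_discovered_targets
```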

Verifying that the Storage is Available

To verify that the storage is available you can run this command which will show you that your VSA now has a QuantaStor device attached:

more /proc/scsi/scsi

    Attached devices:
    Host: scsi1 Channel: 00 Id: 00 Lun: 00
      Vendor: VBOX     Model: CD-ROM           Rev: 1.0
      Type:   CD-ROM                           ANSI SCSI revision: 05
    Host: scsi4 Channel: 00 Id: 00 Lun: 00
      Vendor: OSNEXUS  Model: QUANTASTOR       Rev: 300
      Type:   Direct-Access                    ANSI SCSI revision: 06
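The same check can be scripted, for example to confirm the volume is present after a reboot. This is a minimal sketch with a hypothetical function name. It only looks for the OSNEXUS vendor string in the listing.

```shell
# Succeed if the SCSI device listing on stdin includes a QuantaStor volume.
has_quantastor_disk() {
    grep -q 'Vendor:[[:space:]]*OSNEXUS'
}

# Example (on the VSA):
#   has_quantastor_disk < /proc/scsi/scsi && echo "PSA storage is attached"
```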

Importing the Storage into QuantaStor

Now that the storage has arrived at the appliance you'll need to use the 'Scan for Disks...' dialog within your QuantaStor VSA to make the storage appear. Once it appears you can create a storage pool out of it and start using it.

Persistent Logins / Automatic iSCSI Login after Reboot

Last but not least you'll need to set up your VSA so that it connects to the PSA automatically at system startup. The easiest way to do this is to edit the /etc/iscsi/iscsid.conf file to enable automatic logins. The default is 'manual' so you'll need to change that to 'automatic' so that it looks like this:

    #*****************
    # Startup settings
    #*****************
    # To request that the iscsi initd scripts startup a session set to "automatic".
    node.startup = automatic
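The edit above can also be made from a script. This sketch assumes GNU sed (for `-i`). The function name is hypothetical, and you should keep a copy of the original file before running it.

```shell
# Set node.startup to "automatic" in an iscsid.conf-style file.
# Rewrites the existing uncommented setting, or appends one if none exists.
# Usage: enable_iscsi_auto_login <conf-file>
enable_iscsi_auto_login() {
    if grep -q '^[[:space:]]*node\.startup[[:space:]]*=' "$1"; then
        sed -i 's/^[[:space:]]*node\.startup[[:space:]]*=.*/node.startup = automatic/' "$1"
    else
        echo 'node.startup = automatic' >> "$1"
    fi
}

# Example (as root on the VSA):
#   cp -p /etc/iscsi/iscsid.conf /etc/iscsi/iscsid.conf.orig
#   enable_iscsi_auto_login /etc/iscsi/iscsid.conf
```

Note that with open-iscsi this default generally applies to targets discovered after the change. For targets you've already logged in to, the stored node records can be updated with `iscsiadm -m node -o update -n node.startup -v automatic`.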

Alternatively you could add a line to your /etc/rc.local file to log in just as you did above when you initially set up the VSA:

iscsiadm -m discovery -t st -p 192.168.0.137 --login

XenServer NIC Optimization

For those using XenServer you may see a nice boost in performance by replacing the default Realtek driver with the E1000 driver which has 1GbE support. Have a look at this article over at www.netservers.co.uk which outlines the hack.

Troubleshooting

If you had problems with the above you might try restarting the iSCSI initiator service with 'service iscsi-network-interface restart'. Do not restart the 'iscsi-target' service as that's the service that's serving targets from your VSA out to the hosts consuming the VSA storage. Note also that if you restart the 'iscsi-target' service by mistake you'll need to restart the 'quantastor' service to re-expose the iSCSI targets for that system.