Template:CephManagementOperations

All key setup and configuration options are configurable via the WebUI. Operations can also be automated using the QuantaStor REST API and CLI. Custom Ceph configuration settings, such as special configurations or custom CRUSH map settings, can also be applied at the console or over SSH. For these scenarios we recommend checking with OSNEXUS support or pre-sales engineering for advice before making any major changes.

Capacity Planning

One of the great features of QuantaStor's scale-out Ceph-based storage is that it is easy to expand, either by adding more storage to existing systems or by adding more systems to the cluster.

Expanding by adding more Systems

It is not required to use the same hardware and drives when you expand, but the new hardware should be of a comparable configuration so that data is re-balanced evenly. Expansion can be done one system at a time, and the OSDs on the new system should be roughly the same size as the OSDs on the existing systems.

Expanding by adding storage to existing Systems

If you add more OSDs to existing systems, be sure to expand multiple or all systems with the same number of new OSDs so that re-balancing can work efficiently. If your pools are set up with a replica count of 2x, expand at least a pair of systems with additional OSDs at a time.
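To illustrate why new OSDs should be spread across systems, the following Python sketch computes an idealized upper bound on how much data a replicated pool can hold when every replica must sit on a different system (a host-level failure domain is assumed). It ignores CRUSH's pseudo-random placement and Ceph's full ratios, so real usable capacity will be lower; the function name and the capacities used are illustrative only.

```python
def max_usable_capacity(host_capacities, replicas):
    """Idealized upper bound on the data a replicated pool can hold when
    every replica of an object must be stored on a different host.

    host_capacities: raw capacity of each host, in any consistent unit (e.g. TB)
    replicas: the pool's replica count (2 for a 2x pool)
    """
    caps = sorted(host_capacities, reverse=True)
    if replicas < 1 or len(caps) < replicas:
        return 0.0  # not enough hosts to keep every replica on a separate host
    # A host stores at most one copy of each object, so it can never hold more
    # than D when the pool holds D of data. The bound is the largest D with
    #   sum(min(c, D) for c in caps) >= replicas * D
    # Try "the k largest hosts have spare room" for k = 0, 1, ...
    for k in range(replicas):
        d = sum(caps[k:]) / (replicas - k)
        if caps[k] <= d:
            return d
    return 0.0  # not reached: k = replicas - 1 always satisfies the check


# Two systems, 2x replication, 100 TB raw each.
print(max_usable_capacity([100, 100], 2))  # 100.0
# Add 50 TB to one system only: the bound does not move.
print(max_usable_capacity([150, 100], 2))  # 100.0
# Add the same 50 TB split across both systems.
print(max_usable_capacity([125, 125], 2))  # 125.0
```

With two systems and 2x replication, the extra 50 TB on a single system cannot be used because its second copy has nowhere to go, while the same 50 TB split across the pair raises the bound to 125 TB.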

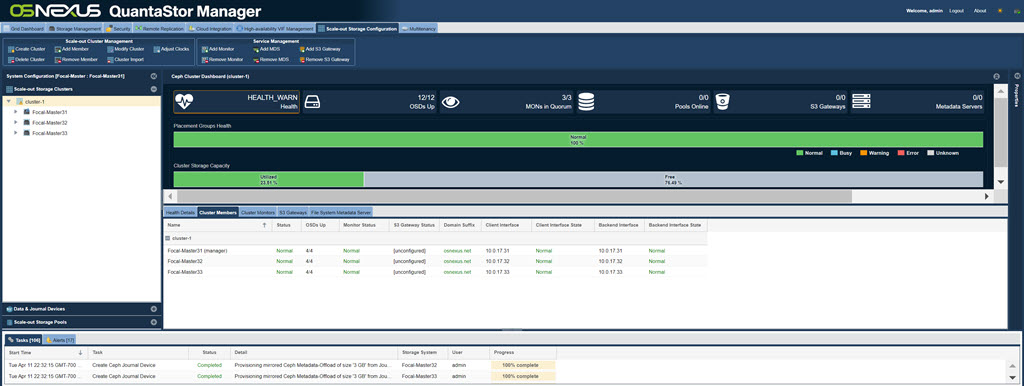

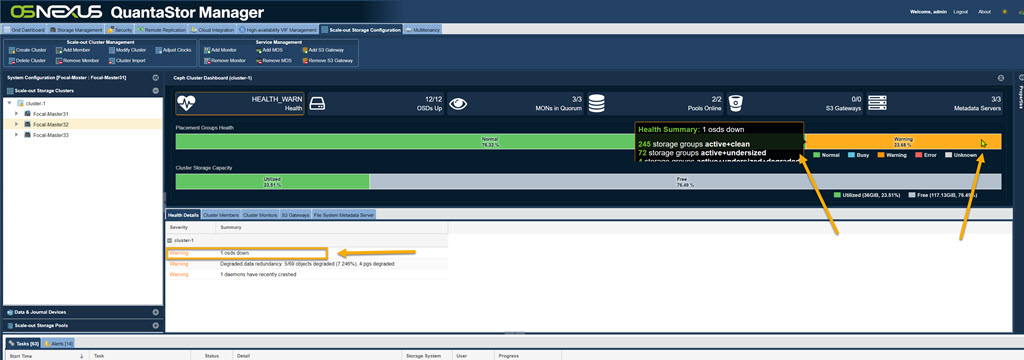

Understanding the Cluster Health Dashboard

The cluster health dashboard has two bars: the first shows how much space is used and available, and the second shows the overall health of the cluster. The health bar represents the combined health of all the "placement groups" for all pools in the cluster.

If a node goes offline or a system is impacted such that its OSDs become unavailable, the health bar will show orange and/or red segments. Hover the mouse over the affected section to get more detail about the impacted OSDs.

Additional detail is also available when the OSD section is selected. If you've set up the cluster with OSDs that use hardware RAID, your cluster will have an extra level of resiliency, because disk drive failures are handled entirely within the hardware RAID controller and do not impact the cluster state.
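As a rough illustration of how per-PG states can be combined into a single health bar, the Python sketch below groups PG counts into healthy, degraded, and unavailable fractions. The input is a hypothetical dictionary of Ceph PG state strings and counts, and the three buckets (and any mapping of "degraded" to orange and "unavailable" to red) are assumptions for illustration, not QuantaStor's actual dashboard logic.

```python
from collections import Counter


def summarize_pg_health(pg_state_counts):
    """Roll PG counts up into healthy / degraded / unavailable fractions.

    pg_state_counts maps a Ceph PG state string (for example "active+clean"
    or "active+undersized+degraded") to the number of PGs in that state.
    The three buckets are an illustrative assumption, not QuantaStor's rules.
    """
    buckets = Counter()
    for state, count in pg_state_counts.items():
        flags = set(state.split("+"))
        if "active" not in flags or "stale" in flags:
            buckets["unavailable"] += count  # PG cannot serve I/O or has not reported
        elif "clean" in flags:
            buckets["healthy"] += count      # all replicas present and up to date
        else:
            buckets["degraded"] += count     # serving I/O, but missing or recovering replicas
    total = sum(buckets.values())
    if total == 0:
        return {}
    return {name: buckets[name] / total
            for name in ("healthy", "degraded", "unavailable")}


example = {"active+clean": 900, "active+undersized+degraded": 90, "down": 10}
print(summarize_pg_health(example))
# {'healthy': 0.9, 'degraded': 0.09, 'unavailable': 0.01}
```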

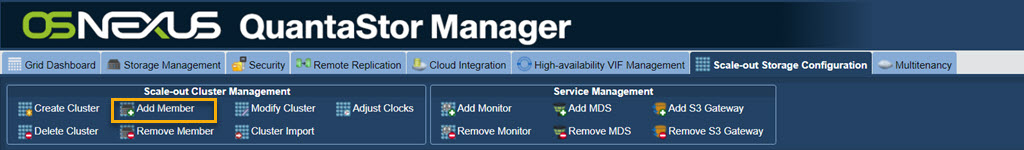

Adding a Node to a Ceph Cluster

Additional Systems can be added to the Ceph Cluster at any time. The same hardware requirements apply, and the System needs appropriate networking (connections to both the Client and Backend networks).

To add an additional System to the Ceph Cluster:

- First add the System to the QuantaStor Grid

- Select Scale-out Storage Configuration --> Scale-out Storage Clusters --> Scale-out Cluster Management --> Add Member

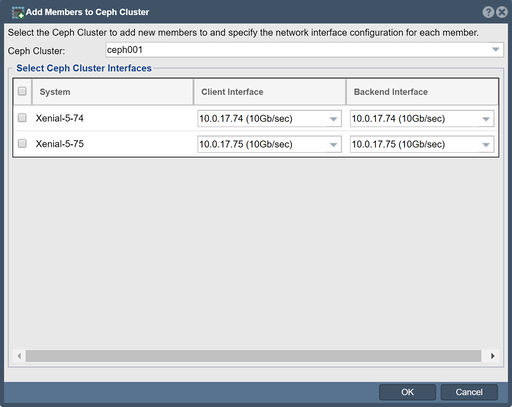

The Add Member to Ceph Cluster dialog box will pop up:

- Ceph Cluster: If there are multiple Ceph Clusters in the grid, select the appropriate cluster for the new member to join

- Storage System: Select the System to attach to the Ceph Cluster

- Client & Backend Interface: QuantaStor will attempt to select these interfaces automatically based on their IP addresses. Verify that the correct interfaces are assigned. If the interfaces do not appear, ensure that valid IP addresses have been assigned for the Client and Backend networks and that all physical cabling is connected correctly.

- Enable Object Store for this Ceph Cluster Member: Leave this unchecked if the cluster is used as a Scale-out SAN/Block Storage solution. Check it if the cluster is used for Object storage.
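When verifying the Client and Backend interface selection, it can help to check which interface actually has an address in each network. The Python sketch below does this subnet matching with the standard `ipaddress` module; it assumes you list each interface's address and prefix by hand, and the interface names and networks are hypothetical. It only illustrates the idea of IP-based selection and is not QuantaStor's own selection logic.

```python
import ipaddress


def match_interfaces(interfaces, client_net, backend_net):
    """Find the interface whose address lies in each network.

    interfaces: interface name -> "address/prefix", e.g. {"eth2": "10.0.10.21/24"}
    client_net, backend_net: network strings such as "10.0.10.0/24"
    Returns {"client": name, "backend": name}; None means no interface matched.
    """
    nets = {"client": ipaddress.ip_network(client_net),
            "backend": ipaddress.ip_network(backend_net)}
    result = {role: None for role in nets}
    for name, addr in sorted(interfaces.items()):
        ip = ipaddress.ip_interface(addr).ip
        for role, net in nets.items():
            if result[role] is None and ip in net:
                result[role] = name
    return result


ifaces = {"eth0": "192.168.1.21/24", "eth2": "10.0.10.21/24", "eth3": "10.0.20.21/24"}
print(match_interfaces(ifaces, "10.0.10.0/24", "10.0.20.0/24"))
# {'client': 'eth2', 'backend': 'eth3'}
```

A `None` result corresponds to the case above where an interface does not appear: no interface on the System has a valid address in that network.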

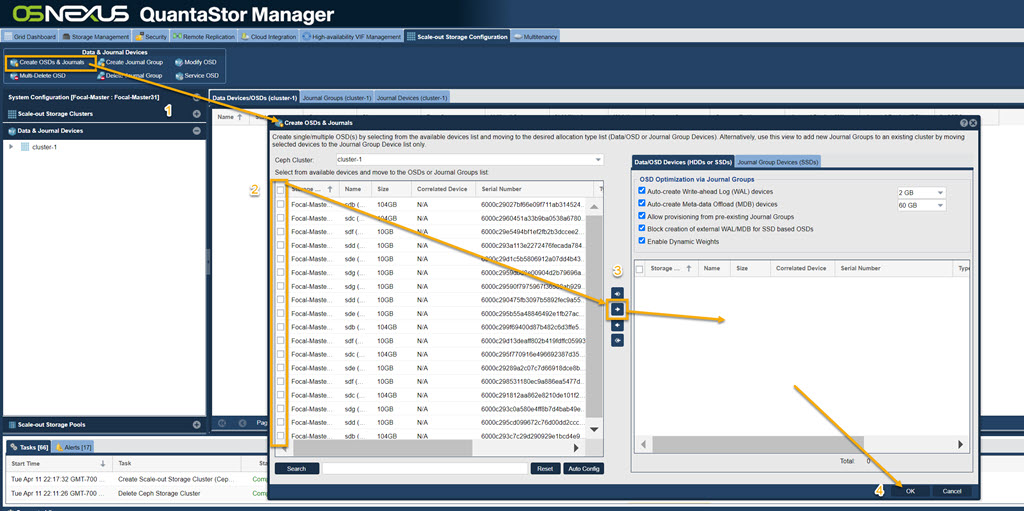

Adding OSDs to a Ceph Cluster

OSDs can be added to a Ceph Cluster at any time. Add more disk devices to existing nodes, or add a new member to the Ceph Cluster that has unused storage. The new devices can then be added as OSDs using the Create OSDs & Journals dialog, as done previously. Pressing the Auto Config button automates and optimizes this process.

Note that if you are not adding SSD devices to serve as journal devices for the new OSDs, you must check the Allow provisioning from pre-existing Journal Groups option, which uses existing unused journal partitions for the newly created OSDs.

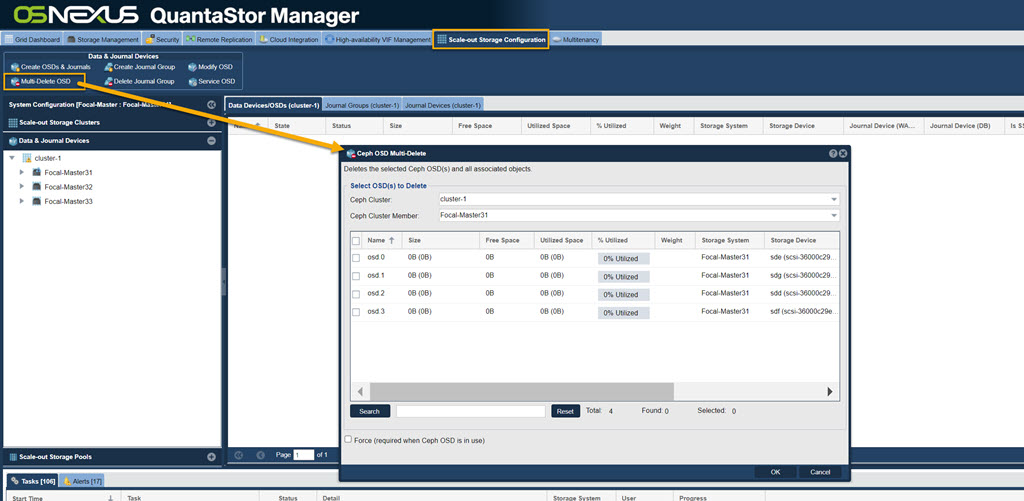

Removing OSDs from a Cluster

OSDs can be removed from the cluster at any time and the data stored on them will be rebuilt and re-balanced across the remaining OSDs. Key things to consider include:

- Make sure there is adequate space available on the remaining OSDs in the cluster. If you have 30x OSDs and you're removing 5x OSDs, the used capacity on each remaining OSD will increase by roughly 5/25, or 20%. If there isn't that much room available, expand the cluster first, then retire/remove the old OSDs.

- If there is a large amount of data in the OSDs it is best to re-weight the OSD gradually to 0 rather than abruptly removing it.

- Especially in multi-site configurations, make sure that removing the OSD doesn't put pools into a state where there are not enough copies of the data to continue read/write access to the storage. Ideally, OSDs should be removed only after re-weighting and the subsequent re-balancing have completed.

- Select the Data & Journal Devices menu and click Multi-Delete OSD in the ribbon bar. From here you can check the OSDs that you wish to delete. Once all the desired OSDs are selected, press OK to begin the deletion process.

- Deletion will take time, depending on how much data needs to be migrated to other OSDs in the cluster.
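The capacity check and gradual re-weighting described in the list above can be planned with a short Python sketch. It assumes equal-size OSDs with evenly spread data (as in the 30x/5x example), uses Ceph's default nearfull ratio of 0.85 as the safety threshold, and generates `ceph osd crush reweight` commands for console use. The OSD ID, starting weight, and step count are hypothetical, and as recommended at the top of this section, check with OSNEXUS support before changing CRUSH weights by hand.

```python
NEARFULL_RATIO = 0.85  # Ceph's default mon_osd_nearfull_ratio


def used_fraction_after_removal(used_fraction, total_osds, removed_osds):
    """Estimate per-OSD utilization once some OSDs are removed.

    Assumes equal-size OSDs with data spread evenly across them.
    """
    remaining = total_osds - removed_osds
    if remaining <= 0:
        raise ValueError("at least one OSD must remain")
    return used_fraction * total_osds / remaining


def reweight_commands(osd_id, current_weight, steps):
    """Ceph CLI commands that lower an OSD's CRUSH weight to 0 in equal steps.

    Run them one at a time and let re-balancing finish before the next step.
    """
    commands = []
    for i in range(1, steps + 1):
        weight = current_weight * (steps - i) / steps
        commands.append(f"ceph osd crush reweight osd.{osd_id} {weight:.4f}")
    return commands


after = used_fraction_after_removal(0.60, 30, 5)
print(f"{after:.0%} used after removal; safe: {after < NEARFULL_RATIO}")
# 72% used after removal; safe: True
for cmd in reweight_commands(12, 4.0, 4):
    print(cmd)
```

Smaller steps spread the data movement over more, shorter re-balancing rounds, at the cost of more manual intervention.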

Adding/Removing Monitors in a Cluster

For Ceph Clusters of up to 10x to 16x systems, the default 3x monitors is typically sufficient. The initial monitors are created automatically when the cluster is formed. Beyond the initial 3x monitors, it may be worth moving up to 5x monitors for additional fault tolerance, depending on the cluster's failure domains. If your cluster spans multiple racks, it is best to place a monitor in each rack rather than putting all the monitors in the same rack, which would make the storage inaccessible in the event of a power outage in that rack. Monitors are added and removed using the toolbar buttons of the same names.
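Ceph monitors only operate while a strict majority of them can form a quorum, so 3x monitors tolerate the loss of one monitor and 5x monitors tolerate the loss of two. The rack-placement advice can be checked with the Python sketch below, which assumes you list the rack of each monitor by hand; the function names and rack labels are hypothetical.

```python
from collections import Counter


def tolerated_monitor_failures(monitor_count):
    """Monitors that can fail while a strict majority (quorum) remains."""
    return (monitor_count - 1) // 2


def survives_any_single_rack_loss(monitor_racks):
    """True if a monitor quorum remains after losing any one rack.

    monitor_racks: one entry per monitor, naming the rack it runs in.
    """
    total = len(monitor_racks)
    if total == 0:
        return False
    majority = total // 2 + 1
    per_rack = Counter(monitor_racks)
    return all(total - count >= majority for count in per_rack.values())


print(tolerated_monitor_failures(3), tolerated_monitor_failures(5))  # 1 2
print(survives_any_single_rack_loss(["rack1", "rack1", "rack1"]))    # False
print(survives_any_single_rack_loss(["rack1", "rack2", "rack3"]))    # True
print(survives_any_single_rack_loss(["rack1"] * 3 + ["rack2"] * 2))  # False
```

The last case shows that spreading monitors across only two racks is not enough: whichever rack holds the majority of monitors takes the quorum with it when it loses power.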