Cloudera® Hadoop™ Integration Guide

This integration guide focuses on configuring your QuantaStor storage grid as a Cloudera® Hadoop™ data cluster, for better Hadoop performance with less hardware. Note that you can still use your QuantaStor system normally as a SAN/NAS system with the Hadoop services installed.

Setting up Cloudera® Hadoop™ on your QuantaStor® storage system is very similar to setting up CDH4 on a standard Ubuntu™ 12.04 (Precise) server, because QuantaStor v3 is built on top of Ubuntu Server.

However, there are some important differences, which this guide covers. If you follow instructions from the Cloudera web site for steps outside this How-To, be sure to refer to the sections for Ubuntu Server 12.04 (Precise).

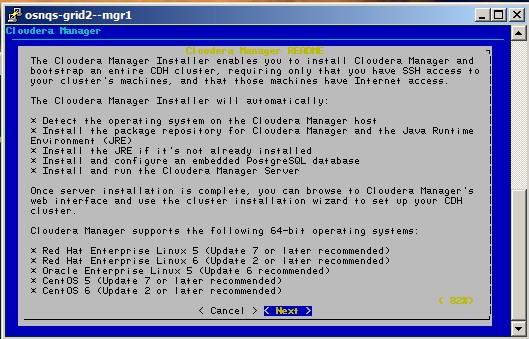

The Hadoop installation procedure begins by invoking an installation script called hadoop-install, which is included with the current version of QuantaStor®. From there, the CDH installation proceeds in stages, illustrated below in a series of screenshots. The illustrated example is a cluster of four QuantaStor® nodes, one of which is designated the "Hadoop Manager" node.

Note also that the Hadoop management services impose additional resource requirements on the Hadoop Manager node. For example, when we tested the installation on QuantaStor® nodes running as virtual machines, the Manager node required at least 10 GB of memory.
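If you want to confirm the memory figure above before installing, checking MemTotal in /proc/meminfo is enough. The helper below is a minimal sketch: the function name check_manager_memory and the 10 GB default threshold (taken from our VM testing) are illustrative assumptions, not part of QuantaStor or Cloudera Manager.

```shell
#!/bin/bash
# Hypothetical helper (not shipped with QuantaStor): report whether this node
# has at least MIN_GB of memory, based on the MemTotal line of /proc/meminfo.
# Usage: check_manager_memory [MIN_GB] [MEMINFO_FILE]
check_manager_memory() {
    local min_gb="${1:-10}"
    local meminfo="${2:-/proc/meminfo}"
    local total_kb min_kb
    # MemTotal is reported in kB, e.g. "MemTotal:       16318412 kB"
    total_kb=$(awk '/^MemTotal:/ {print $2}' "$meminfo")
    if [ -z "$total_kb" ]; then
        echo "could not read MemTotal from $meminfo" >&2
        return 2
    fi
    # Compare in kB; 1 GB is taken as 1024 * 1024 kB here.
    min_kb=$((min_gb * 1024 * 1024))
    if [ "$total_kb" -ge "$min_kb" ]; then
        echo "OK: $((total_kb / 1024 / 1024)) GB installed (>= ${min_gb} GB)"
        return 0
    fi
    echo "WARNING: $((total_kb / 1024 / 1024)) GB installed (< ${min_gb} GB)"
    return 1
}
```

Run it on the manager node as '$ check_manager_memory'. MemTotal is slightly lower than the configured RAM because the kernel reserves some memory, so for a VM configured with exactly 10 GB, pass a threshold of 9.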

To get started, log in to your QuantaStor v3 storage system(s) using SSH or via the console. All of the commands shown in this How-To must be run as root, so run 'sudo -i' to get super-user privileges before you begin.

Last, if you don't yet have a QuantaStor v3 storage system setup, you can get the CD-ROM ISO and a license key here.

Phase 1. Manager Installation Script / Text Mode Configuration Steps

From the superuser command line on the manager node (hostname "osn-grid2-mgr1" in this example), invoke the "hadoop-install" script. The script is located in the /bin directory, which is on the PATH, so it can be run from any directory.

$ hadoop-install

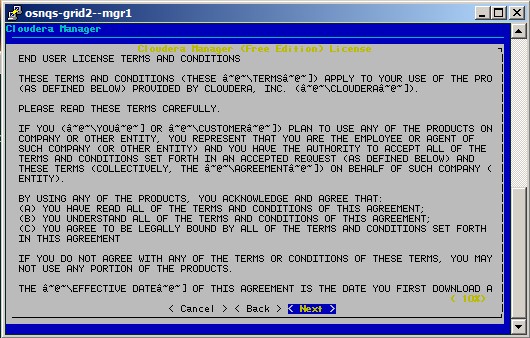

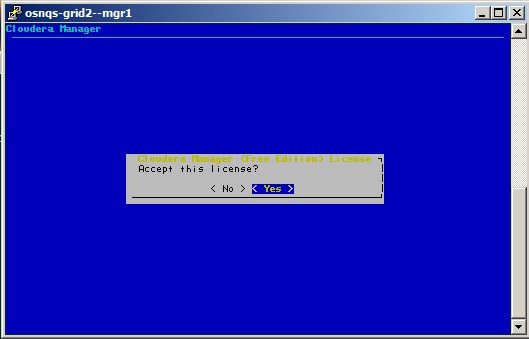

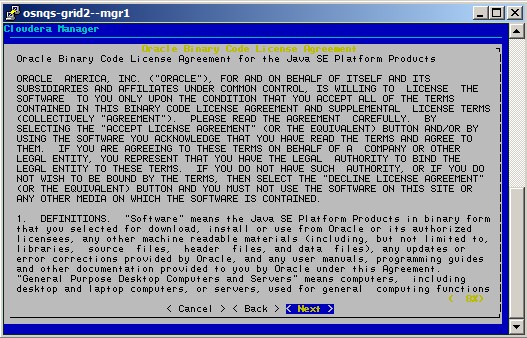

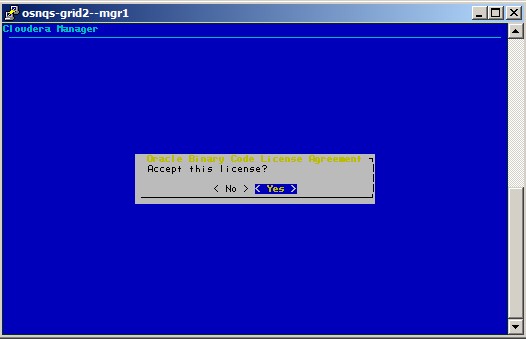

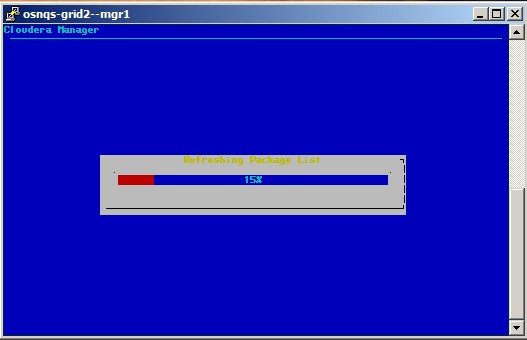

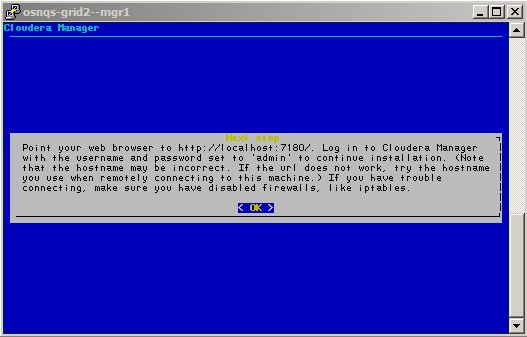

The script takes you through a series of screens; simply accept the EULA dialogs and allow it to proceed. This stage can take 10-15 minutes and installs the web server for the Hadoop™ management interface on the Manager node. When it finishes, the script prints instructions for browsing to that interface to begin the next stage of the installation.

See the following screen shot examples.

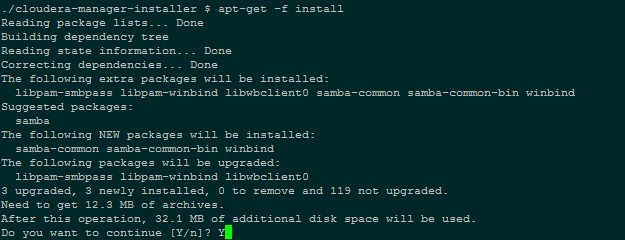

Step 1.1 Package Dependencies / Troubleshooting

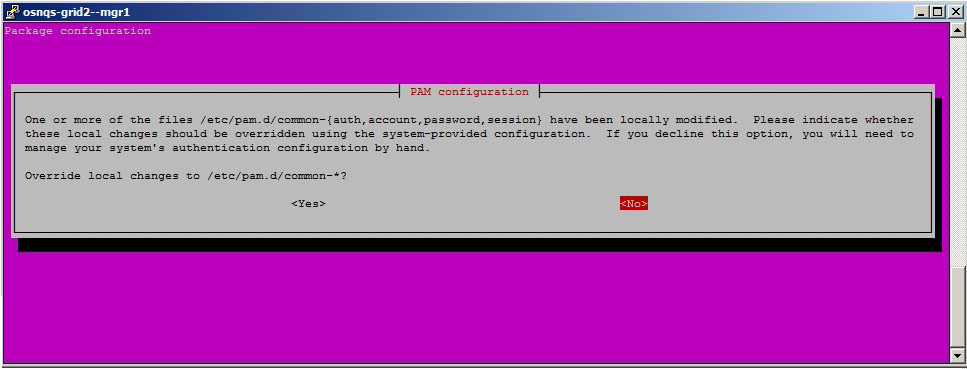

Note that this step installs a Java package using the apt-get utility, so the target node must meet that package's dependency requirements. If this stage fails and the log shows package dependency errors:

- Return to the command line.
- Run 'apt-get -f install'.
- Respond with 'Y' (must be uppercase) and allow it to complete.
- Accept the PAM configuration modification screen, if offered.
- Retry the hadoop-install script.
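The recovery steps above can also be run as a short sequence. This is a sketch, not a QuantaStor tool: run and repair_dependencies are hypothetical helper names, DRY_RUN=1 prints the commands instead of executing them, and apt-get's -y option answers the confirmation prompt in place of typing 'Y'. The PAM configuration screen may still appear and need to be accepted interactively.

```shell
#!/bin/bash
# Hypothetical helpers for the Step 1.1 recovery sequence.
# With DRY_RUN=1, commands are printed instead of executed.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

repair_dependencies() {
    # Let apt-get fix the broken/missing dependencies; -y answers "yes"
    # to the confirmation prompt.
    run apt-get -f install -y || return 1
    # Retry the QuantaStor Hadoop installer.
    run hadoop-install
}
```

Preview with '$ DRY_RUN=1 repair_dependencies', then run '$ repair_dependencies' as root.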

See the following two screens as examples:

Phase 2. Initial Web-Based Installation

Follow the instructions shown on the last screen of the previous stage, for example:

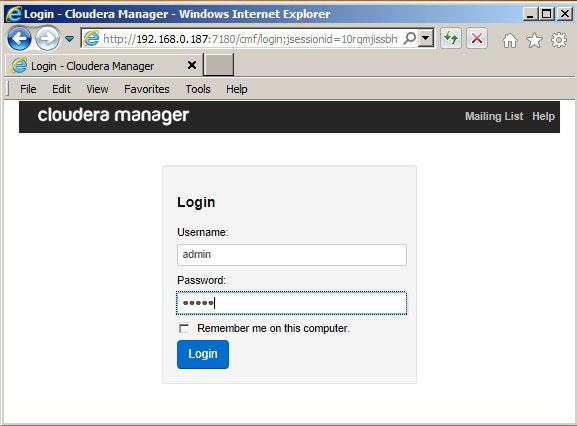

Point your web browser to http://<hostname or IP>:7180/. Log in to the Cloudera Manager with the username and password both set to 'admin' to continue the installation.
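If the page does not load, it can help to confirm from a shell that the Cloudera Manager web server is answering on port 7180. The sketch below assumes curl is installed; cm_url and cm_is_up are hypothetical helper names, and any HTTP status (including a redirect to the login page) is treated as "up".

```shell
#!/bin/bash
# Hypothetical helpers: build the Cloudera Manager URL for a host and check
# whether its web interface answers on port 7180.
cm_url() {
    echo "http://$1:7180/"
}

cm_is_up() {
    local code
    # -s hides progress, -o discards the body, -w prints only the HTTP status.
    # curl prints 000 when no connection could be made.
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 10 "$(cm_url "$1")" || true)
    [ -n "$code" ] && [ "$code" != "000" ]
}
```

For example: '$ cm_is_up osn-grid2-mgr1 && echo up'.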

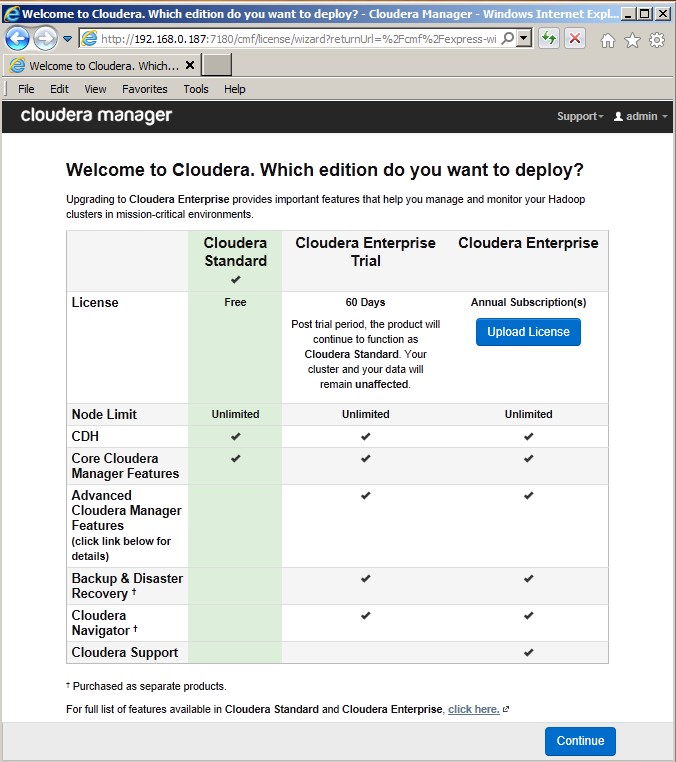

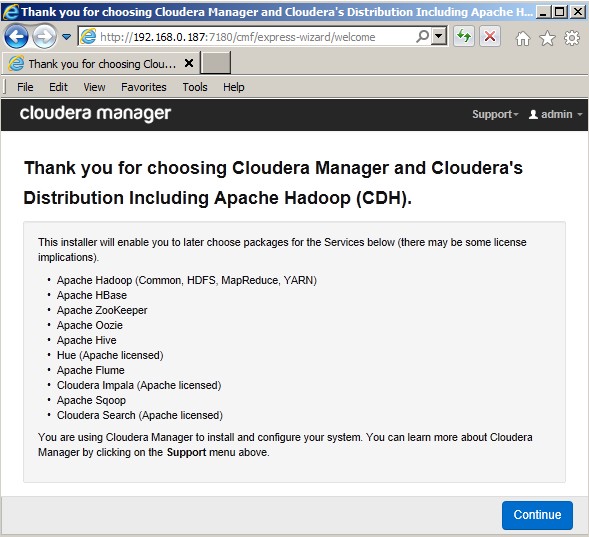

Log in to the interface using the username 'admin' and password 'admin'. The first screen asks which edition you wish to install.

This example uses the minimal "Standard" edition throughout. On this screen, as on all subsequent screens, hit 'Continue' to move on to the next step.

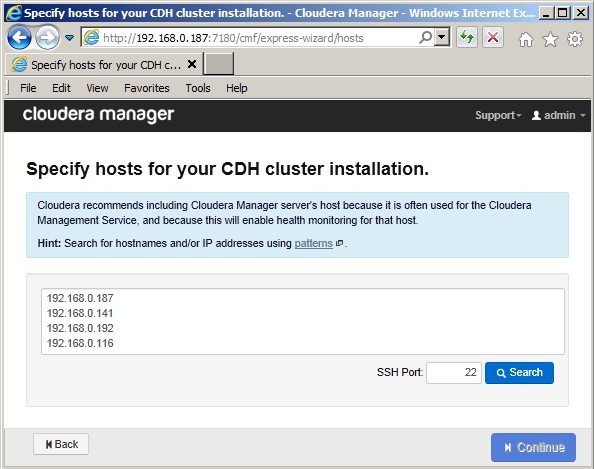

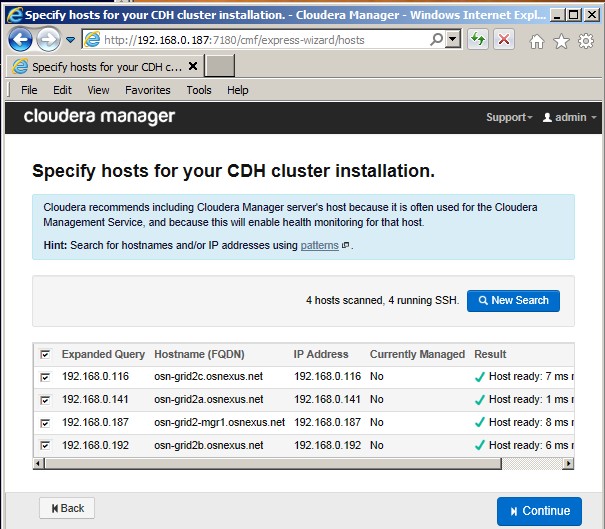

The next screen allows you to enter the host addresses of the nodes onto which Hadoop is to be installed. In this example, four IP addresses are shown, corresponding to the nodes 'osn-grid2-mgr1', 'osn-grid2a', 'osn-grid2b', and 'osn-grid2c'.

Clicking the 'Search' button tests those nodes/addresses and returns a status for each, which should indicate that the nodes are ready and available for installation. When the search completes, hit 'Continue'.
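Before clicking 'Search', you can optionally check from the manager node that every host name resolves and responds. This pre-check is a sketch under assumptions: check_hosts is a hypothetical helper that relies on getent and ping, and some networks block ICMP, so a missing ping reply does not necessarily mean the Cloudera installer will fail.

```shell
#!/bin/bash
# Hypothetical pre-check (not part of Cloudera Manager): confirm each host
# name resolves and answers one ping. Returns non-zero if any host fails.
check_hosts() {
    local host failed=0
    for host in "$@"; do
        if ! getent hosts "$host" >/dev/null; then
            echo "$host: does not resolve"
            failed=1
        elif ! ping -c 1 -W 2 "$host" >/dev/null 2>&1; then
            echo "$host: no ping reply"
            failed=1
        else
            echo "$host: ok"
        fi
    done
    return "$failed"
}
```

For this example cluster: '$ check_hosts osn-grid2-mgr1 osn-grid2a osn-grid2b osn-grid2c'.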

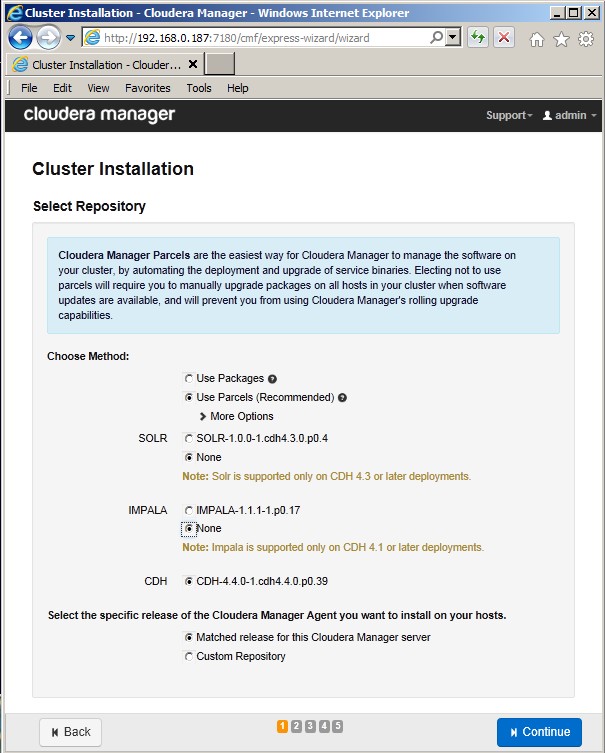

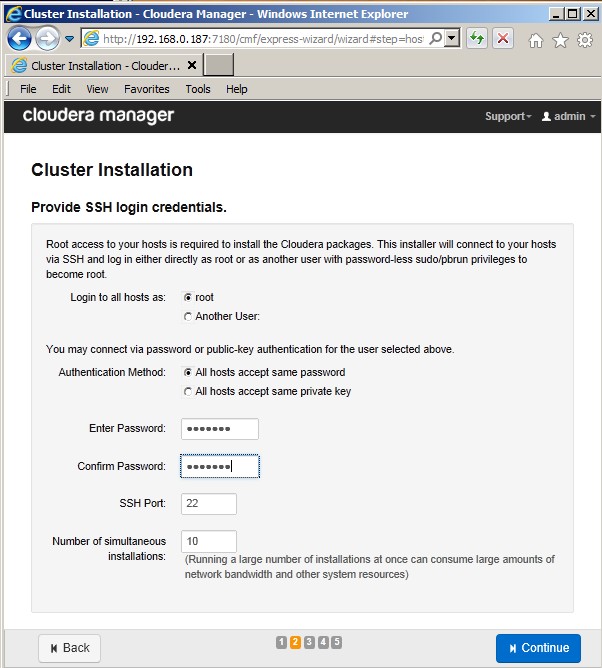

The next screen, "Cluster Installation Screen 1", offers some installation options; again, this example installs the bare minimum by selecting only the base CDH package. The screen after that, "Cluster Installation Screen 2", requests login credentials. This example uses the 'root' user, and all nodes have the same root password.

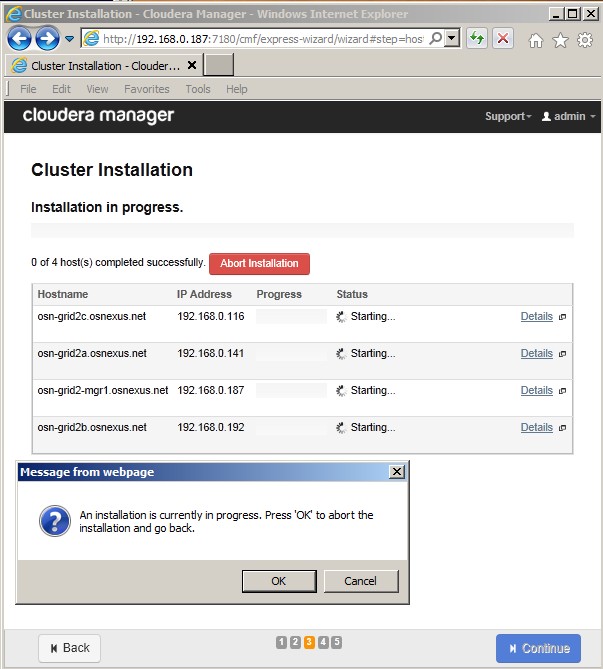

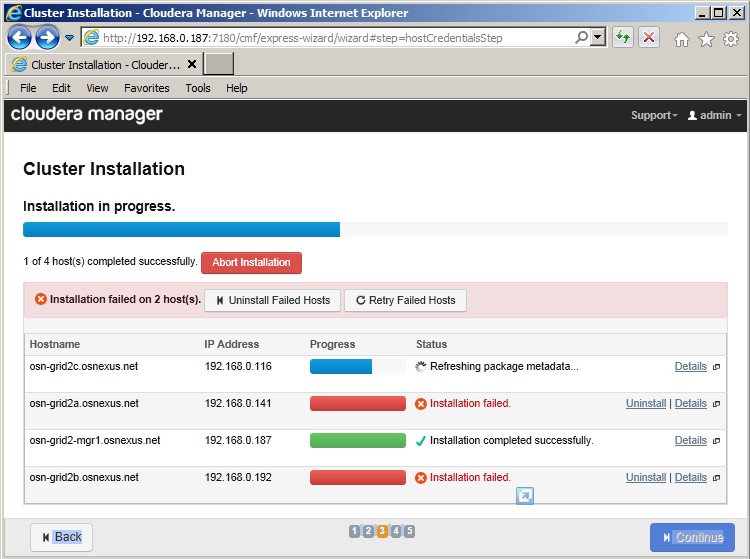

The next screen, "Cluster Installation Screen 3", shows the cluster installation progress.

NOTE: If the "Abort" popup dialog appears, do NOT hit "OK", as this aborts the installation. Simply dismiss the popup.

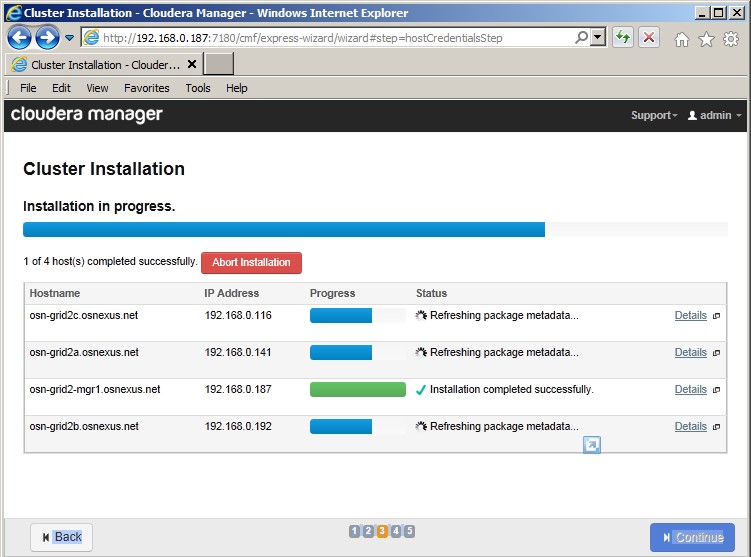

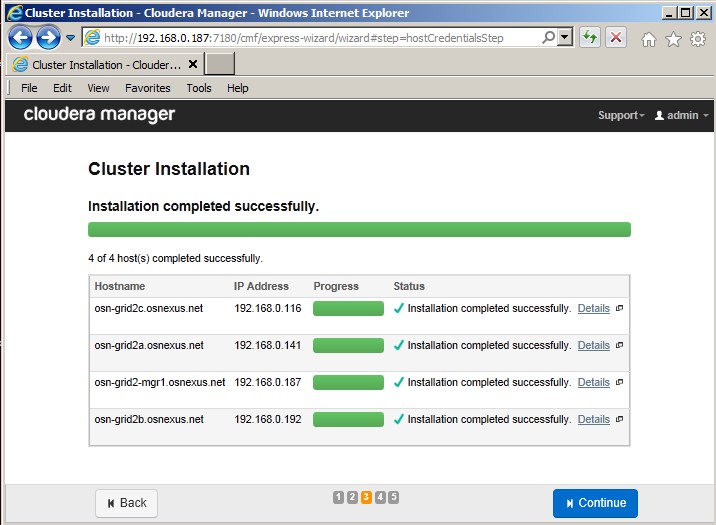

If all goes well, the installation should progress as shown in the following example figures, ending with "Installation completed successfully".

Hit 'Continue' to move to the next phase.

Step 2.1. Potential error, package dependency

As in Step 1.1 above, this step uses the apt-get utility to install a Java package, here on all nodes in the cluster other than the manager node. The same package dependency errors can cause the cluster installation to fail on those non-manager nodes, as shown in the example figure below.

To correct this, log in to each node that failed and perform the same steps as in Step 1.1. When this is complete, hit the 'Retry Failed Nodes' button on the screen.
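With several failed nodes, the apt-get part of Step 1.1 can be run from the manager node over SSH before hitting 'Retry Failed Nodes'. This is a sketch, assuming root SSH access to each node (the same credentials given to the installer); repair_failed_nodes and run are hypothetical helper names, and DRY_RUN=1 only prints the commands.

```shell
#!/bin/bash
# Hypothetical helpers: repair package dependencies on each failed node.
# With DRY_RUN=1, commands are printed instead of executed.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

repair_failed_nodes() {
    local host
    for host in "$@"; do
        # -t allocates a terminal so a PAM/debconf prompt can be answered.
        run ssh -t "root@$host" apt-get -f install -y \
            || echo "$host: repair failed" >&2
    done
}
```

For example: '$ repair_failed_nodes osn-grid2a osn-grid2c', then hit 'Retry Failed Nodes'.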

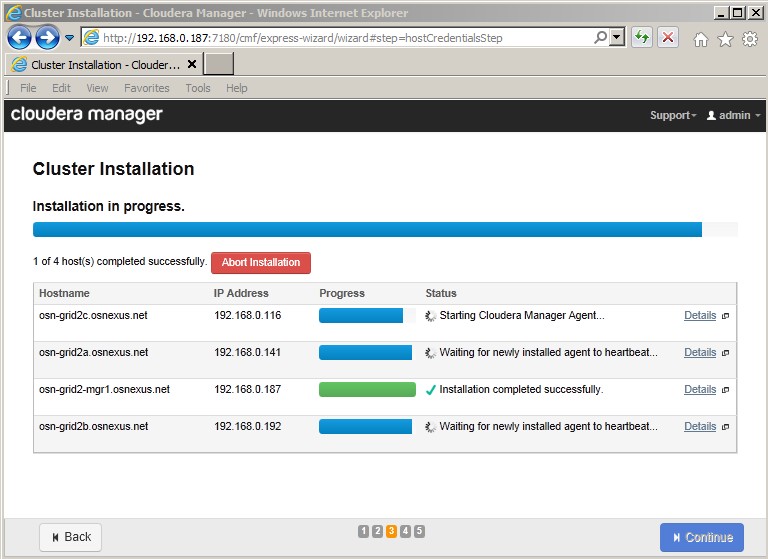

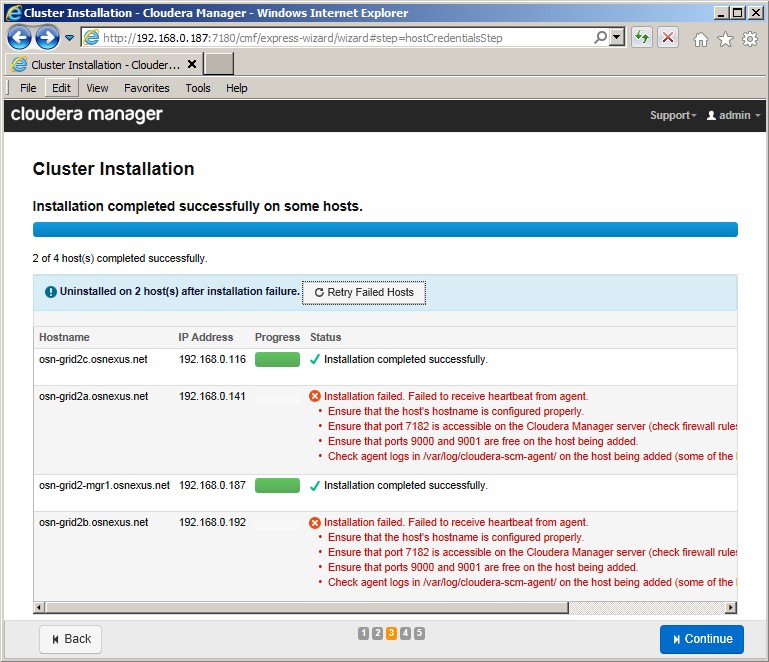

Step 2.2 Potential error, heartbeat detection failure

If the installation gets past the package installation, the last phase of this step runs heartbeat tests, as shown in the example figure below.

Sometimes all of these succeed on the first try; other times some or all of the nodes fail.

In our testing, hitting "Retry Failed Nodes" (sometimes two or three times) eventually resulted in successful heartbeat detection.
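If retries keep failing, it may be worth checking whether the Cloudera Manager agent is running on the affected nodes. This sketch assumes the agent is installed as the standard cloudera-scm-agent service and that root SSH access works; check_agents and run are hypothetical helper names, and DRY_RUN=1 prints the commands instead of running them.

```shell
#!/bin/bash
# Hypothetical helpers: query the Cloudera Manager agent service on each node.
# With DRY_RUN=1, commands are printed instead of executed.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

check_agents() {
    local host
    for host in "$@"; do
        echo "== $host"
        run ssh "root@$host" service cloudera-scm-agent status \
            || echo "$host: agent not running or unreachable" >&2
    done
}
```

For example: '$ check_agents osn-grid2a osn-grid2b osn-grid2c'.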

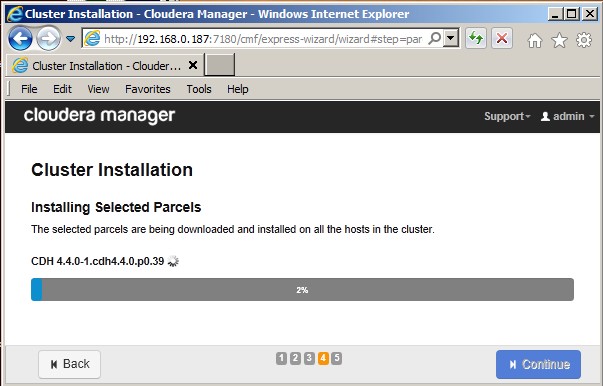

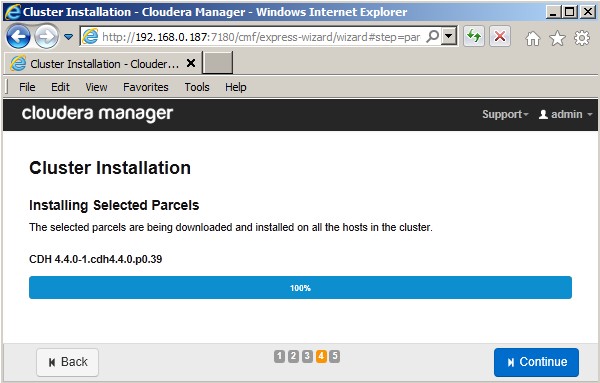

The next screen, "Cluster Installation Screen 4", shows the progress of the "Installing Selected Parcels" stage. This phase is lengthy, typically taking from half an hour to an hour.

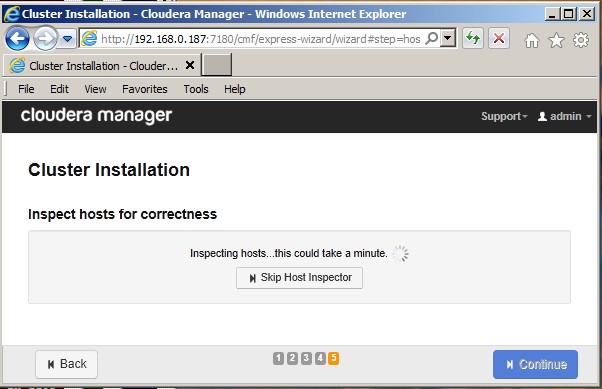

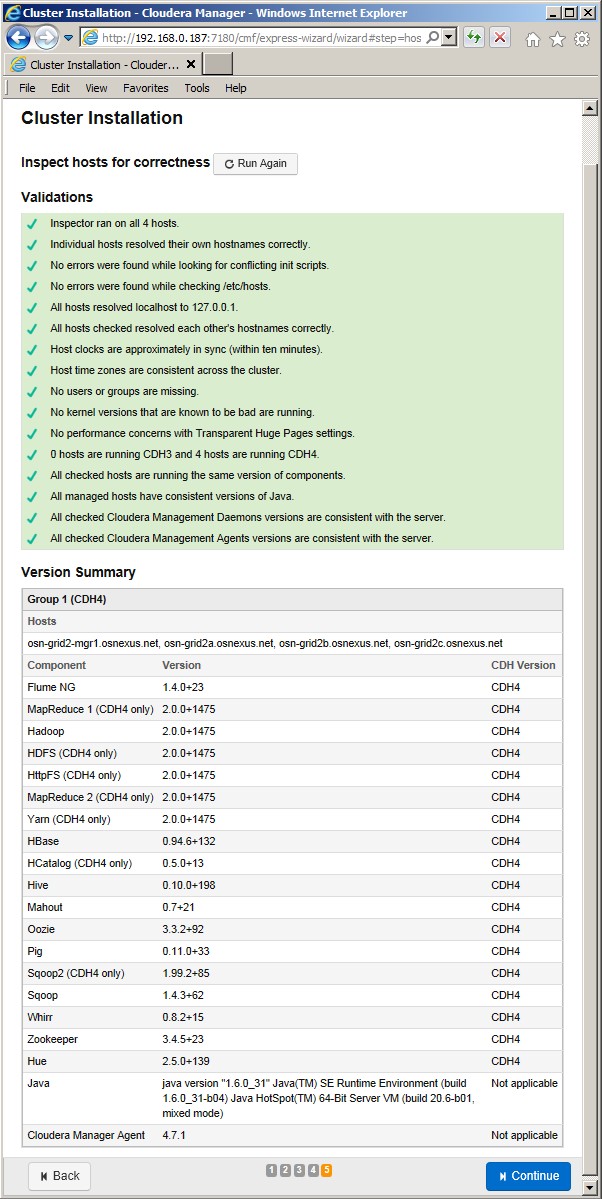

Hitting 'Continue' at the completion of that phase takes you to "Cluster Installation Screen 5", the "Inspect hosts for correctness" phase. This should result in a comprehensive report, as shown in the following example screens.

Phase 3. Web-Based Installation, CDH4 Services

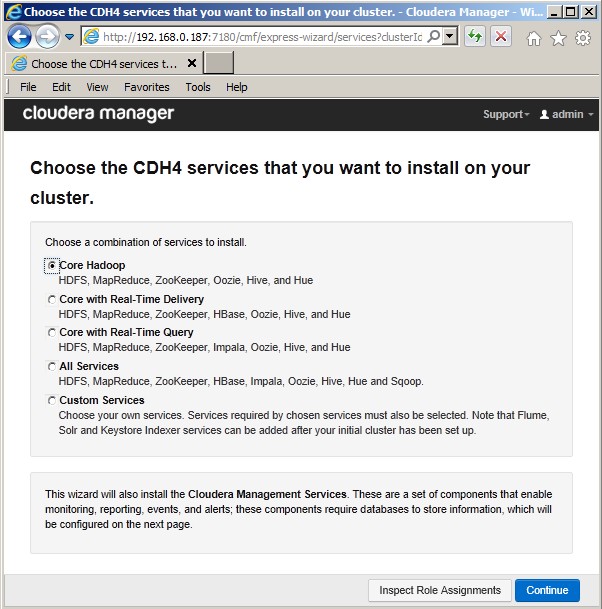

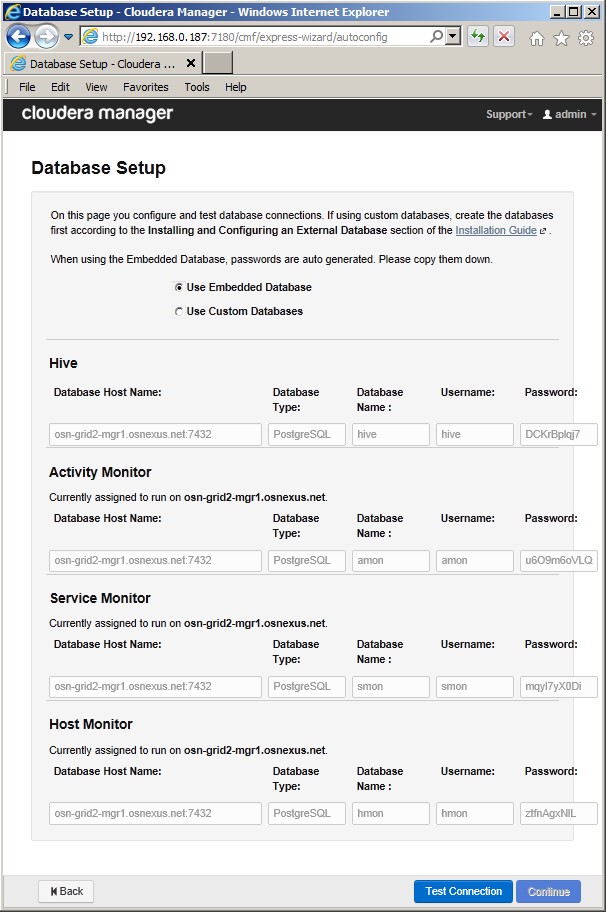

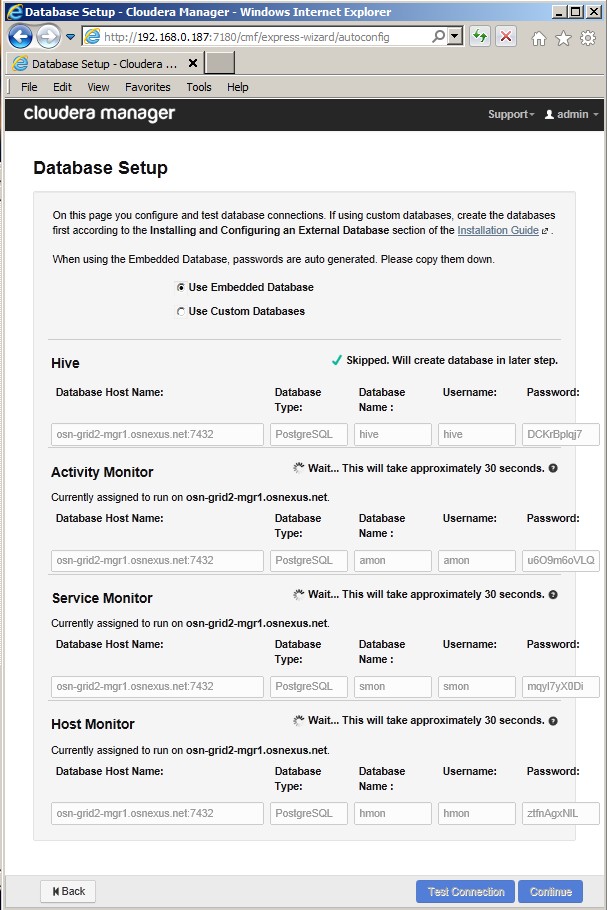

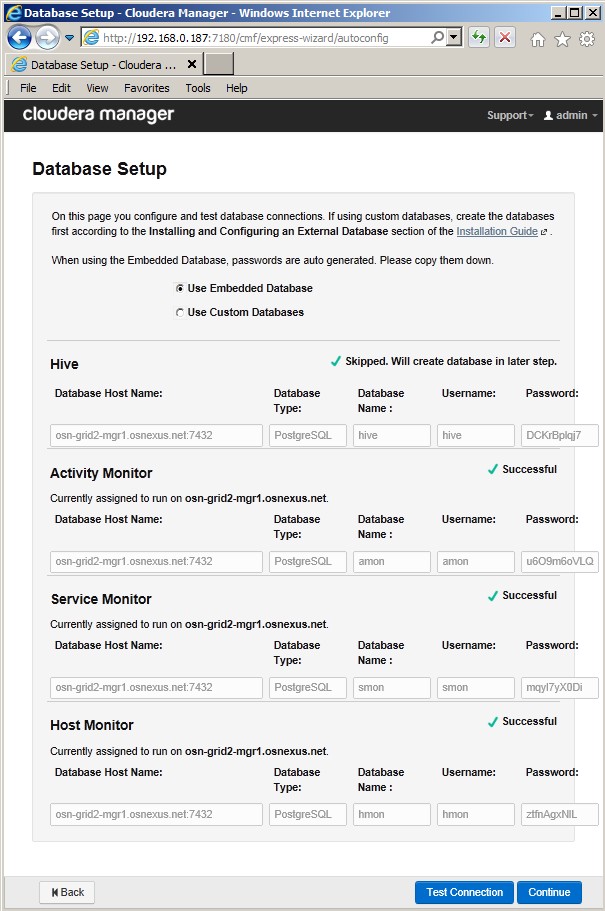

The next screen lets you select the services you wish to install. As before, this example installs only the basic core Hadoop service. The screen immediately after it is Database Setup; here we simply accept the defaults, hit 'Test Connection', and hit 'Continue' once the test returns 'Success', as shown.

Phase 4. Setting Configuration Parameters

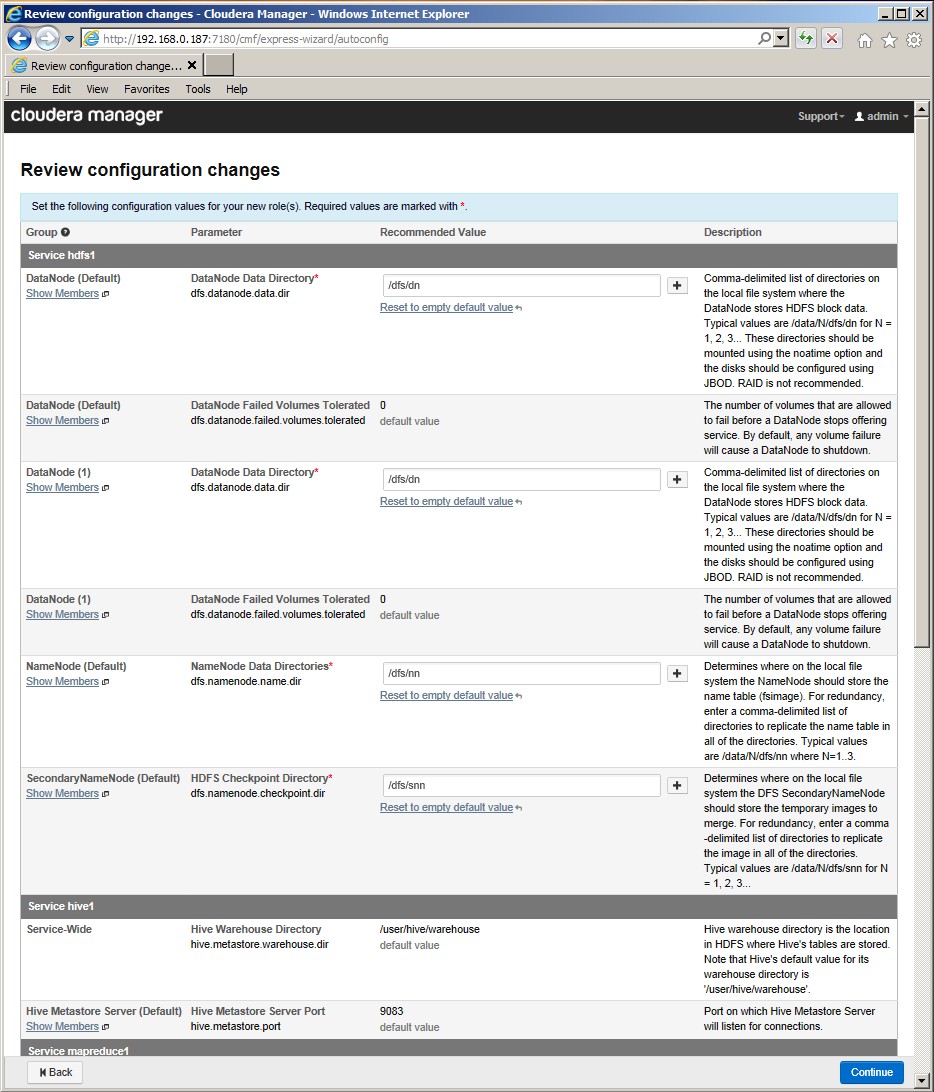

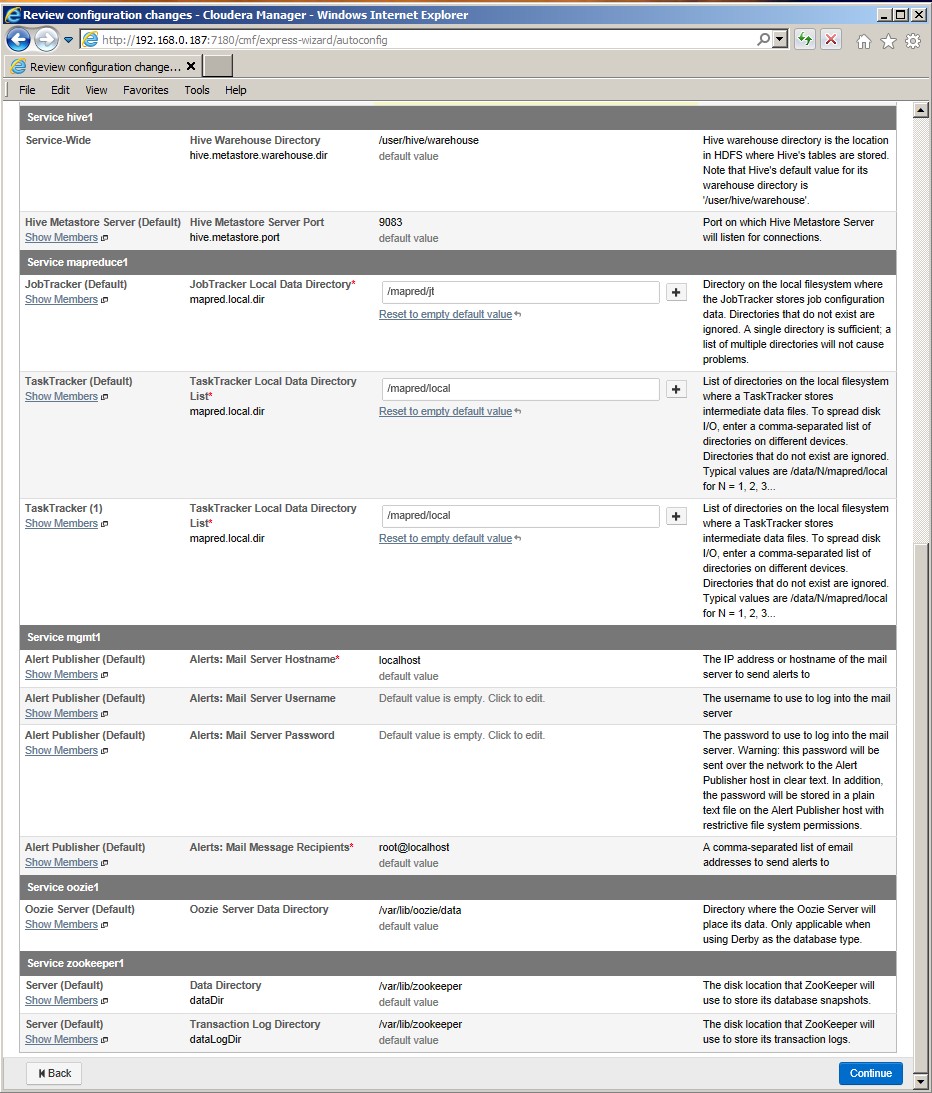

The following screen allows you to change certain configuration parameters. This is a large scrollable screen, shown below in two parts.

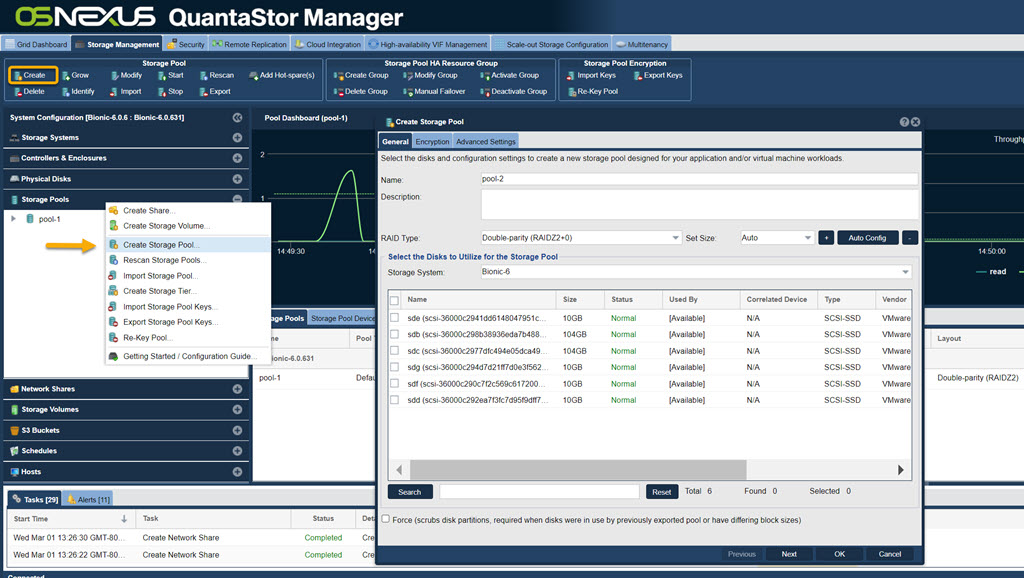

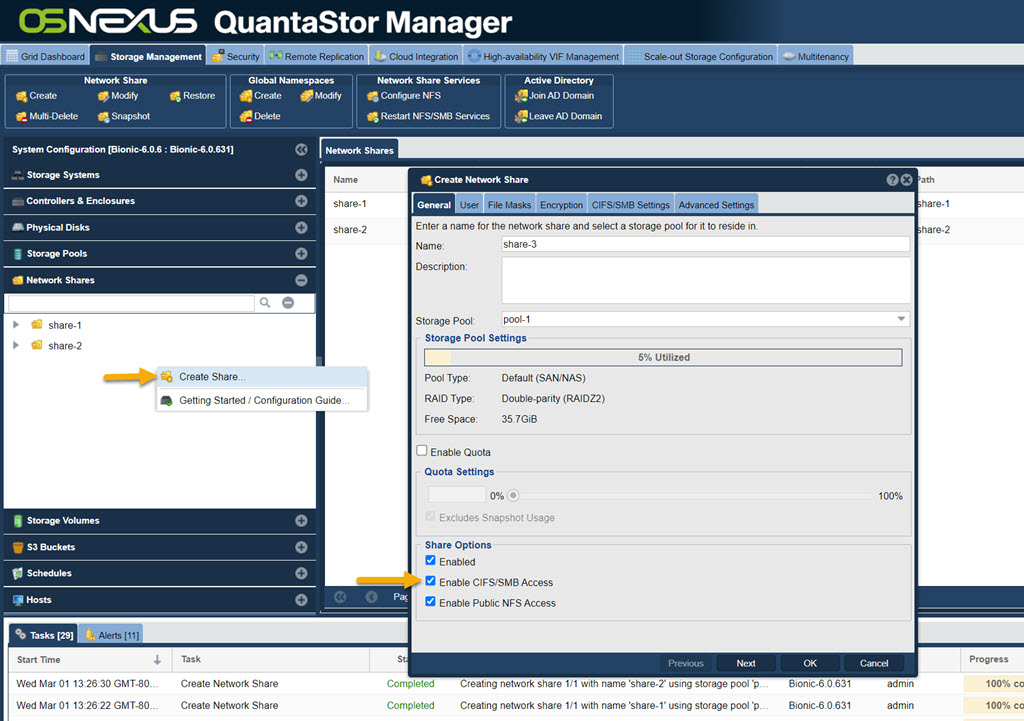

These configuration options deserve special attention on QuantaStor installations: the intent here is to set up Hadoop specifically to use the storage in the QuantaStor Storage Pools. By default, the Hadoop installation creates top-level directories on each node's root filesystem, such as '/dfs' and '/mapred'. Therefore, part of the pre-installation setup of the QuantaStor systems is to create, on each node in the cluster, a Storage Pool and a Network Share on that Pool to serve as the root of the Hadoop data store within the Pool.

The following screens show examples of Storage Pool creation and Network Share creation on a QuantaStor node:

Once the Storage Pools and the Network Shares on those Pools have been created, the Hadoop data directories should reside in the shares, which appear under /export on each node, as in the following examples:

/export/osn-grid2-mgr1-pool1-share1

/export/osn-grid2a-pool1-share1

/export/osn-grid2b-pool1-share1

/export/osn-grid2c-pool1-share1

The Hadoop configuration on the QuantaStor nodes must be changed to use these locations.

There are two ways to accomplish this:

1. Change the Hadoop configuration directly. For example, in the "Review configuration changes" screen shown above, the "DataNode (Default)" parameter could be changed from its default value of '/dfs/dn' to '/export/osn-grid2a-pool1-share1/dfs/dn', and the other 'mapred'-related paths would be changed similarly.

2. Alternatively, use symbolic links from the default locations into the QuantaStor Pools, so that the Hadoop configuration defaults can remain unchanged. This is the approach shown in this example.

To implement option #2, run the following on each cluster node:

• $ mkdir /export/<sharename>/dfs

• $ mkdir /export/<sharename>/mapred

• $ ln -s /export/<sharename>/dfs /dfs

• $ ln -s /export/<sharename>/mapred /mapred

For example, on osn-grid2a:

$ mkdir /export/osn-grid2a-pool1-share1/dfs

$ ln -s /export/osn-grid2a-pool1-share1/dfs /dfs

$ mkdir /export/osn-grid2a-pool1-share1/mapred

$ ln -s /export/osn-grid2a-pool1-share1/mapred /mapred
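The per-node steps above can be wrapped in a small function that also guards against an existing /dfs or /mapred directory; in that case 'ln -s' would create the link inside that directory rather than replacing it. This is a sketch, not part of QuantaStor: link_hadoop_dirs is a hypothetical name, and its optional second argument exists only so it can be tried against a scratch directory before touching '/'.

```shell
#!/bin/bash
# Hypothetical helper for option #2: create dfs/ and mapred/ inside this
# node's QuantaStor share and point /dfs and /mapred at them.
# Usage: link_hadoop_dirs SHARE_DIR [ROOT]   (ROOT defaults to the real "/")
link_hadoop_dirs() {
    local share="$1"
    local root="${2:-}"
    local dir
    if [ ! -d "$share" ]; then
        echo "share directory $share does not exist" >&2
        return 1
    fi
    for dir in dfs mapred; do
        # Create the data directory inside the share (no error if present).
        mkdir -p "$share/$dir" || return 1
        if [ -L "$root/$dir" ]; then
            # Already a symlink: leave it alone and report its target.
            echo "$root/$dir already links to $(readlink "$root/$dir")"
        elif [ -e "$root/$dir" ]; then
            # A real directory or file is in the way; do not modify it.
            echo "$root/$dir exists and is not a symlink; not changing it" >&2
            return 1
        else
            ln -s "$share/$dir" "$root/$dir" || return 1
        fi
    done
}
```

On osn-grid2a, for example: '$ link_hadoop_dirs /export/osn-grid2a-pool1-share1'. If your shares follow the <hostname>-pool1-share1 naming used in this example (an assumption), the same command works on every node as '$ link_hadoop_dirs /export/$(hostname -s)-pool1-share1'. Running it twice is harmless, since existing links are left in place.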

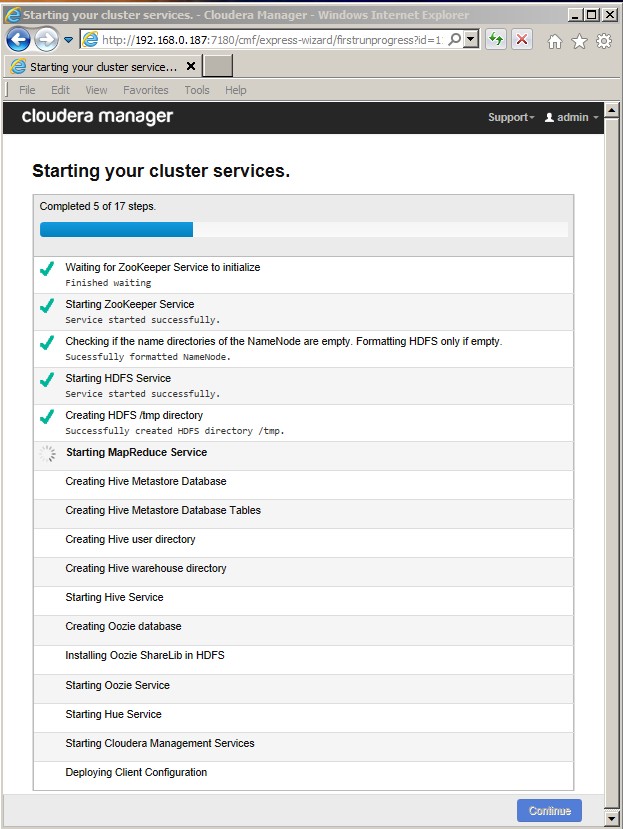

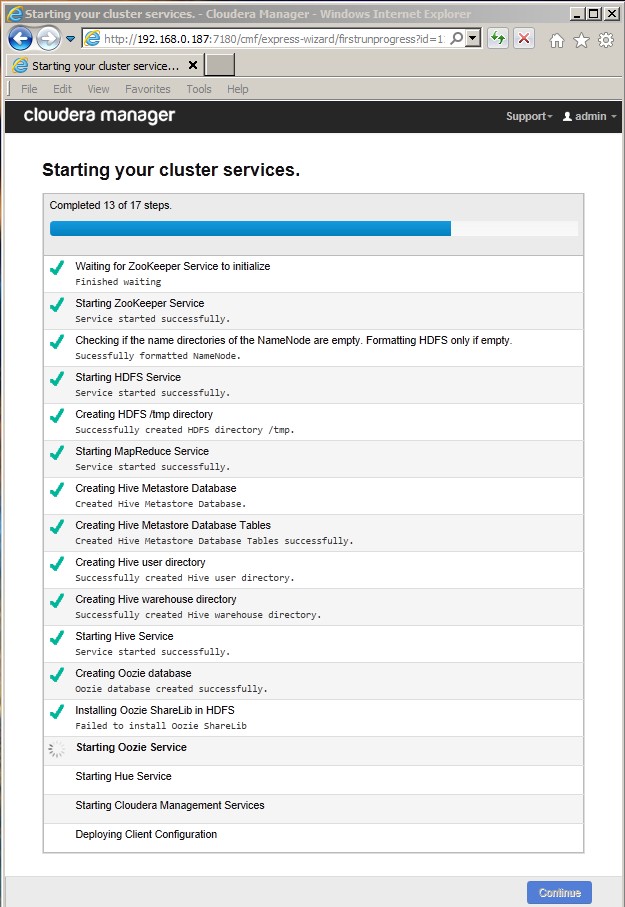

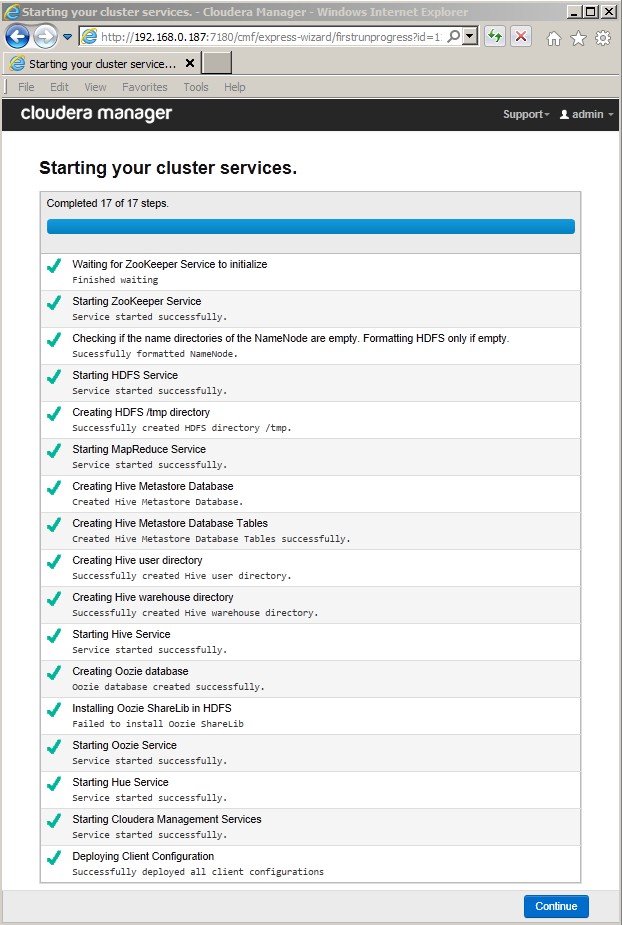

After you accept the "Review configuration changes" screen and hit 'Continue', the installation proceeds to the "Starting your cluster services" screen (you may be prompted to re-enter the 'admin/admin' login credentials), where it progresses through numerous steps, as shown in the example figures below. After all services have started, hitting 'Continue' should bring up the "Congratulations Success" screen.

Phase 5. Starting Cluster Services

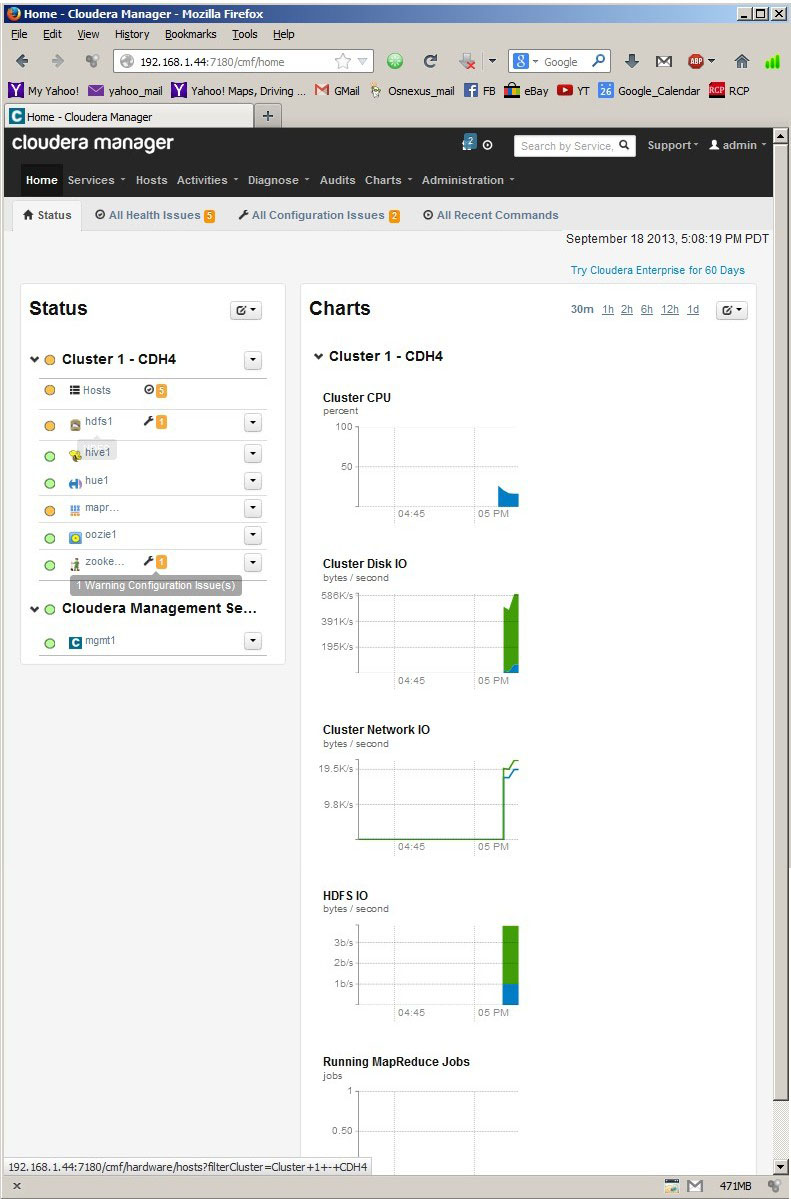

Hitting 'Continue' from here should then take you to the Manager Home screen, as shown in the example below.

Summary

Those are the basics of getting Hadoop running with QuantaStor, and you are now ready to deploy. If you haven't done so already, see the Cloudera Installation Guide.

We are looking forward to automating some of the above configuration steps and adding deeper integration features to monitor CDH in future releases. If you have specific needs, ideas, or would like to share your feedback, please write to us at engineering@osnexus.com.

Thanks and Happy Hadooping!

Cloudera is a registered trademark of Cloudera Corporation, Hadoop is a registered trademark of the Apache Foundation, Ubuntu is a registered trademark of Canonical, and QuantaStor is a registered trademark of OS NEXUS Corporation.