Template:CephNetworkConfiguration

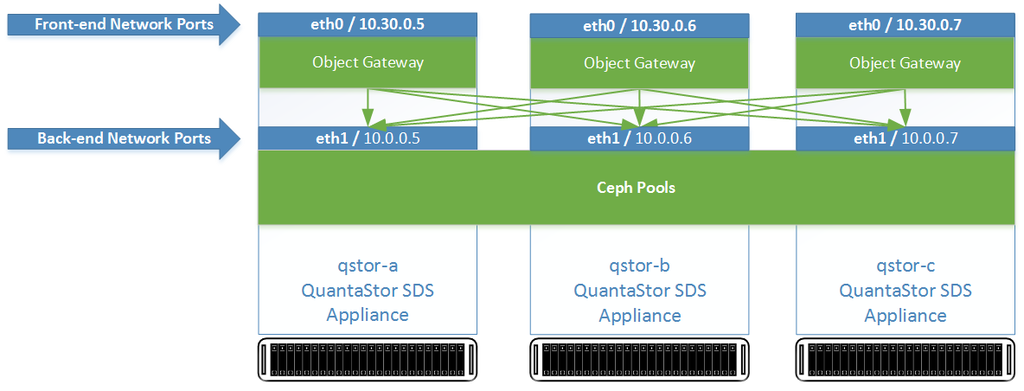

Scale-out file and block storage deployments use separate front-end and back-end networks so that client traffic on the front-end ports (S3/SWIFT, iSCSI/RBD) is cleanly separated from inter-node communication on the back-end. This not only boosts performance, it also improves the fault tolerance, reliability, and maintainability of the Ceph cluster. On every node, one or more ports should be designated as front-end ports and assigned appropriate IP addresses and subnets to enable client access. Multiple physical ports, virtual IPs, and VLANs can be used on the front-end network to give a variety of clients access to the storage. The back-end network ports should preferably all be physical ports, but this is not required. A basic configuration looks like this:

Update the configuration of each network port using the Modify Network Port dialog to put it on either the front-end network or the back-end network. Port names should be configured consistently across all appliance nodes, so that a given ethN port is assigned to the front-end network or to the back-end network on every node, never a mix of both.
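The consistency rule for port names can be checked before any changes are applied. The following is a minimal sketch in Python, assuming the planned assignments have been written down by hand as a dictionary; the node names, the role labels, and the `find_inconsistent_ports` helper are hypothetical illustrations and not part of QuantaStor or Ceph.

```python
from collections import defaultdict


def find_inconsistent_ports(assignments):
    """Return {port: {role: [nodes]}} for every port name whose
    network role differs between nodes. An empty dict means the
    plan is consistent."""
    roles_by_port = defaultdict(lambda: defaultdict(list))
    for node, ports in assignments.items():
        for port, role in ports.items():
            roles_by_port[port][role].append(node)
    return {
        port: {role: sorted(nodes) for role, nodes in roles.items()}
        for port, roles in roles_by_port.items()
        if len(roles) > 1  # more than one role seen for this port name
    }


# Hypothetical plan: node name -> {port name -> network role}.
cluster = {
    "node-a": {"eth0": "front-end", "eth1": "back-end"},
    "node-b": {"eth0": "front-end", "eth1": "back-end"},
    "node-c": {"eth0": "back-end", "eth1": "front-end"},  # swapped by mistake
}

for port, roles in sorted(find_inconsistent_ports(cluster).items()):
    print(f"{port} is assigned inconsistently: {roles}")
```

In this example plan, node-c has eth0 and eth1 swapped, so both port names are reported. Once node-c is corrected, the function returns an empty dictionary.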

Enabling S3/SWIFT Gateway Access

When the S3/SWIFT checkbox is selected, the port is no longer usable for accessing the QuantaStor web UI, because traffic on ports 80 and 443 is redirected to the object storage daemon. A quick solution is to use the back-end ports for web management access, or to add a virtual IP address on each node that is dedicated to S3/SWIFT object gateway access.
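The effect of the virtual IP approach can be illustrated with a small planning sketch in Python. It assumes a hand-written list of addresses per node; the field names, the example addresses (taken from the 192.0.2.0/24 documentation range), and the `management_addresses` helper are hypothetical and do not reflect any QuantaStor API.

```python
def management_addresses(addresses):
    """Return the IPs that can still serve the web UI, i.e. those
    without S3/SWIFT gateway access enabled (ports 80/443 are not
    redirected on them)."""
    return [a["ip"] for a in addresses if not a["s3_gateway"]]


# Hypothetical node: the physical front-end IP is kept for management,
# and a virtual IP is added and dedicated to the object gateway.
node_addresses = [
    {"ip": "192.0.2.10", "kind": "physical", "s3_gateway": False},
    {"ip": "192.0.2.50", "kind": "virtual", "s3_gateway": True},
]

usable = management_addresses(node_addresses)
if usable:
    print("Web UI reachable on:", ", ".join(usable))
else:
    print("No address left for web management on this node")
```

If every address on a node has gateway access enabled, the list comes back empty, which is the situation the paragraph above warns about.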