| (148 intermediate revisions by the same user not shown) |

| Line 1: |

Line 1: |

| − | == Highly-Available SAN/NAS Storage (Tiered SAN, ZFS based Storage Pools) ==

| + | __NOTOC__ |

| | + | <!-- Removed Overview header, replaced with Introduction and reduced to H2 for TOC alignment/navigation --> |

| | | | |

| − | === Minimum Hardware Requirements ===

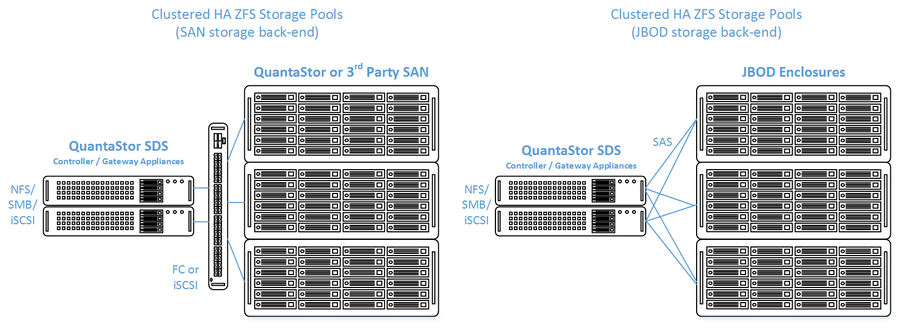

| + | OS Nexus provides two separate Administration Guides for the QuantaStor Clustered High Availability solution, one for each intended deployment scenario. The two deployment methods differ in how shared storage is provisioned: over iSCSI or Fibre Channel (using QuantaStor or a third-party SAN), or through SAS JBODs shared between cluster nodes. |

| | | | |

| − | * 2x (or more) QuantaStor appliances which will be configured as front-end ''controller nodes''

| + | '''Solution Diagrams:'''<br />[[File:Qs_clustered_san.png|900px]] |

| − | * 2x (or more) QuantaStor appliances configured as back-end ''data nodes'' with SAS or SATA disks

| + | |

| − | * High-performance SAN (FC/iSCSI) connectivity between front-end ''controller nodes'' and back-end ''data nodes''

| + | |

| | | | |

| − | === Setup Process === | + | == [[Clustered HA SAN/NAS with JBOD back-end|Clustered HA SAN/NAS with JBOD back-end / Administrators Guide (ZFS-based)]] == |

| | + | Please refer to the Clustered HA with JBOD back-end Administrators Guide if you will be using a shared SAS JBOD solution for your High-Availability Deployment. |

| | | | |

| − | ==== All Appliances ====

| |

| − | * Add your license keys, one unique key for each appliance

| |

| − | * Set up static IP addresses on each appliance (DHCP is the default and should only be used to get the appliance initially set up)

| |

| − | * Right-click on the storage system and set the DNS IP address (e.g. 8.8.8.8) and your NTP server IP address

| |

| | | | |

| − | ==== Back-end Appliances (Data Nodes) ==== | + | == [[Clustered HA SAN/NAS with iSCSI/FC SAN back-end|Clustered HA SAN/NAS with iSCSI/FC SAN back-end / Administrators Guide (ZFS-based)]] == |

| − | * Set up each back-end ''data node'' appliance as per the basic appliance configuration, with one or more storage pools, each with one storage volume per pool.

| + | Please refer to the Clustered HA with iSCSI/FC back-end Administrators Guide if you will be using an iSCSI or Fibre-Channel solution for your High-Availability Deployment. |

| − | ** Ideal pool size is 10 to 20 drives, so you may need to create multiple pools per back-end appliance.

| + | |

| − | ** SAS is recommended but enterprise SATA drives can also be used

| + | |

| − | ** HBA(s) or Hardware RAID controller(s) can be used for storage connectivity

| + | |

| | | | |

| − | ==== Front-end Appliances (Controller Nodes) ====

| + | [[Category: Landing Page]] |

| − | * Connectivity between the front-end and back-end nodes can be FC or iSCSI

| + | |

| − | ===== FC Based Configuration =====

| + | |

| − | * Create ''Host'' entries, one for each front-end appliance and add the WWPN of each of the FC ports on the front-end appliances which will be used for intercommunication between the front-end and back-end nodes.

| + | |

| − | * Direct point-to-point physical cabling connections can be made in smaller configurations to avoid the cost of an FC switch. Here's a guide that can help with some [http://blog.osnexus.com/2012/03/20/understanding-fc-fabric-configuration-5-paragraphs/ advice on FC zone setup] for larger configurations using a back-end fabric.

| + | |

| − | * If you're using a FC switch you should use a fabric topology that'll give you fault tolerance.

| + | |

| − | * Back-end appliances must use QLogic QLE 8Gb or 16Gb FC cards, as QuantaStor can only present Storage Volumes as FC target LUNs via QLogic cards.

| + | |

| − | | + | |

| − | ===== iSCSI Based Configuration =====

| + | |

| − | * It's recommended, though not required, to separate the front-end network (client communication) from the back-end network (communication between the controller and data appliances).

| + | |

| − | * For iSCSI connectivity to the back-end nodes, create a ''Software iSCSI Adapter'' by going to the Hardware Controllers & Enclosures section and adding an iSCSI Adapter. This will take care of logging into and accessing the back-end storage. The back-end storage appliances must assign their Storage Volumes to all the ''Hosts'' for the front-end nodes with their associated iSCSI IQNs.

| + | |

| − | * Right-click and choose Modify Network Port on each port where you want to disable client iSCSI access. If you have 10GbE, be sure to disable iSCSI access on the slower 1GbE ports used for management access and/or remote-replication.

| + | |

| − | | + | |

| − | ==== HA Network Setup ====

| + | |

| − | * Make sure that eth0 is on the same network on both appliances

| + | |

| − | * Make sure that eth1 is on the same network on both appliances, and that this network is separate from the eth0 network

| + | |

| − | * Create the ''Site Cluster'' with Ring 1 on the first network and Ring 2 on the second network. Both front-end nodes should be in the ''Site Cluster''; back-end nodes can be left out. This establishes a redundant (dual ring) heartbeat between the front-end appliances, which is used to detect hardware problems and in turn trigger a failover of the pool to the passive node.

| + | |

| − | ==== HA Storage Pool Setup ====

| + | |

| − | * Create a ''Storage Pool'' on the first front-end appliance (ZFS based) using the ''physical disks'' which have arrived from the back-end appliances.

| + | |

| − | ** QuantaStor will automatically analyze the disks from the back-end appliances and stripe across the appliances to ensure proper fault-tolerance across the back-end nodes.

| + | |

| − | * Create a ''Storage Pool HA Group'' for the pool created in the previous step. If the storage is not accessible to both appliances, QuantaStor will block you from creating the group.

| + | |

| − | * Create a Storage Pool Virtual Interface for the Storage Pool HA Group. All NFS/iSCSI access to the pool must be through the Virtual Interface IP address to ensure highly-available access to the storage for the clients.

| + | |

| − | * Enable the Storage Pool HA Group. Automatic Storage Pool fail-over to the passive node will now occur if the active node is disabled or the heartbeat between the nodes is lost.

| + | |

| − | * Test pool failover: right-click on the Storage Pool HA Group and choose 'Manual Failover' to fail over the pool to another node.

| + | |

| − | | + | |

| − | ==== Standard Storage Provisioning ====

| + | |

| − | * Create one or more ''Network Shares'' (CIFS/NFS) and ''Storage Volumes'' (iSCSI/FC)

| + | |

| − | * Create one or more ''Host'' entries with the iSCSI initiator IQN or FC WWPN of your client hosts/servers that will be accessing block storage.

| + | |

| − | * Assign Storage Volumes to client ''Host'' entries created in the previous step to enable iSCSI/FC access to Storage Volumes.

| + | |

| − | | + | |

| − | === Diagram of Completed Configuration ===

| + | |

| − | | + | |

| − | [[File:osn_zfsha_workflow_tiered.png|500px]] | + | |
