Scale-out Object Setup (Ceph)

(NOTE THIS PAGE IS UNDER CONSTRUCTION AND WILL BE READY BY MID-MONTH, 3/15/2016)

Contents

- 1 Overview

- 2 Completed Configuration Diagram

- 3 Terminology

- 4 Creating a QuantaStor Grid

- 5 Network Configuration

- 6 Hardware RAID Configuration

- 7 NTP and DNS Configuration

- 8 Creating a Ceph Cluster

- 9 Creating OSDs and Journals

- 10 Creating Object Storage Groups

- 11 Creating Resource Domains

- 12 Management Operations

Overview

QuantaStor supports scale-out object storage via the S3 and SWIFT compatible REST-based protocols, with scalability to 64PB of storage and 64 appliances per grid. QuantaStor integrates with and extends Ceph storage technology to deliver scale-out block (iSCSI, Ceph RBD) and object storage (S3/SWIFT). Ceph is a highly-available and elastic storage technology that can scale from a small 3x appliance configuration to hyper-scale. Within a QuantaStor grid up to 20x individual Ceph clusters can be managed through a single pane of glass by logging into any appliance in the grid. Further, QuantaStor provides web UI management for all configuration and management operations, making it possible to set up large, complex configurations in minutes. This guide covers QuantaStor and Ceph terminology, then walks through the Ceph cluster configuration and setup process, and finishes with day-to-day management operations.

Minimum Hardware Requirements

To achieve quorum a minimum of three appliances is required. The storage in each appliance can be SAS or SATA HDDs or SSDs, but a minimum of 1x SSD is required for use as a journal (write log) device in each appliance. Appliances must use a hardware RAID controller for QuantaStor boot/system devices and we recommend using a hardware RAID controller for the storage pools as well.

- Intel Xeon or AMD Opteron CPU

- 64 GB RAM

- 3x QuantaStor storage appliances minimum (up to 64x appliances)

- 1x 200GB or larger high write endurance SSD/NVMe/NVRAM device for the write log / journal (we recommend 4x OSDs per journal device and no more than 8x OSDs per journal device)

- 5x to 100x HDDs or SSDs for data storage per appliance

- 1x hardware RAID controller for OSDs (SAS HBA can also be used but RAID is faster)

Setup Process Overview

This section is a step-by-step guide to setting up scale-out S3/SWIFT object storage with a grid of 3x or more QuantaStor appliances.

- Log in to the QuantaStor web management interface on each appliance. The default username and password are 'admin' / 'password' without the quotes. If the appliance was pre-configured, use the credentials provided by your service provider to log in as admin.

- Add your license keys, one unique key for each appliance by choosing the License Manager then adding one key per appliance. Scale-out storage configurations require Cloud Edition or Enterprise Edition license keys, one per appliance. Journal devices do not deduct from the licensed capacity.

- Set up static IP addresses on each node (DHCP is the default and should only be used to get the appliance initially set up)

- Right-click on the storage system, choose 'Modify Storage System..' and set the DNS IP address (e.g. 8.8.8.8), and the NTP server IP address (important!)

- Set up separate front-end and back-end network ports (e.g. eth0 = 10.0.4.5/16, eth1 = 10.55.4.5/16) for S3/SWIFT and Ceph traffic respectively

- Create a Grid out of the 3 appliances (use Create Grid then add the other two nodes using the Add Grid Node dialog)

- Create Hardware RAID5 Units using 5 disks per RAID unit (4d + 1p) on each node until all HDDs have been used (see Hardware Enclosures & Controllers section for Create Hardware RAID Unit)

- Create Ceph Cluster using all the appliances in your grid that will be part of the Ceph cluster, 3x appliances minimum are required

- Use OSD Multi-Create to add storage and journals to the cluster. In that dialog you'll select some SSDs to be used as Journal Devices and the HDDs to be used for data storage across the cluster nodes.

- Create an Object Storage Group; this will automatically use all the available OSDs in the designated Ceph Cluster.

- Create at least one Ceph User Access entry to generate the Access Key and Secret Key that you'll need to access the object storage via S3 and/or SWIFT

At this point everything is configured and the object storage gateways are online and usable via the S3/SWIFT protocols.
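The network step above asks for separate front-end and back-end ports. As a minimal sketch, assuming only the example addresses from that step, the following uses Python's standard `ipaddress` module to check that two interface addresses fall on non-overlapping subnets. The function name `networks_are_separate` is hypothetical and is not part of QuantaStor.

```python
import ipaddress


def networks_are_separate(front_end: str, back_end: str) -> bool:
    """Return True when the two interface addresses sit on non-overlapping subnets."""
    front = ipaddress.ip_interface(front_end).network
    back = ipaddress.ip_interface(back_end).network
    return not front.overlaps(back)


# Example addresses taken from the setup steps (eth0 front-end, eth1 back-end).
print(networks_are_separate("10.0.4.5/16", "10.55.4.5/16"))  # True
# Two ports on the same /16 would not separate S3/SWIFT traffic from Ceph traffic.
print(networks_are_separate("10.0.4.5/16", "10.0.9.9/16"))   # False
```

A check like this could be run against a planned address list before the static IPs are assigned on each node.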

Completed Configuration Diagram

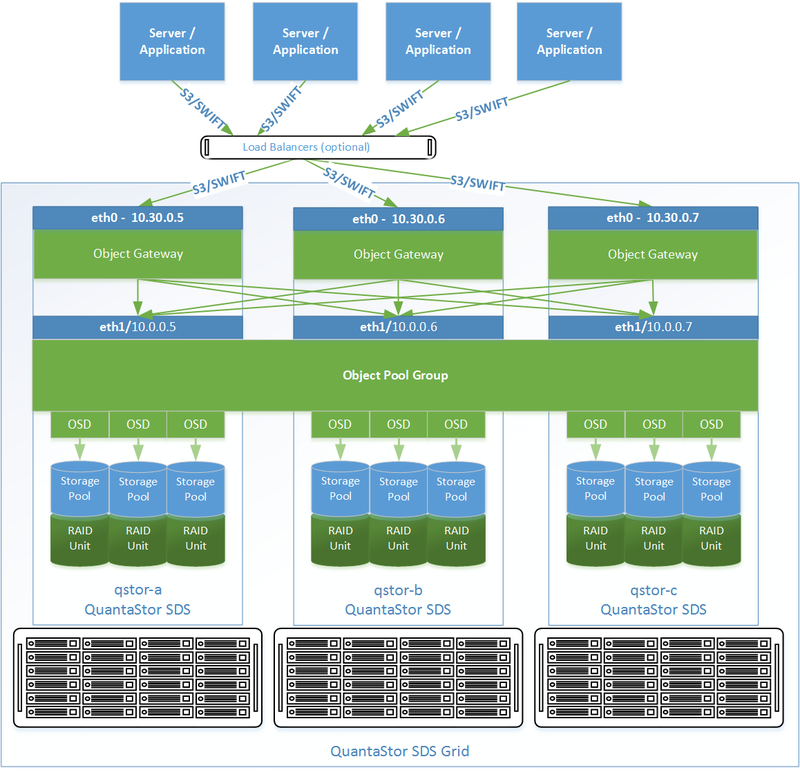

This diagram shows a simple 3x appliance grid (scalable to 64x appliances) which delivers object storage to a set of servers and applications via the S3/SWIFT protocols.

Terminology

What is a Ceph Cluster?

A Ceph cluster is a group of three or more appliances that have been clustered together using the Ceph storage technology. When a cluster is initially created QuantaStor configures the first three appliances to have active Ceph Monitor services running. On configurations with more than 16 nodes two additional monitors should be set up; this can be done through the QuantaStor web UI in the Scale-out Block & Object section. Note that when the Ceph Cluster is initially created there is no storage (OSDs) associated with it, only monitors.

What is a Ceph Monitor?

The Ceph Monitors form a Paxos "part-time parliament" cluster for the management of cluster membership, configuration information, and state. Paxos is an algorithm (developed by Leslie Lamport in the late 80s) which uses a three-phase consensus protocol to ensure that cluster updates can be done in a fault-tolerant, timely fashion even in the event of a node outage or a node that is acting improperly. Ceph uses the algorithm so that the membership, configuration and state information is updated safely across the cluster in an efficient manner. Since the algorithm requires a quorum (a majority) of monitors to agree on any given change, an odd number of appliances (three or more) is required for any given Ceph cluster deployment. Monitors start up automatically when the appliance starts, and the status and health of monitors is tracked by QuantaStor and displayed in the web UI. A majority of the Ceph monitors must be online at all times, so in a three node configuration two of the three appliances must be online for the storage to be online and available. In configurations with more than 16 nodes 5x or more monitors should be deployed (additional monitors can be deployed via the QuantaStor web UI).
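The quorum arithmetic described above can be sketched in a few lines. This is a generic majority calculation, not Ceph or QuantaStor code, and the function names are hypothetical. It shows why an even monitor count adds no extra fault tolerance over the odd count below it.

```python
def quorum_size(monitors: int) -> int:
    """Smallest number of monitors that forms a strict majority."""
    return monitors // 2 + 1


def tolerated_failures(monitors: int) -> int:
    """How many monitors can be offline while a majority is still reachable."""
    return monitors - quorum_size(monitors)


for count in (3, 4, 5):
    print(count, quorum_size(count), tolerated_failures(count))
# 3 monitors: quorum of 2, tolerates 1 offline
# 4 monitors: quorum of 3, still tolerates only 1 offline
# 5 monitors: quorum of 3, tolerates 2 offline
```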

What is an OSD?

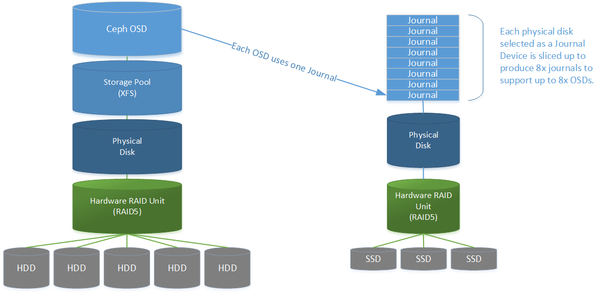

OSD stands for Ceph Object Storage Daemon; it is a Ceph daemon process that reads and writes data. Each Ceph cluster in QuantaStor must have at least 9x OSDs (3x OSDs per appliance) and each OSD is attached to a single Storage Pool. When a client writes data to a Ceph Pool (whether it's via an iSCSI/RBD block device or via the S3/SWIFT gateway) the data is spread out across the OSDs in the cluster. QuantaStor OSDs are always attached to XFS based Storage Pools and are always assigned a journal device. Because the creation of OSDs, their underlying Storage Pools, and their associated Journal devices is a multi-step process, QuantaStor has a Multi-OSD Create configuration dialog which does all of these configuration steps for an entire cluster in a single dialog. This makes it easy to set up even hyper-scale Ceph deployments in minutes.

What is a Journal Device?

Writes are never cached, and that's important because it ensures that every write is written to stable media (the disk devices) before Ceph acknowledges to a client that the write is complete. In the event of a power outage while a write is in flight there is no problem, as the write is only complete once it's on stable media. The cluster will work around the bad node until it comes back online and re-synchronizes with the cluster. The downside to never caching writes is performance. HDDs are slow because rotational latency and seek times for spinning disk are high. The solution is to log every write to fast persistent solid state media (SSD, NVMe, 3D XPoint, NVDIMM, etc.) which is called a journal device. Once the write is in the journal, Ceph can report to the client that the write is "complete" (even though it has not yet been written out to the slow HDDs) since at that point the data can be recovered automatically from the journal device in the event of a power outage. A second copy of the data is held in RAM and is used to lazily write the data out to disk. In this way the journal device is used only as a write log and does not need to be re-read during normal operation. Datacenter grade or Enterprise grade SSDs must be used for Ceph journal devices. Desktop SSD devices are fast for a few seconds, then write performance drops significantly. They also wear out very quickly, and OSNEXUS will not certify the use of desktop SSDs in any production deployment of any kind. For example, we tested a popular desktop SSD device which initially produced 600MB/sec, but performance dropped to just 30MB/sec after a few seconds of sustained write load.

Within QuantaStor any block device in the Physical Disks section can be turned into a Journal Device but only high performance write-endurance enterprise SSD, NVMe or PCI SSD devices should be used. A device that is assigned to be a Journal Device is sliced up into 8x journal partitions so that up to 8x OSDs can be supported by the Journal Device. For an appliance with 20x OSDs one would want at least 3x SSDs but in practice one would use more SSDs to ensure even distribution of load across the Journal Devices. Note that with a hardware RAID controller multiple SSDs can be combined using RAID5 to make a high-performance fault-tolerant journal. We recommend using PCI SSD, NVMe devices, multiple SSDs in RAID5 or a pair in RAID1. One can also use a dedicated RAID controller for the RAID5 based SSD journal devices to further boost performance. Again, each of these logical or physical devices is sliced up into 8x journal partitions so that up to 8x OSDs can be supported per Journal Device.
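The journal sizing rule above (8x journal partitions per device, with 4x OSDs per journal device recommended in the hardware requirements) reduces to a ceiling division. The sketch below is only an illustration of that arithmetic; the function and constant names are hypothetical.

```python
import math

MAX_OSDS_PER_JOURNAL = 8          # each Journal Device is sliced into 8x partitions
RECOMMENDED_OSDS_PER_JOURNAL = 4  # recommendation from the hardware requirements


def journal_devices_needed(osd_count: int, osds_per_journal: int) -> int:
    """Number of Journal Devices required to back osd_count OSDs."""
    if osd_count < 0 or osds_per_journal < 1:
        raise ValueError("osd_count must be >= 0 and osds_per_journal must be >= 1")
    return math.ceil(osd_count / osds_per_journal)


# The 20x OSD appliance from the text.
print(journal_devices_needed(20, MAX_OSDS_PER_JOURNAL))          # 3 (bare minimum)
print(journal_devices_needed(20, RECOMMENDED_OSDS_PER_JOURNAL))  # 5 (recommended ratio)
```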

What is a Placement Group (PG)?

Placement groups effectively implement mirroring (or erasure coding) of data across OSDs according to the configured replica count for a given Ceph Pool. When a Ceph Pool is created the user specifies how many copies of the data must be maintained by that pool to ensure a given level of high-availability and fault-tolerance (usually 2 copies when using hardware RAID or 3 copies otherwise). Ceph in turn creates a series of Placement Groups, as directed by QuantaStor, to be associated with the Ceph Pool. Think of the placement groups as logical mini-mirrors in a RAID10 configuration. Each placement group is either a two-way, three-way or four-way mirror across 2, 3, or 4 OSDs respectively. Since the number of OSDs will grow over the life of the cluster, QuantaStor allocates a large number of PGs for each Ceph Pool to facilitate even distribution of data across OSDs and to allow for future expansion as OSDs are added. In this way Ceph can very efficiently re-organize and re-balance PGs to mirror across new OSDs as they are added. The PG count stays fixed as OSDs are added, but one can run a maintenance command to increase the PG count for a Ceph Pool if the PG count gets low relative to the number of OSDs in the Pool. In general the PG count should be roughly 10x to 100x higher than the OSD count for a given Ceph Pool. Just like in RAID10 technology a PG can become degraded if one or more copies is offline, and Ceph is designed to keep running even in a degraded state so that whole systems can go offline without any disruption to clients accessing the cluster. Ceph also automatically repairs and updates the offline PGs once the offline OSDs come back online. If the offline appliance doesn't come back online in a reasonable amount of time, the cluster will heal itself by adjusting the PGs to swap out the offline OSDs for good online OSDs. In this way a cluster will automatically heal a Ceph Pool back to 100%. Also, if an OSD is explicitly removed, the PGs referencing it are re-balanced and re-organized across the remaining OSDs to recover the system back to 100% health.
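The 10x to 100x guideline above can be checked with simple arithmetic. The following is a minimal sketch that encodes only that rule of thumb; the function names and the example PG and OSD counts are hypothetical, and it does not reproduce how QuantaStor actually chooses PG counts.

```python
from typing import Tuple


def pg_guideline(osd_count: int) -> Tuple[int, int]:
    """Rough PG count range (low, high) for a pool spanning osd_count OSDs."""
    return 10 * osd_count, 100 * osd_count


def pg_count_is_low(pg_count: int, osd_count: int) -> bool:
    """True when the fixed PG count has fallen below 10x the OSD count."""
    low, _high = pg_guideline(osd_count)
    return pg_count < low


# A pool created with 512 PGs on the minimum 9x OSD cluster is within range.
print(pg_guideline(9), pg_count_is_low(512, 9))    # (90, 900) False
# After growing to 60x OSDs the same 512 PGs fall below the guideline.
print(pg_guideline(60), pg_count_is_low(512, 60))  # (600, 6000) True
```

When the check returns True, that is the situation in which the PG count for the pool would be increased with a maintenance command.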

What is an Object Storage Group?

S3/SWIFT object storage gateways require the creation and management of several Ceph Pools which together represent a region+zone for the storage of objects and buckets. QuantaStor groups all the Ceph Pools used to manage a given object storage configuration into what is called an Object Storage Group. QuantaStor also automatically deploys and manages Ceph S3/SWIFT Object Gateways on all appliances in the cluster that were selected as gateway nodes when the Object Storage Group was created (additional gateways can be deployed on new or existing nodes at any time). Note that Object Storage Groups are a QuantaStor construct, so you won't find them described in general Ceph documentation.

What are User Object Access Entries?

Access to object storage via S3 and SWIFT requires an Access Key and a Secret Key, just like with Amazon S3 storage. Each User Object Access Entry is an Access Key + Secret Key pair which is associated with a Ceph Cluster and Object Storage Group. You must allocate at least one User Object Access Entry to read/write buckets and objects in an Object Storage Group via the Ceph S3/SWIFT Gateway.

What is a Resource Domain? / Understanding CRUSH Maps

Ceph has the ability to organize the placement groups (which mirror data across OSDs) so that high-availability and fault-tolerance are maintained even in the event of a rack or site outage. A rack of appliances, a site, or a building each represents a failure-domain. Given information about where appliances are deployed (site, rack, chassis, etc.) a map can be created so that the PGs are intelligently laid out to ensure high-availability in the event of an outage of one or more failure-domains, depending on the level of redundancy. This map of how to mirror the data to ensure optimal performance and availability is called a Ceph CRUSH (Controlled Replication Under Scalable Hashing) map. QuantaStor creates and sets up CRUSH maps automatically so that administrators do not need to set them up manually, a process that can be quite complex. To facilitate automatic CRUSH map management one must input some information which tells QuantaStor where the appliances are deployed. This is done by creating a tree of Resource Domains via the web UI (or via CLI/REST APIs) to organize the appliances in a given QuantaStor grid into sites, buildings and racks. Using this information QuantaStor generates the CRUSH map automatically when pools are provisioned to ensure optimal performance and high-availability. Custom CRUSH map changes can still be made to adjust the map after the pool(s) are created, and OSNEXUS provides consulting services to meet special requirements. Resource Domains are a QuantaStor construct so you will not find mention of them in general Ceph documentation, but they map closely to the CRUSH bucket hierarchy.
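To make the failure-domain idea concrete, here is a deliberately simplified sketch. It is not the CRUSH algorithm and not QuantaStor's map generator; it only shows the constraint a failure-domain-aware layout enforces, namely that each copy of the data lands in a different rack. The function name, OSD names, and rack names are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def place_copies(osds: List[Tuple[str, str]], copies: int) -> List[str]:
    """Pick one OSD from each of `copies` distinct racks.

    osds is a list of (osd_name, rack_name) pairs. Racks are taken in sorted
    order and the first OSD listed in each rack is used, so the result is
    deterministic (real CRUSH placement is hash-based and weighted).
    """
    by_rack: Dict[str, List[str]] = defaultdict(list)
    for osd_name, rack_name in osds:
        by_rack[rack_name].append(osd_name)
    if copies > len(by_rack):
        raise ValueError("not enough failure domains for the requested copies")
    return [by_rack[rack][0] for rack in sorted(by_rack)[:copies]]


osds = [("osd.0", "rack-a"), ("osd.1", "rack-a"),
        ("osd.2", "rack-b"), ("osd.3", "rack-c")]
print(place_copies(osds, 2))  # ['osd.0', 'osd.2']
```

With this constraint in place, losing all of rack-a still leaves one copy of the data online in rack-b.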

Creating a QuantaStor Grid

Network Configuration

Hardware RAID Configuration

Ceph has the ability to repair itself in the event of the loss of one or more OSDs, and OSDs can be provisioned one-to-one with Storage Pools, each of which is one-to-one with an HDD. QuantaStor supports this style of deployment, but our reference configurations always use hardware RAID to combine disks into 5x disk RAID5 groups for several reasons:

- Disk failures have no impact on network load since they're repaired using a hot-spare device associated with the RAID controller

- Journals can be made fault-tolerant and easily maintained by configuring them into RAID1 or RAID5 units.

- Multiple DC grade SSDs can be combined to make ultra high-performance and high-endurance Journal Devices, and the sequential nature of journal writes lends itself well to RAID5 write patterns.

- Disk drives are easy to replace with no knowledge of Ceph required. Simply remove the bad drive identified by the RED LED and replace it with a good drive. The RAID controller will absorb the new drive and automatically start repairing the degraded array.

- When the storage is fault-tolerant one need only maintain two (2) copies of the data (instead of 3x) so the storage efficiency is 40% usable vs. the standard Ceph mode of operation which is only 33% usable. That's a 20% increase in usable capacity.

- RAID controllers bring with them 1GB of NVRAM write-back cache which greatly boosts the performance of OSDs and Journal devices. (Be sure that the card has the CacheVault/MaxCache supercapacitor which is required to protect the write cache).

- Hardware RAID reduces the OSD and placement group count by 5x or more, which allows the cluster to scale that much bigger and with reduced complexity (a cluster with 100,000 PGs uses less RAM per appliance and is easier for Ceph monitors to manage than one with 500,000 PGs).
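The storage efficiency figures in the list above follow from simple arithmetic: a 4d + 1p RAID5 unit keeps 80% of raw capacity, and that is then divided by the number of copies the Ceph Pool maintains. The sketch below just works through those numbers; the function name is hypothetical.

```python
def usable_fraction(data_disks: int, parity_disks: int, copies: int) -> float:
    """Fraction of raw capacity left after RAID parity and Ceph replication."""
    raid_efficiency = data_disks / (data_disks + parity_disks)
    return raid_efficiency / copies


raid5_two_copies = usable_fraction(4, 1, 2)  # RAID5 (4d + 1p) with 2 copies
no_raid_three = usable_fraction(1, 0, 3)     # one disk per OSD with 3 copies
gain = raid5_two_copies / no_raid_three - 1

print(f"{raid5_two_copies:.0%}")  # 40%
print(f"{no_raid_three:.0%}")     # 33%
print(f"{gain:.0%}")              # 20%
```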

Apart from avoiding the nominal extra cost of a SATA/SAS RAID controller over a SATA/SAS HBA, we see few benefits and many drawbacks to using HBAs with Ceph. Some in the Ceph community prefer, as a maintenance strategy, to let bad OSD devices fail in place and never replace them. Our preference is to replace bad HDDs to maintain 100% of the designed capacity for the life of the cluster. Hardware RAID makes that especially easy, and QuantaStor has integrated management for all major RAID controller models (and HBAs) via the web UI (and CLI/REST). QuantaStor's web UI also has an enclosure management view so that it's easy to identify which drive is bad and where it is located in the server chassis.