Overview (Getting Started)

Revision as of 18:21, 14 November 2024

The "Getting Started" or "Configuration Guide" in QuantaStor serves as a comprehensive resource that provides instructions, recommendations, and best practices for setting up and configuring QuantaStor storage management software.

The purpose of the "Getting Started" or "Configuration Guide" is to assist users with the initial setup and configuration of QuantaStor. It covers the essential tasks, grouped into initial, pool, provisioning, and miscellaneous setup.

Navigation: Storage Management --> Storage System --> Storage System --> Getting Started (toolbar)

Initial Setup

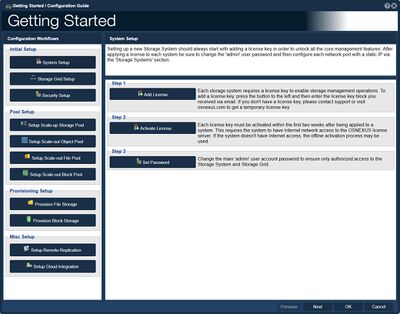

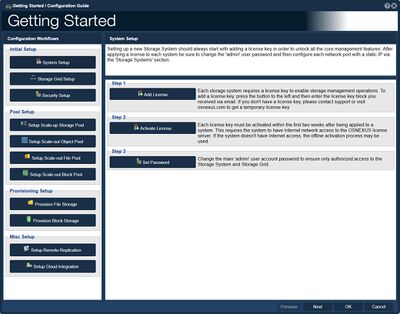

- System Setup: Setting up a new Storage System should always start with adding a license key to unlock the core management features. After applying a license to each system, change the 'admin' user password and then configure each network port with a static IP address via the 'Storage Systems' section.

  - Add License: Each Storage System requires a license key to enable storage management operations. To add a license key, press the button to the left and then enter the license key block you received via email. If you don't have a license key, contact support or visit osnexus.com to get a temporary license key.

  - Activate License: Each license key must be activated within the first two weeks after it is applied to a system. Activation requires Internet access to the OSNEXUS license server; systems without Internet access can use the offline activation process instead.

  - Set Password: Change the password of the main 'admin' user account to ensure that only authorized users can access the Storage System and Storage Grid.
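The two-week activation window is simple date arithmetic. The sketch below is illustrative only: it assumes "two weeks" means 14 calendar days from the day the key is applied, and the helper names `activation_deadline` and `days_remaining` are hypothetical, not part of QuantaStor.

```python
from datetime import date, timedelta

# Assumption: "within the first two weeks" means 14 calendar days
# counted from the date the license key was applied.
ACTIVATION_WINDOW = timedelta(days=14)


def activation_deadline(applied_on: date) -> date:
    """Date by which the license should be activated (hypothetical rule)."""
    return applied_on + ACTIVATION_WINDOW


def days_remaining(applied_on: date, today: date) -> int:
    """Days left in the activation window; negative once it has closed."""
    return (activation_deadline(applied_on) - today).days


if __name__ == "__main__":
    applied = date(2024, 11, 14)
    print(activation_deadline(applied))                 # 2024-11-28
    print(days_remaining(applied, date(2024, 11, 20)))  # 8
```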

Initial Storage Grid setup procedures.

- Storage Grid Setup: Storage Grid technology makes it easy to manage large numbers of Storage Systems as one distributed system. Within a Storage Grid, you can create and manage multiple scale-out and scale-up storage clusters within and across sites.

  - Create Storage Grid: Combine Storage Systems within and across sites into a Storage Grid to simplify management of storage clusters and to enable features such as remote replication.

  - Add System to Grid: Add Storage Systems to the Storage Grid created in the previous step.

  - Update DNS & NTP: Apply common network settings, including the domain suffix, NTP, and DNS, to a group of Storage Systems.

  - Update Time Zone: Set the time zone of each system to match the zone in which it is physically deployed.

  - Configure Alerting: Configure the Alert Manager to send alert notifications via email, SNMP, Slack, PagerDuty, and/or other call-home options so that IT staff can quickly address maintenance issues.

Initial Security setup procedures.

- Security Setup: Configure security settings to meet your organization's security compliance requirements. Security settings are applied grid-wide, including to any systems added to the Storage Grid in the future.

  - Configure Security Policy: Configure the password policy settings to meet NIST, HIPAA, and other compliance standards.

  - Join AD Domain: Each Storage System may be joined to an Active Directory (AD) domain to provide SMB access to AD users and AD groups.

  - Configure System Firewall: Configure the network firewall settings to block protocols that will not be used.
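Choosing which ports to block starts from the protocols you actually serve. This hypothetical helper (`ports_to_block` is not a QuantaStor API) maps each unused protocol to its standard default TCP port (NFS 2049, SMB 445, iSCSI 3260, NVMe/TCP 4420). It assumes default ports and omits auxiliary services such as rpcbind, so treat it as a starting checklist rather than a complete firewall policy.

```python
# Standard default TCP ports for storage protocols discussed in this guide.
# Assumption: services listen on their defaults; NFSv3 also needs rpcbind and
# mountd ports, which this sketch deliberately leaves out.
DEFAULT_PORTS: dict[str, set[int]] = {
    "nfs": {2049},
    "smb": {445},
    "iscsi": {3260},
    "nvme-tcp": {4420},
}


def ports_to_block(protocols_in_use: set[str]) -> set[int]:
    """Return the default ports of every known protocol that is not in use."""
    unknown = protocols_in_use - set(DEFAULT_PORTS)
    if unknown:
        raise ValueError(f"unknown protocol(s): {sorted(unknown)}")
    blocked: set[int] = set()
    for protocol, ports in DEFAULT_PORTS.items():
        if protocol not in protocols_in_use:
            blocked |= ports
    return blocked


if __name__ == "__main__":
    # A file-only deployment keeps NFS and SMB open and blocks the block-storage ports.
    print(sorted(ports_to_block({"nfs", "smb"})))  # [3260, 4420]
```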

Pool Setup

- Setup Scale-up Storage Pool: Scale-up Storage Pools may be set up in single-node mode or clustered HA mode. HA Storage Pools require at least two Storage Systems, and the storage media that makes up the Storage Pool must support IO fencing. Media types that support IO fencing include dual-ported SAS, dual-ported NVMe, FC, and iSCSI devices. All access to clustered HA Storage Pools goes through clustered virtual interfaces (VIFs) to ensure continuous data availability when the Storage Pool is moved to its paired Storage System during an HA failover.

  - Create Site Cluster: Creates a Site Cluster configuration for two or more Storage Systems within the Storage Grid. Configure two Cluster Rings for heartbeat on each Site Cluster for redundancy.

  - Add Cluster Ring: Creates an additional Cluster Ring in the selected Site Cluster configuration. Two Cluster Rings per Site Cluster are highly recommended.

  - Create Storage Pool: Select the disks and configuration settings to create a new Storage Pool designed for your application and/or virtual machine workloads.

  - Create HA Group: Associating a Storage Pool with a high-availability (HA) Group lets you move the pool between a pair of Storage Systems and enables automatic failover between them in the event of a hardware, network, or software issue. HA Groups without an associated Site Cluster cannot fail over automatically when a system fails.

  - Add Pool HA VIF: Storage Pool high-availability virtual interfaces (HA VIFs) attach to and move with their associated HA Storage Pool. This ensures continuous access to the Storage Volumes and Network Shares in the pool.
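The scale-up HA requirements above can be expressed as a pre-flight check. This is a minimal sketch, not a QuantaStor API: the `Device` type, the media labels, and `check_ha_pool` are hypothetical. The rules encode only what this section states: two or more Storage Systems, IO-fencing-capable media, a Site Cluster for automatic failover, and two recommended Cluster Rings.

```python
from dataclasses import dataclass

# Media types that this section lists as supporting IO fencing (hypothetical labels).
FENCING_CAPABLE_MEDIA = {"sas-dual-port", "nvme-dual-port", "fc", "iscsi"}


@dataclass(frozen=True)
class Device:
    name: str
    media: str  # e.g. "sas-dual-port" or "sata"


def check_ha_pool(
    systems: list[str],
    devices: list[Device],
    has_site_cluster: bool,
    cluster_rings: int,
) -> tuple[list[str], list[str]]:
    """Return (errors, warnings) for a proposed clustered HA Storage Pool."""
    errors: list[str] = []
    warnings: list[str] = []

    # Hard requirements: two or more systems and fencing-capable media.
    if len(set(systems)) < 2:
        errors.append("HA Storage Pools require at least two Storage Systems")
    for dev in devices:
        if dev.media not in FENCING_CAPABLE_MEDIA:
            errors.append(f"{dev.name}: '{dev.media}' media does not support IO fencing")

    # Soft requirements: automatic failover needs a Site Cluster,
    # and two Cluster Rings are recommended for heartbeat redundancy.
    if not has_site_cluster:
        warnings.append("no Site Cluster: the HA Group cannot fail over automatically")
    elif cluster_rings < 2:
        warnings.append("fewer than two Cluster Rings configured")

    return errors, warnings


if __name__ == "__main__":
    errs, warns = check_ha_pool(
        ["qs-node-a", "qs-node-b"],
        [Device("disk0", "sas-dual-port"), Device("disk1", "sata")],
        has_site_cluster=True,
        cluster_rings=1,
    )
    print(errs)   # ["disk1: 'sata' media does not support IO fencing"]
    print(warns)  # ['fewer than two Cluster Rings configured']
```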

- Setup Scale-out Object Pool: Scale-out object storage configurations deliver S3 object storage via S3-compatible buckets that may also be accessed as file storage via NFS. Object storage is typically set up with an erasure-coding layout to ensure fault tolerance and high availability. A scale-out storage cluster of three (3) or more Storage Systems must be set up first.

  - Create Cluster: Scale-out storage clusters can simultaneously deliver file, block, and object storage and must consist of at least three (3) Storage Systems.

  - Create OSDs & Journals: Devices must be assigned to the cluster before Storage Pools can be provisioned. Systems that use HDDs must also include SSDs to accelerate write performance and metadata access via Journal Groups.

  - Create Object Pool Group: An Object Pool Group must be created before S3 storage buckets can be provisioned.

  - Add S3 Gateway: Select the storage cluster nodes on which to deploy new S3 Gateway service instances. Users access their object storage buckets via these nodes, so deploying S3 Gateways on all nodes is recommended for best performance.

  - Create S3 User: Create one or more S3 Users (each with an 'access key' and a 'secret key') for accessing S3 Buckets.

  - Create S3 Bucket: Create one or more buckets for storing objects via the S3 protocol.
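As a general illustration of the erasure-coding trade-off mentioned above (not a QuantaStor-specific formula): a layout with k data chunks and m coding chunks spreads each object over k + m chunks, survives the loss of any m of them, and leaves k/(k + m) of raw capacity usable. The sketch assumes each chunk is placed on a different Storage System, so the layout needs at least k + m systems; the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ErasureLayout:
    k: int  # data chunks per object
    m: int  # coding (parity) chunks per object

    def __post_init__(self) -> None:
        if self.k < 1 or self.m < 1:
            raise ValueError("k and m must both be at least 1")

    @property
    def usable_fraction(self) -> float:
        """Share of raw capacity that holds data rather than parity."""
        return self.k / (self.k + self.m)

    @property
    def tolerated_failures(self) -> int:
        """Chunks (here: Storage Systems) that can be lost without losing data."""
        return self.m

    @property
    def min_systems(self) -> int:
        """Assumes one chunk per Storage System (host-level failure domain)."""
        return self.k + self.m


def usable_capacity_tb(raw_tb: float, layout: ErasureLayout) -> float:
    """Usable capacity before filesystem and metadata overhead."""
    return raw_tb * layout.k / (layout.k + layout.m)


if __name__ == "__main__":
    layout = ErasureLayout(k=4, m=2)
    print(layout.min_systems, layout.tolerated_failures)  # 6 2
    print(usable_capacity_tb(600.0, layout))              # 400.0
```

With k = 4 and m = 2, two thirds of raw capacity is usable and any two systems can fail; a larger k raises efficiency but also raises the minimum number of systems.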

- Setup Scale-out File Pool: Scale-out file Storage Pools are POSIX-compliant and deliver high-performance NFS, SMB, and native CephFS client access. Scale-out Storage Pools are highly available and can use two or more metadata service (MDS) instances to load-balance and scale locking performance.

  - Create Cluster: Scale-out storage clusters deliver file, block, and S3 object storage and must consist of at least three (3) Storage Systems.

  - Create OSDs & Journals: Devices must be assigned to the cluster before a scale-out NAS-based Storage Pool can be provisioned. Systems that use HDDs must also include SSDs to accelerate write performance and metadata access via Journal Groups.

  - Add MDS: Two or more scale-out file system Metadata Service (MDS) instances must be allocated to collectively manage file locking.

  - Create File Storage Pool: Create a new scale-out Storage Pool to provide distributed NFS, SMB, and native CephFS access to Network Shares within the pool.

  - Create Share: Create one or more Network Shares to deliver file storage via the NFS and SMB protocols.
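The steps above imply preconditions that must hold before the file Storage Pool is created. The following hypothetical check (`ClusterNode` and `file_pool_blockers` are illustrative names, and it assumes at most one MDS instance per node) encodes the thresholds stated in this section: at least three Storage Systems, SSDs in every system that uses HDDs, and two or more MDS instances.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterNode:
    name: str
    hdd_count: int
    ssd_count: int
    runs_mds: bool = False  # assumption: at most one MDS instance per node


def file_pool_blockers(nodes: list[ClusterNode]) -> list[str]:
    """List reasons a scale-out file Storage Pool cannot be created yet (empty = ready)."""
    blockers: list[str] = []
    if len(nodes) < 3:
        blockers.append(f"cluster has {len(nodes)} Storage Systems; at least 3 are required")
    for node in nodes:
        # HDD-based systems need SSDs to back their Journal Groups.
        if node.hdd_count > 0 and node.ssd_count == 0:
            blockers.append(f"{node.name}: uses HDDs but has no SSDs for journals")
    mds_count = sum(1 for node in nodes if node.runs_mds)
    if mds_count < 2:
        blockers.append(f"{mds_count} MDS instance(s) allocated; at least 2 are required")
    return blockers


if __name__ == "__main__":
    nodes = [
        ClusterNode("qs-1", hdd_count=12, ssd_count=2, runs_mds=True),
        ClusterNode("qs-2", hdd_count=12, ssd_count=2, runs_mds=True),
        ClusterNode("qs-3", hdd_count=12, ssd_count=0),
    ]
    print(file_pool_blockers(nodes))  # ['qs-3: uses HDDs but has no SSDs for journals']
```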

- Setup Scale-out Block Pool: Scale-out block Storage Pools provide highly available block storage via the iSCSI, Fibre Channel, and NVMeoF protocols, as well as via the native Ceph RBD protocol.

  - Create Cluster: Scale-out storage clusters deliver file, block, and S3 object storage and must consist of at least three (3) Storage Systems.

  - Create OSDs & Journals: Devices must be assigned to the cluster before Storage Pools can be provisioned. Systems that use HDDs must also include SSDs to accelerate write performance and metadata access via Journal Groups.

  - Create Pool: Create a scale-out block Storage Pool for provisioning Storage Volumes that client hosts can access via iSCSI, FC, or NVMeoF through any node of the cluster.

  - Create Storage Volume: Create one or more Storage Volumes from the scale-out block Storage Pool.

Provisioning Setup

Network Shares may be accessed via both the NFS and the SMB file storage protocols. A Storage Pool (scale-up or scale-out) must be created before Network Shares may be provisioned.

- Create Share: Create a Network Share in a Storage Pool to provide users with file storage via the SMB and NFS protocols.

  - Add NFS Access: Each Network Share may have one or more NFS client access entries. Each entry specifies an IP address or subnet that is allowed to access the share via the NFS protocol. The default [public] entry allows NFS access from all IP addresses.

  - Modify Network Share: Modify the Network Share to grant access to specific users and to tune it for a specific workload by adjusting the record size and compression mode.
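The NFS access rule above (each entry is an IP address or subnet, and the default [public] entry admits every address) can be modeled with Python's standard `ipaddress` module. This is an illustrative sketch rather than QuantaStor's implementation; spelling the [public] entry as the string "public" is an assumption.

```python
import ipaddress


def nfs_access_allowed(client_ip: str, entries: list[str]) -> bool:
    """Return True if client_ip matches any NFS client access entry.

    Each entry is a single address ("10.0.0.5"), a subnet ("10.0.0.0/24"),
    or "public", which (like the default [public] entry) matches every address.
    Malformed entries raise ValueError.
    """
    client = ipaddress.ip_address(client_ip)
    for entry in entries:
        if entry == "public":
            return True
        # ip_network() treats a bare address as a /32 (IPv4) or /128 (IPv6) network.
        network = ipaddress.ip_network(entry, strict=False)
        # Membership is False when the client and entry IP versions differ.
        if client in network:
            return True
    return False


if __name__ == "__main__":
    entries = ["192.168.10.0/24", "10.0.0.5"]
    print(nfs_access_allowed("192.168.10.42", entries))  # True
    print(nfs_access_allowed("10.0.0.6", entries))       # False
    print(nfs_access_allowed("172.16.1.1", ["public"]))  # True
```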

- Provision Block Storage: Block storage devices are referred to as Storage Volumes. Storage Volumes may be accessed via both the iSCSI and the FC protocols on systems with the required hardware. Since Storage Volumes are provisioned from Storage Pools, a pool (ZFS or Ceph) must be created first.

  - Create Storage Volume: Storage Volumes (also known as LUNs) can be accessed via the iSCSI, FC, and NVMeoF protocols. A Storage Volume must be assigned to one or more Hosts before it can be accessed.

  - Add Host: Add one or more Host entries (servers, VMs, etc.) with their associated initiator IQNs, NQNs, or FC WWPNs so that Storage Volumes can be assigned to them.

  - Assign Volumes: Once a Storage Volume has been provisioned, it must be assigned to one or more Hosts and/or Host Groups before it can be accessed.
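The block provisioning steps describe a small access model: a Host is identified by its initiator IQN, NQN, or FC WWPN, and a Storage Volume is reachable only by Hosts assigned to it directly or through a Host Group. The sketch below models that rule; all names are hypothetical, and initiators are classified only by their conventional shapes (an `iqn.` prefix for iSCSI, an `nqn.` prefix for NVMe, and 16 hex digits for a WWPN), not by full format validation.

```python
import re
from dataclasses import dataclass, field


def initiator_type(identifier: str) -> str:
    """Classify an initiator identifier by its conventional format."""
    ident = identifier.strip().lower()
    if ident.startswith("iqn."):
        return "iscsi"   # iSCSI Qualified Name
    if ident.startswith("nqn."):
        return "nvmeof"  # NVMe Qualified Name
    if re.fullmatch(r"[0-9a-f]{16}", ident.replace(":", "")):
        return "fc"      # 64-bit World Wide Port Name
    raise ValueError(f"unrecognized initiator identifier: {identifier!r}")


@dataclass
class Host:
    name: str
    initiators: list[str]

    def __post_init__(self) -> None:
        for ident in self.initiators:
            initiator_type(ident)  # raises ValueError on a malformed entry


@dataclass
class StorageVolume:
    name: str
    hosts: set[str] = field(default_factory=set)        # Hosts assigned directly
    host_groups: set[str] = field(default_factory=set)  # Host Groups assigned


def can_access(host: Host, volume: StorageVolume, group_members: dict[str, set[str]]) -> bool:
    """A Host can reach a volume only if assigned directly or through a Host Group."""
    if host.name in volume.hosts:
        return True
    return any(host.name in group_members.get(group, set()) for group in volume.host_groups)


if __name__ == "__main__":
    groups = {"vmware-cluster": {"esx1", "esx2"}}
    vol = StorageVolume("vol1", host_groups={"vmware-cluster"})
    esx1 = Host("esx1", ["iqn.1998-01.com.vmware:esx1"])
    db1 = Host("db1", ["21:00:00:24:ff:4c:aa:01"])
    print(initiator_type(esx1.initiators[0]))  # iscsi
    print(can_access(esx1, vol, groups))       # True
    print(can_access(db1, vol, groups))        # False
```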

Misc Setup

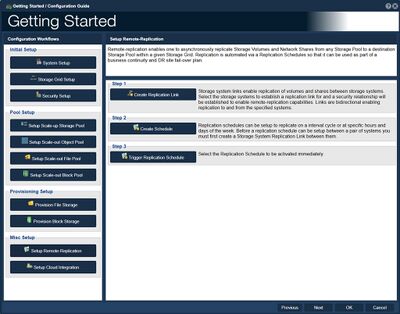

- Setup Remote-Replication: Remote replication enables you to asynchronously replicate Storage Volumes and Network Shares from any Storage Pool to a destination Storage Pool within a given Storage Grid. Replication is automated via Replication Schedules so that it can be used as part of a business continuity and disaster recovery (DR) site failover plan.

  - Create Replication Link: Storage System links enable replication of volumes and shares between Storage Systems. Select the Storage Systems to link, and a security relationship is established between them to enable remote replication. Links are bidirectional, enabling replication to and from the specified systems.

  - Create Schedule: Replication Schedules can be set up to replicate on an interval cycle or at specific hours and days of the week. Before a Replication Schedule can be set up between a pair of systems, you must first create a Storage System Replication Link between them.

  - Trigger Replication Schedule: Select the Replication Schedule to activate immediately.
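The two schedule styles (a fixed interval, or specific hours on specific days of the week) and the requirement that a Replication Link exist first can be sketched as follows. `ReplicationSchedule` and `create_schedule` are hypothetical names used only to illustrate how a next-run time could be computed; they do not reflect QuantaStor's scheduler internals.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class ReplicationSchedule:
    source: str
    target: str
    interval: Optional[timedelta] = None             # interval-cycle mode
    hours: set[int] = field(default_factory=set)     # hour-of-day mode, 0-23
    weekdays: set[int] = field(default_factory=set)  # 0 = Monday ... 6 = Sunday

    def next_run(self, last_run: datetime) -> datetime:
        """Return the next time this schedule should fire after last_run."""
        if self.interval is not None:
            return last_run + self.interval
        # Step forward one hour at a time; a full week covers every slot.
        candidate = last_run.replace(minute=0, second=0, microsecond=0) + timedelta(hours=1)
        for _ in range(24 * 7):
            if candidate.hour in self.hours and candidate.weekday() in self.weekdays:
                return candidate
            candidate += timedelta(hours=1)
        raise ValueError("schedule has no matching hours and weekdays")


def create_schedule(links: set[frozenset[str]], schedule: ReplicationSchedule) -> ReplicationSchedule:
    """Refuse to create a schedule unless a Replication Link joins the two systems."""
    # Links are bidirectional, so an unordered pair (frozenset) identifies one.
    if frozenset({schedule.source, schedule.target}) not in links:
        raise ValueError(f"no Replication Link between {schedule.source} and {schedule.target}")
    return schedule


if __name__ == "__main__":
    links = {frozenset({"qs-site-a", "qs-site-b"})}
    weekend = create_schedule(
        links, ReplicationSchedule("qs-site-b", "qs-site-a", hours={1}, weekdays={5, 6})
    )
    print(weekend.next_run(datetime(2024, 11, 14, 18, 21)))  # 2024-11-16 01:00:00
```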

- Setup Cloud Integration: Storage Systems can be connected to one or more major public cloud providers to provide local NAS gateway access to object storage and automated backup and tiering via Backup Policies.

  - Add Cloud Provider Credential: Add the access key for one or more of your cloud storage accounts so that new Cloud Storage Containers can be created or imported.

  - Add/Import Cloud Storage Container: Import existing object storage from your cloud provider to make it accessible as a Network Share via the NFS and SMB protocols.

  - Create Cloud Storage Container: Creates a new bucket/container in the cloud and maps it to a new Cloud Storage Container.

  - Create Backup Policy: Create a Backup Policy that automatically copies or moves data into the cloud.

For Additional Information... Getting Started Overview