Template:SetupStep Warning Before Creating OSDs Journals: Difference between revisions



Revision as of 00:01, 19 March 2016

Note Before Creating OSDs and Ceph Journals

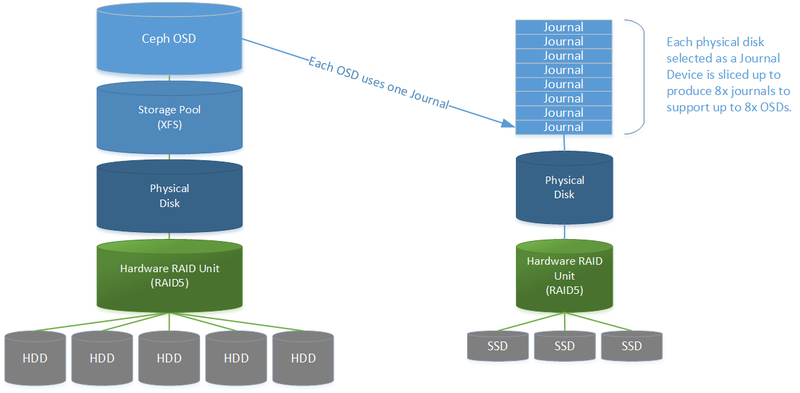

The Object Storage Daemons (OSDs) store data, while the Journals accelerate write performance: all writes flow to the journal and are complete from the client's perspective as soon as the journal write completes. A quick review of OSD and Journal requirements in a QuantaStor/Ceph Scale-out Block and Object configuration:

- QuantaStor maps OSDs to XFS Storage Pools on a one-to-one basis

- Journals map to a partition on a Physical Device such as an SSD or NVMe device

- Each Appliance should have at least 3 Storage Pools/OSDs to satisfy minimum Placement Group redundancy requirements, with between 3 and 25 OSDs per Appliance (15 to 125 disks in RAID5 units)

- At least 1 SSD Journal device is needed for every 8 OSDs (4 OSDs per Journal device is the optimal maximum)
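The sizing rules above can be turned into a quick planning check. The following is a minimal sketch, not a QuantaStor tool: the function name `plan_appliance` and the constant names are hypothetical, and the figure of 5 disks per RAID5 unit is only inferred from the 15 to 125 disk range quoted for 3 to 25 OSDs.

```python
import math

# Limits taken from the list above.
MIN_OSDS_PER_APPLIANCE = 3
MAX_OSDS_PER_APPLIANCE = 25
MAX_OSDS_PER_JOURNAL = 8      # hard ceiling per SSD journal device
OPTIMAL_OSDS_PER_JOURNAL = 4  # recommended ceiling per SSD journal device

# Assumption: 5 disks per RAID5 unit, inferred from "15 to 125 disks"
# for 3 to 25 OSDs. Adjust to match the actual RAID layout.
DISKS_PER_RAID5_UNIT = 5


def plan_appliance(osd_count: int) -> dict:
    """Return disk and journal device counts for one appliance (hypothetical helper)."""
    if not MIN_OSDS_PER_APPLIANCE <= osd_count <= MAX_OSDS_PER_APPLIANCE:
        raise ValueError(
            f"OSD count must be between {MIN_OSDS_PER_APPLIANCE} and "
            f"{MAX_OSDS_PER_APPLIANCE}, got {osd_count}"
        )
    return {
        "osds": osd_count,
        # One XFS Storage Pool per OSD, each backed by one RAID5 unit.
        "data_disks": osd_count * DISKS_PER_RAID5_UNIT,
        # Fewest journal devices allowed by the 8-OSDs-per-journal rule.
        "min_journal_devices": math.ceil(osd_count / MAX_OSDS_PER_JOURNAL),
        # Journal devices needed to stay at 4 OSDs per journal or fewer.
        "optimal_journal_devices": math.ceil(osd_count / OPTIMAL_OSDS_PER_JOURNAL),
    }


if __name__ == "__main__":
    print(plan_appliance(12))
```

For an appliance with 12 OSDs, this sketch reports 60 data disks, a minimum of 2 Journal devices, and 3 Journal devices at the optimal ratio. The journal counts here are logical devices; if each Journal device is itself a RAID set, the number of physical SSDs is higher.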

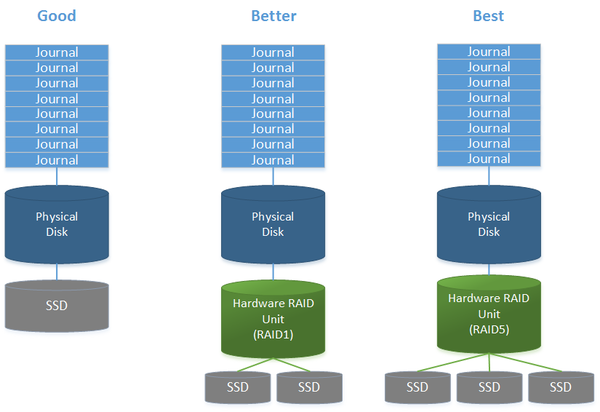

While stand-alone SSD devices can be used as Journal Devices, in practice it's better to use hardware RAID to protect and accelerate the journal device. Using RAID1 with two SSDs makes the journal device easy to maintain with no downtime for the node. Going further, RAID5 provides fault tolerance and a large boost in write performance for a relatively small increase in hardware cost.