Object Storage Setup


[[Category:start_guide]]

| + | |||

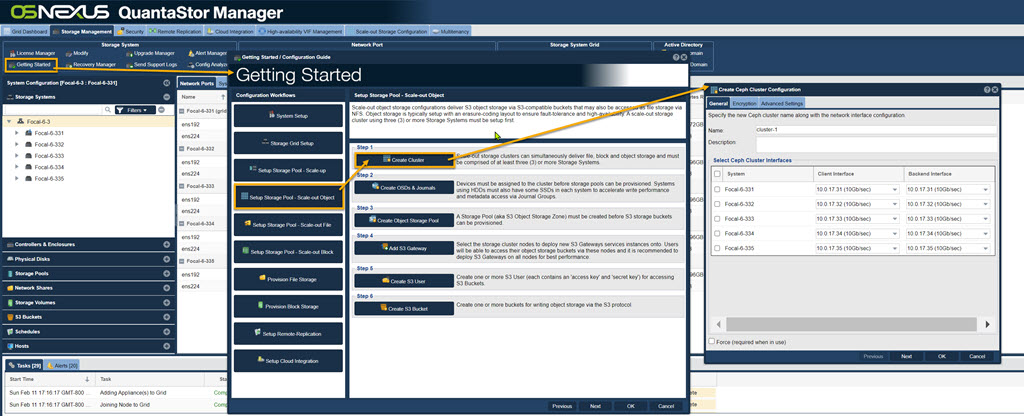

The dialogs for each step can be found through the Getting Started dialog or from the toolbar dialogs. Both paths are shown in the sections below. We recommend the Getting Started path for initial creation because it walks you through the steps in the proper order.


''' OSNEXUS Videos '''

* [[Image:youtube_icon.png|50px|link=https://www.youtube.com/watch?v=VpfcjZDO3Ys]] [https://www.youtube.com/watch?v=VpfcjZDO3Ys Covers Designing Ceph clusters with the QuantaStor solution design web app [17:44]]
* [[Image:youtube_icon.png|50px|link=https://www.youtube.com/watch?v=oxSJLoqOVGA]] [https://www.youtube.com/watch?v=oxSJLoqOVGA Covers QuantaStor 6: Deploying a Ceph-based S3 Object Storage Cluster in minutes. [19:16]]


=== Create Object Storage Cluster ===

QuantaStor uses open-source Ceph technology to deliver Scale-out Object Storage (S3/SWIFT) and Scale-out Block Storage (iSCSI). Before provisioning scale-out storage, one must set up one or more Ceph Clusters within a QuantaStor storage grid. A Ceph Cluster should comprise at least three (3) Storage Systems within the grid. A single-node Ceph Cluster can be created, but it is recommended only for test configurations.
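The sizing guidance above can be restated as a small check. This Python sketch is purely illustrative: `classify_ceph_cluster` and its return labels are hypothetical names, not part of QuantaStor, and the two-system case (which this guide does not discuss) is simply labeled as below the recommended minimum.

```python
def classify_ceph_cluster(num_systems: int) -> str:
    """Classify a proposed Ceph Cluster by its number of Storage Systems.

    Hypothetical helper restating this guide's sizing advice:
    three or more systems for production, one system for testing only.
    """
    if num_systems < 1:
        raise ValueError("a Ceph Cluster needs at least one Storage System")
    if num_systems >= 3:
        return "recommended"
    if num_systems == 1:
        return "test-only"
    # Two systems: not covered by this guide; below the recommended minimum of three.
    return "below-minimum"


for n in (1, 2, 3, 5):
    print(n, classify_ceph_cluster(n))
```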

Note also that the network ports on each System should be configured so that a dedicated high-speed back-end network can be used for inter-node communication. We recommend that the back-end network have double the bandwidth of the front-end network. Both the front-end and back-end network ports should be bonded ports for improved performance and high availability. The same network can be used for both the front-end and back-end, but this is not optimal.
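To make the two-to-one bandwidth guideline concrete, the sketch below compares the nominal aggregate bandwidth of a front-end bond and a back-end bond. The `BondedNetwork` class, the port counts and the port speeds are hypothetical example values. Real bond throughput also depends on the bonding mode and traffic hashing (a single connection is often limited to one member port), so treat this as a planning check only.

```python
from dataclasses import dataclass


@dataclass
class BondedNetwork:
    """A bonded network: number of member ports and per-port speed in Gbit/s."""
    ports: int
    port_speed_gbps: float

    @property
    def aggregate_gbps(self) -> float:
        # Nominal aggregate bandwidth of the bond (ports x per-port speed).
        return self.ports * self.port_speed_gbps


def backend_meets_guideline(front_end: BondedNetwork, back_end: BondedNetwork) -> bool:
    """Return True if the back-end bond has at least double the front-end bandwidth."""
    return back_end.aggregate_gbps >= 2 * front_end.aggregate_gbps


front = BondedNetwork(ports=2, port_speed_gbps=10)  # hypothetical 2 x 10GbE bond = 20 Gbit/s
back = BondedNetwork(ports=2, port_speed_gbps=25)   # hypothetical 2 x 25GbE bond = 50 Gbit/s
print(backend_meets_guideline(front, back))  # True
```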

[[File:Get Started Create Clstr.jpg|1024px]]

''- or -''

[[File:Create Ceph Cluster 6.jpg|1024px]]

=== Select Data Devices ===

Scale-out Storage Pools and Zones store their data in object storage devices called '''OSD'''s ('''O'''bject '''S'''torage '''D'''aemons). Each Physical Disk assigned to the Ceph Cluster as a data device becomes an OSD. To accelerate write performance, each System must have at least one SSD assigned as a '''WAL''' ('''W'''rite-'''A'''head '''L'''ogging) Device; all writes are logged to an OSD's associated WAL Device first. Press the button to the left to add OSDs and WAL Devices to the Ceph Cluster.

Note: the "Auto Config" button, highlighted in red, makes the proper selections for you.
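The two rules in this section (each data disk becomes one OSD, and each System needs at least one SSD WAL Device) can be sketched as a simple plan check. This is not how QuantaStor's Auto Config works internally; the `Disk` class, the System names and the device names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Disk:
    name: str     # hypothetical device name, e.g. "sdb"
    is_ssd: bool
    role: str     # "osd" for a data device, "wal" for a WAL Device


def osd_counts(systems: dict[str, list[Disk]]) -> dict[str, int]:
    """Return the number of OSDs per System.

    Raises ValueError if any System has no SSD assigned as a WAL Device.
    """
    counts = {}
    for system, disks in systems.items():
        if not any(d.role == "wal" and d.is_ssd for d in disks):
            raise ValueError(f"{system}: assign at least one SSD as a WAL Device")
        # Each Physical Disk assigned as a data device becomes one OSD.
        counts[system] = sum(1 for d in disks if d.role == "osd")
    return counts


plan = {
    f"node{i}": [Disk("sdb", False, "osd"), Disk("sdc", False, "osd"),
                 Disk("nvme0n1", True, "wal")]
    for i in (1, 2, 3)
}
print(osd_counts(plan))  # {'node1': 2, 'node2': 2, 'node3': 2}
```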

| + | |||

| + | |||

| + | [[File:Get Started Create OSD.jpg|1024px]] | ||

| + | |||

| + | ''- or -'' | ||

| + | |||

| + | [[File:Create OSDs & Journals Web 6.jpg|1024px]] | ||

| + | |||

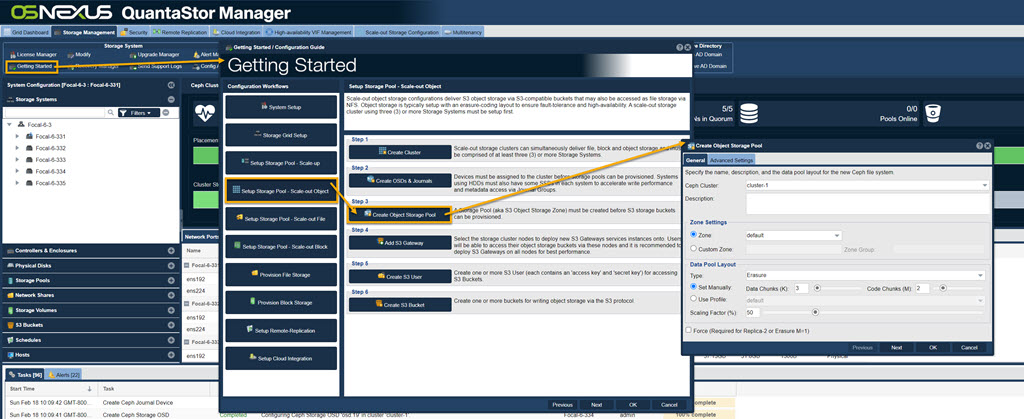

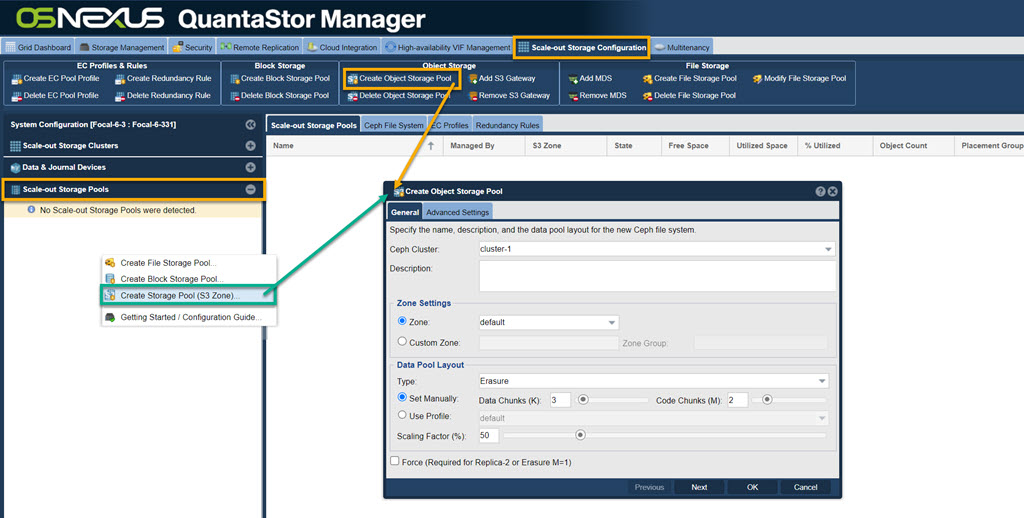

=== Create S3 Object Storage Zone ===


To access the cluster via the S3 object storage protocol, a Zone must be created. Press the "Create Object Storage Pool" button to create an S3 object storage pool and a Zone within the cluster.

| + | |||

| + | |||

| + | [[File:Get Start Create Obj Strg Pool.jpg|1024px]] | ||

| + | |||

| + | ''- or - '' | ||

| + | |||

| + | [[File:Create Obj Strg Pool.jpg|1024px]] | ||

| + | |||

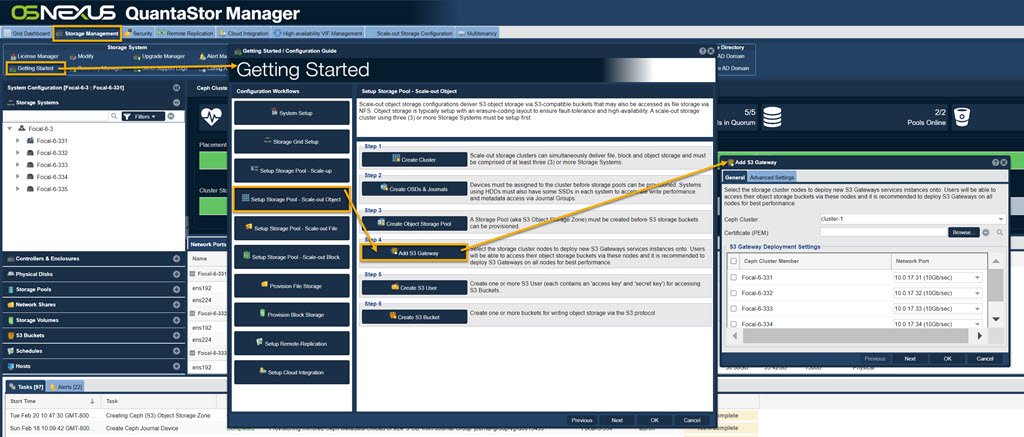

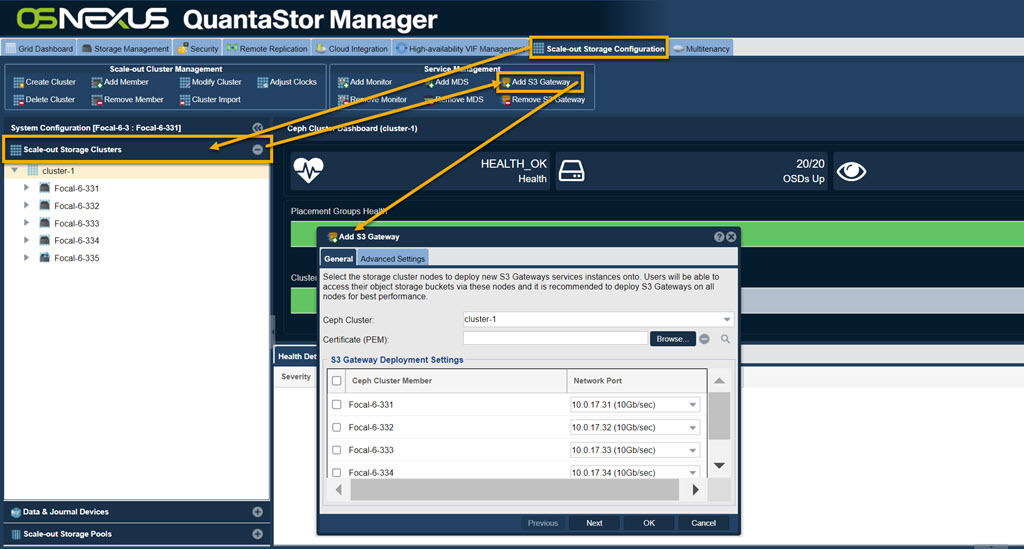

=== Add S3 Gateways ===

Select the Ceph Cluster to which the new S3 Gateway will be added, and specify its network interface configuration.
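Once a gateway is running, S3 clients reach it through the network interface configured here. When clients address the gateway by IP address rather than by a DNS name, path-style URLs (bucket in the path, not in the host name) are the practical choice, because virtual-hosted-style URLs need host names of the form `bucket.host`. The sketch below builds path-style URLs; the helper name, address and port are placeholders, so substitute whatever your gateway's interface configuration actually exposes.

```python
from urllib.parse import quote


def s3_object_url(gateway_host: str, bucket: str, key: str = "",
                  port: int = 80, use_https: bool = False) -> str:
    """Build a path-style S3 URL: scheme://host:port/bucket/key.

    Hypothetical helper; the default port is a placeholder, not a QuantaStor default.
    """
    scheme = "https" if use_https else "http"
    path = "/" + quote(bucket)
    if key:
        # Keep "/" in object keys unescaped so they read as path separators.
        path += "/" + quote(key, safe="/")
    return f"{scheme}://{gateway_host}:{port}{path}"


print(s3_object_url("10.0.0.21", "backups", "2024/db dump.tar"))
# http://10.0.0.21:80/backups/2024/db%20dump.tar
```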


[[File:Get Start Add S3 Gateway.jpg|1024px]]

''- or -''

[[File:Add S3 Gateway Web Page.jpg|1024px]]

| − | + | === Add S3 Users === | |

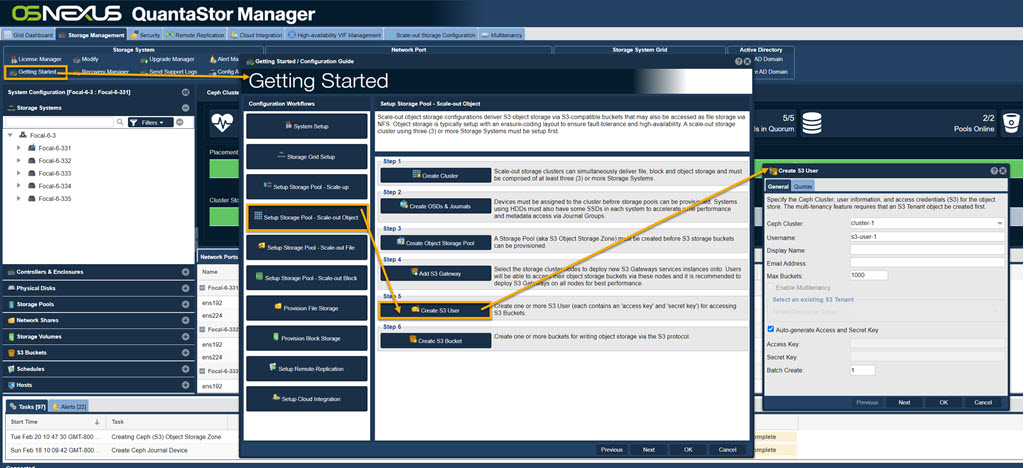

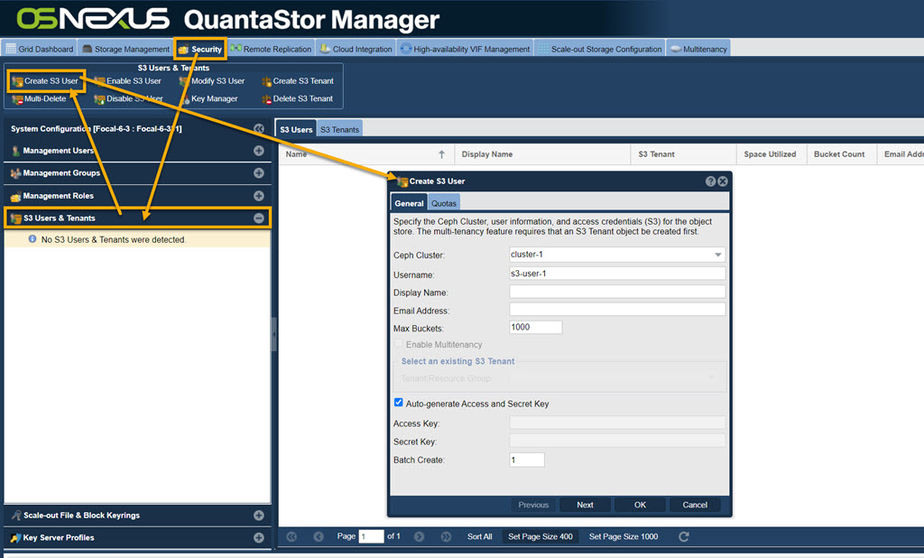

After the Zone has been created, users must be given an access key and a secret key in order to create buckets and objects via the S3 protocol. Press the button to the left to add an S3 Object User Access Entry to the Zone.
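S3 clients do not send the secret key itself; they use it to sign each request. Most current clients use AWS Signature Version 4, which Ceph's RADOS Gateway supports. As an illustration of what the access key / secret key pair is used for, the sketch below derives a SigV4 signing key with the Python standard library. The secret key, date and region are placeholder values (the region a client should use depends on how the gateway is configured), the real string to sign is built from a canonical form of the request (not shown), and in practice an S3 SDK or CLI performs this step for you.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def sigv4_signing_key(secret_key: str, date_stamp: str, region: str,
                      service: str = "s3") -> bytes:
    """Derive an AWS Signature Version 4 signing key (date_stamp is YYYYMMDD).

    Chain: "AWS4" + secret -> date -> region -> service -> "aws4_request".
    """
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


def sign_string(signing_key: bytes, string_to_sign: str) -> str:
    """Return the hex-encoded signature that goes into the Authorization header."""
    return hmac.new(signing_key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()


# Placeholder credentials and region, for illustration only.
key = sigv4_signing_key("EXAMPLE-SECRET-KEY", "20240220", "us-east-1")
print(sign_string(key, "example string to sign"))
```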


[[File:Get Start Create S3 User.jpg|1024px]]

''- or -''

[[File:Create S3 User-Web.jpg|924px]]

| − | + | === Create S3 Bucket === | |

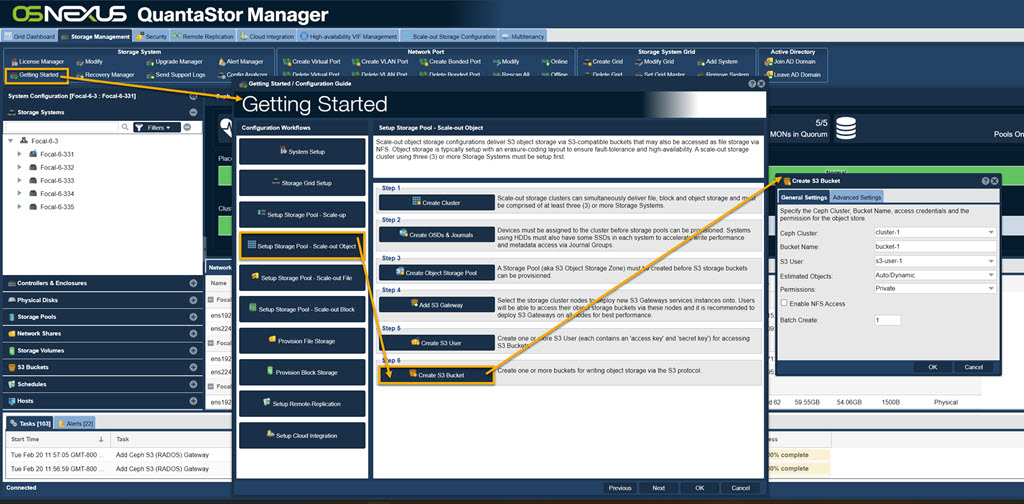

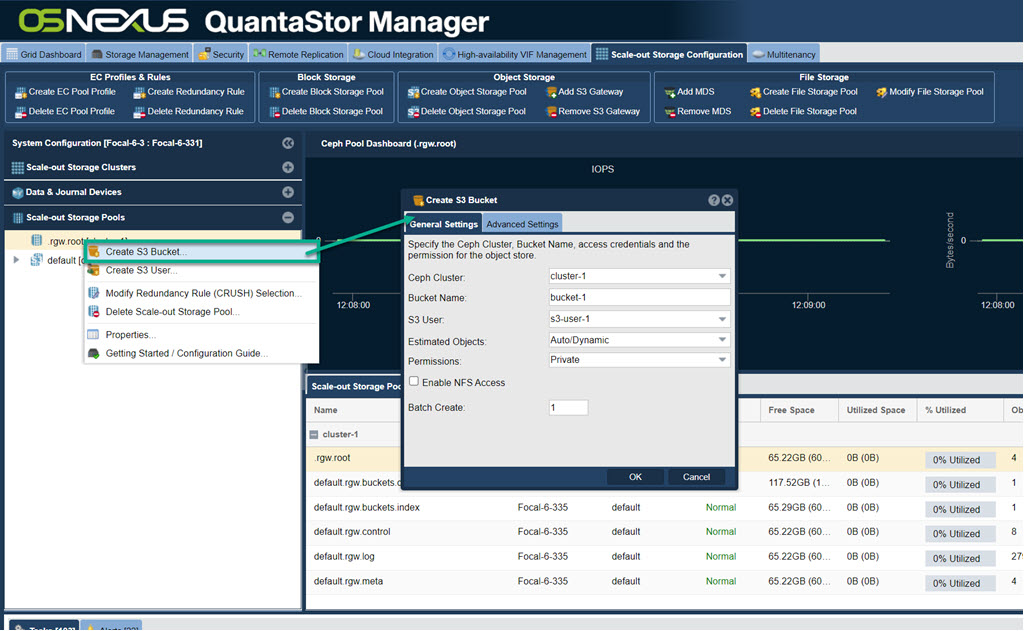

Create one or more buckets to hold the objects written via the S3 protocol.
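Bucket names appear in request paths and, with virtual-hosted-style addressing, in DNS host names, so DNS-compatible names are the safest choice. The sketch below checks a conservative subset of the AWS naming rules: 3 to 63 characters; lowercase letters, digits, dots and hyphens only; starting and ending with a letter or digit; no adjacent dots; not formatted as an IPv4 address. The gateway may enforce stricter or more relaxed rules depending on its configuration, so treat this as an approximation; the function name is hypothetical.

```python
import ipaddress
import re

# 3-63 chars: a letter/digit, 1-61 of [a-z0-9.-], then a letter/digit.
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def is_dns_compatible_bucket_name(name: str) -> bool:
    """Check a bucket name against a conservative subset of AWS-style naming rules."""
    if not _BUCKET_RE.fullmatch(name):
        return False
    if ".." in name:
        return False
    # Names formatted like an IPv4 address (e.g. 192.168.5.4) are not allowed.
    try:
        ipaddress.IPv4Address(name)
        return False
    except ipaddress.AddressValueError:
        return True


for candidate in ("backups-2024", "Backups", "192.168.5.4"):
    print(candidate, is_dns_compatible_bucket_name(candidate))
```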

[[File:Get Start Create Bucket.jpg|1024px]]

''- or -''

[[File:Create S3 Bckt.jpg|1024px]]

{{Template:ReturnToWebGuide}}

[[Category:WebUI Dialog]]
[[Category:QuantaStor6]]
[[Category:Requires Review]]

Latest revision as of 13:05, 20 February 2024

The dialogs for each step can be found through the Getting Started dialog or from toolbar dialogs. I will demonstrate each path in the sections below. We recommend the Getting Started path for initial creation since it walks you through the proper order of installation.

OSNEXUS Videos

Contents

Create Object Storage Cluster

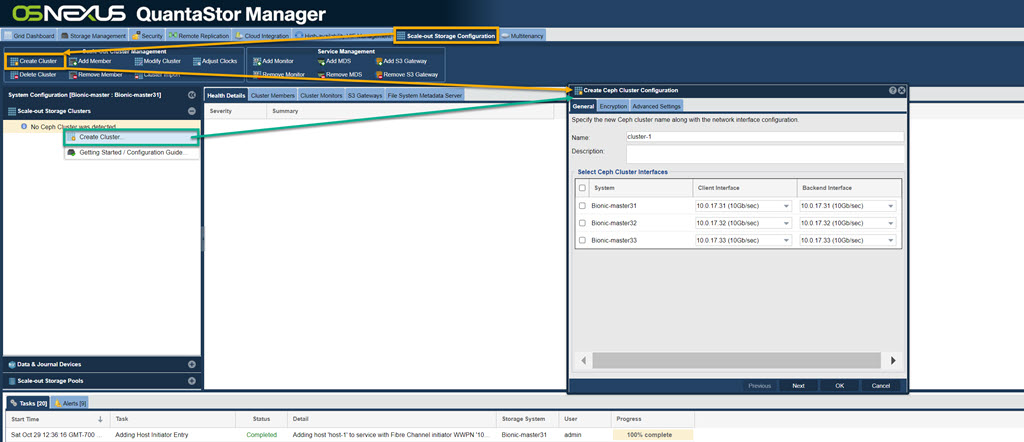

QuantaStor leverages open storage Ceph technology to deliver Scale-out Object Storage (S3/SWIFT) and Scale-out Block Storage (iSCSI). Before provisioning scale-out storage solutions, one must set up one or more Ceph Clusters within a QuantaStor storage grid. Ceph Clusters should be comprised of at least three (3) or more Storage Systems within the grid. It is possible to create a single-node Ceph Cluster, but these are recommended only for test configurations.

Note also that one should set up the network ports on each System so that a dedicated high speed back-end network may be used for inter-node communication. This back-end network is recommended to have double the bandwidth of the front-end network. Both the front-end and back-end network ports should bonded ports for improved performance and high-availability. The same network may be used for the front-end and back-end network but this is not optimal.

- or -

Select Data Devices

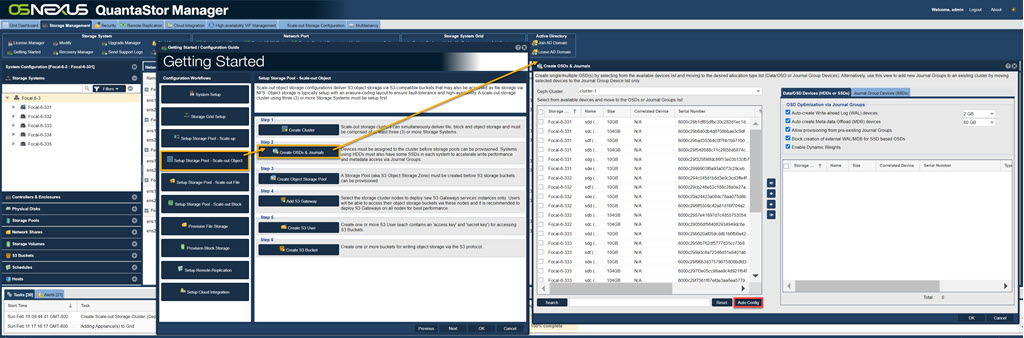

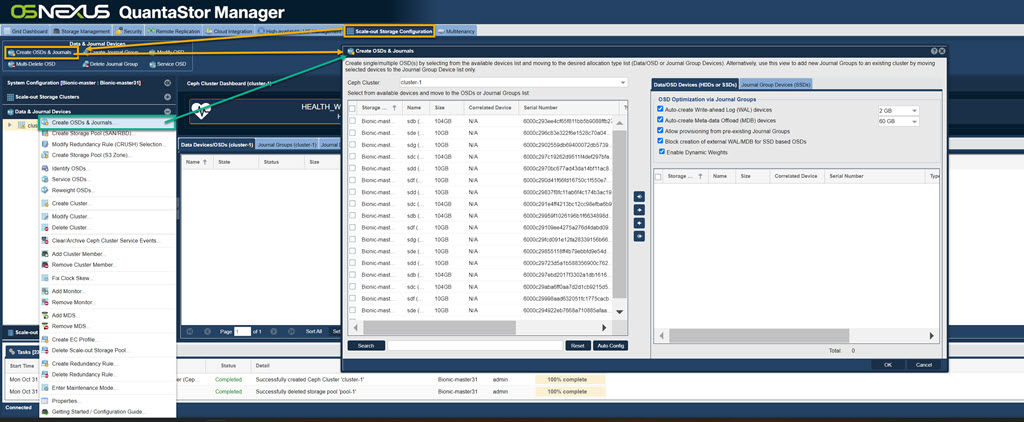

Scale-out Storage Pools and Zones store their data in object storage devices called OSDs (Object Storage Daemon). Each Physical Disk assigned to the Ceph Cluster as a data device becomes an OSD. To accelerate write performance each System must have at least one SSD device assigned as a WAL (Write-Ahead Logging) Device. All writes are logged to an OSD's associated WAL Device first. Press the button to the left to add OSDs and WAL devices to the Ceph Cluster. Note, using the "Auto Config" button, highlighted in red, makes the proper selections for you.

- or -

Create S3 Object Storage Zone

To access the cluster via the S3 object storage protocols a Zone must be created. Press the button "Create Object Storage Pool" to create an S3 object storage pool and a zone within the cluster.

- or -

Add S3 Gateways

Select the Ceph Cluster to add a new S3 Gateway to and specify the network interface configuration.

- or -

Add S3 Users

After the Zone has been created users must be given an access key and secret key in order to create buckets and objects via the S3 protocols. Press the button to the left to add a S3 Object User Access Entry to the Zone.

-- or --

Create S3 Bucket

Create one or more buckets for writing object storage via the S3 protocol.

-- or --