Scale-out Storage Configuration Section

Revision as of 19:12, 22 August 2019

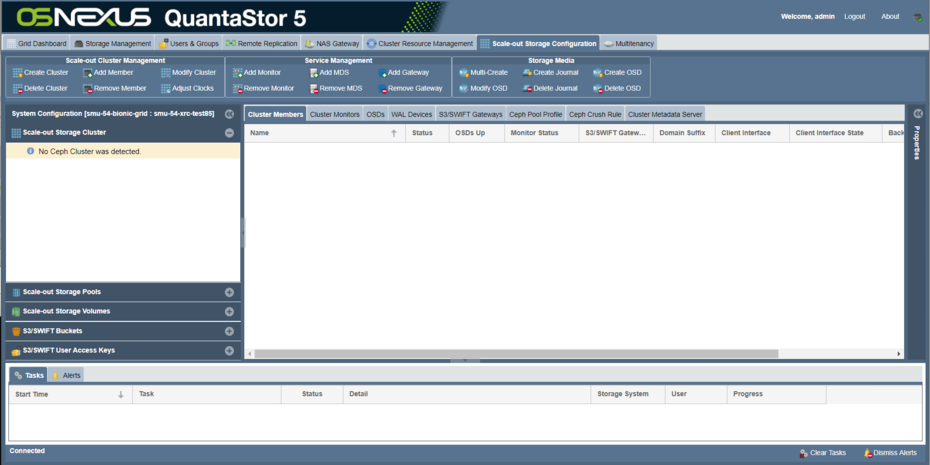

The Scale-out Storage Configuration section of the web management interface is where Ceph clusters are set up and configured. In this section one may create clusters, configure OSDs and journals, and allocate pools for file, block, and object storage access.

Cluster Management

QuantaStor makes creating a scale-out cluster a simple, single-dialog operation: the servers are selected along with the front-end and back-end network ports used for cluster communication.
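Ceph distinguishes a public network (client and monitor traffic) from an optional cluster network (OSD replication and recovery traffic). Assuming the front-end ports chosen in the dialog map to Ceph's public network and the back-end ports to its cluster network, the following Python sketch shows the kind of sanity check one might run when planning subnets. The function name `check_cluster_networks` and the example subnets are hypothetical; this is not part of the QuantaStor interface.

```python
import ipaddress


def check_cluster_networks(front_end: str, back_end: str) -> None:
    """Raise ValueError if the front-end and back-end subnets are invalid or overlap.

    Assumption (not confirmed by QuantaStor docs): front_end corresponds to Ceph's
    public network and back_end to its cluster network.
    """
    # ip_network() is strict by default, so host bits in the address raise ValueError.
    public = ipaddress.ip_network(front_end)
    cluster = ipaddress.ip_network(back_end)
    if public.version != cluster.version:
        raise ValueError("front-end and back-end networks must use the same IP version")
    if public.overlaps(cluster):
        raise ValueError(f"{public} overlaps {cluster}; use separate subnets")


if __name__ == "__main__":
    check_cluster_networks("10.0.10.0/24", "10.0.20.0/24")  # passes silently
    try:
        check_cluster_networks("10.0.0.0/16", "10.0.20.0/24")
    except ValueError as err:
        print(err)  # prints: 10.0.0.0/16 overlaps 10.0.20.0/24; use separate subnets
```

Keeping the two subnets separate lets replication traffic flow on the back-end ports without competing with client I/O on the front-end ports.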

Monitor Management

QuantaStor automatically configures three servers as Ceph Monitors when the cluster is created. Additional monitors may be added or removed, but every cluster requires a minimum of three monitors.
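Ceph Monitors keep the cluster map consistent through a majority (Paxos) quorum: the cluster stays available only while more than half of the monitors can reach each other. The short Python sketch below illustrates that arithmetic (it is not QuantaStor code; `monitor_quorum` is a hypothetical helper) by computing the quorum size and the number of monitor failures a given monitor count can tolerate.

```python
def monitor_quorum(num_monitors: int) -> tuple[int, int]:
    """Return (quorum_size, tolerated_failures) for a majority-quorum monitor set."""
    if num_monitors < 1:
        raise ValueError("need at least one monitor")
    quorum = num_monitors // 2 + 1          # strict majority of all monitors
    return quorum, num_monitors - quorum    # monitors that may be down at once


if __name__ == "__main__":
    for n in range(3, 8):
        q, f = monitor_quorum(n)
        print(f"{n} monitors: quorum {q}, tolerates {f} failure(s)")
```

Running it shows that four monitors tolerate only one failure, the same as three, which is why odd monitor counts are the usual choice when adding monitors.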

OSD & Journal Management

OSDs and journal devices are all based on the BlueStore storage backend. QuantaStor also supports existing systems with FileStore-based OSDs, but new OSDs are always configured to use BlueStore.
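Because an existing cluster may contain a mix of FileStore and BlueStore OSDs, it can help to see which backend each OSD uses. Ceph reports this in the `osd_objectstore` field of `ceph osd metadata`. The Python sketch below groups OSD ids by that field; the JSON sample is made up and heavily abbreviated, and the helper name `osds_by_backend` is hypothetical. On a live node one would feed it the output of `ceph osd metadata -f json` instead.

```python
import json
from collections import defaultdict

# Made-up, abbreviated sample in the shape of `ceph osd metadata -f json` output;
# real output contains many more keys per OSD.
SAMPLE = """
[
  {"id": 0, "hostname": "node1", "osd_objectstore": "bluestore"},
  {"id": 1, "hostname": "node1", "osd_objectstore": "filestore"},
  {"id": 2, "hostname": "node2", "osd_objectstore": "bluestore"}
]
"""


def osds_by_backend(metadata_json: str) -> dict[str, list[int]]:
    """Group OSD ids by their object store backend (e.g. bluestore, filestore)."""
    groups = defaultdict(list)
    for osd in json.loads(metadata_json):
        # OSDs missing the field are reported under "unknown".
        groups[osd.get("osd_objectstore", "unknown")].append(osd["id"])
    return dict(groups)


if __name__ == "__main__":
    backends = osds_by_backend(SAMPLE)
    print(backends)  # {'bluestore': [0, 2], 'filestore': [1]}
    legacy = backends.get("filestore", [])
    if legacy:
        print(f"FileStore OSDs still present: {legacy}")
```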

- Automated OSD and Journal/WAL Device Setup / Multi-OSD Create

- Create a single OSD

- Delete an OSD

- Create a WAL/Journal Device

- Delete a WAL/Journal Device
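This page does not describe how the automated multi-OSD create pairs data devices with WAL/journal devices. Purely as a hypothetical illustration, the Python sketch below spreads OSDs evenly across the available WAL/journal devices in round-robin order; the device paths and the function name `assign_wal_devices` are invented, and QuantaStor's real placement rules may differ.

```python
from itertools import cycle


def assign_wal_devices(data_devices: list[str], wal_devices: list[str]) -> dict[str, str]:
    """Map each data device (one OSD each) to a WAL/journal device, round-robin.

    Hypothetical illustration only; not QuantaStor's actual placement logic.
    """
    if not wal_devices:
        raise ValueError("at least one WAL/journal device is required")
    wal_iter = cycle(wal_devices)  # endlessly repeats the WAL device list in order
    return {data: next(wal_iter) for data in data_devices}


if __name__ == "__main__":
    hdds = ["/dev/sdb", "/dev/sdc", "/dev/sdd", "/dev/sde", "/dev/sdf"]
    ssds = ["/dev/nvme0n1", "/dev/nvme1n1"]
    for data, wal in assign_wal_devices(hdds, ssds).items():
        print(f"OSD on {data} -> WAL/journal on {wal}")
```

An even spread matters because losing a WAL/journal device takes down every OSD that depends on it. With five OSDs and two devices, this sketch places three OSDs on the first device and two on the second.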

Scale-out S3 Gateway (CephRGW) Management

- S3 Object Storage Setup

- Add S3 Gateway (RGW) to Cluster Member

- Remove S3 Gateway (RGW) from Cluster Member